The landscape of Artificial Intelligence has undergone a seismic shift, moving from the dominance of closed-source proprietary models to a vibrant ecosystem of open-source Large Language Models (LLMs). As we navigate 2025, these models have become primary drivers of enterprise innovation, offering unparalleled transparency, data security, and cost-efficiency. Based on the latest educational insights from Instaclustr, the “open-source revolution” is no longer a niche movement; it is the bedrock of modern AI deployment.

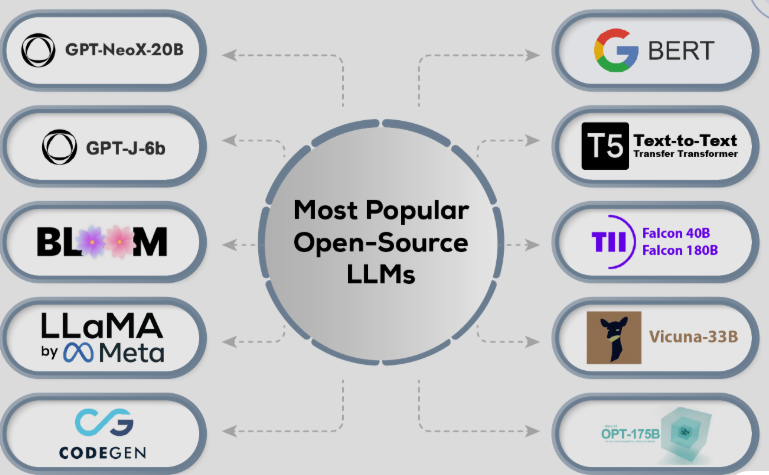

Top 10 Open-Source LLMs for 2025

This list of the most influential and high-performing open-source LLMs for 2025 spans models from lightweight edge-computing tools to massive, multi-modal powerhouses. According to industry research, the top 10 models are:

- Meta Llama 3 (and 3.1): The gold standard for general-purpose reasoning and dialogue.
- Google Gemma 2: A lightweight, high-performance series optimized for speed and efficiency.
- Cohere Command R+: The leader in Retrieval-Augmented Generation (RAG) and enterprise tool-use.
- Mistral Mixtral-8x22B: A state-of-the-art Mixture-of-Experts (MoE) model for balanced throughput.
- TII Falcon 2: A multi-modal innovator featuring vision-to-language capabilities.
- xAI Grok 1.5: Designed for long-context engagement and personality-driven interactions (xAI’s open-weights release is its predecessor, Grok-1).
- Alibaba Qwen 1.5: The premier choice for multilingual support across 30+ languages.
- BigScience BLOOM: A transparent, massive model built for global research and auditability.
- LMSYS Vicuna-13B: A cost-effective, chat-optimized model that its creators reported reaches roughly 90% of ChatGPT’s quality.
- EleutherAI GPT-NeoX: A foundational research model that remains a staple for developer customization.

Why Open-Source LLMs are Dominating the Enterprise in 2025

The adoption of open-source models is primarily driven by the need for sovereignty over data and infrastructure. Unlike closed-source models (like GPT-4), open-source LLMs allow developers to inspect weights, fine-tune models on proprietary data without sending that data to a third party, and deploy models on-premises or in private clouds. This shift has essentially democratized AI, allowing small-to-mid-sized enterprises (SMEs) to compete with tech giants by building bespoke solutions tailored to their specific industry needs.

Beyond privacy, open-source LLMs offer significant cost advantages. By eliminating the per-token billing of managed APIs, organizations can control their total cost of ownership (TCO) through infrastructure optimization: at high request volumes, a fixed infrastructure budget can undercut per-token pricing. Whether running a 7B-parameter model on a local workstation or a 400B model on a distributed cluster, open source provides the flexibility to scale horizontally as business demands grow.
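To make the TCO argument concrete, here is a back-of-the-envelope comparison between per-token API billing and a fixed monthly self-hosting budget. Every price below is a hypothetical placeholder, not a quote from any provider; substitute your own figures.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Monthly bill for a managed API that charges per token."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(self_hosted_usd_per_month: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume above which a fixed self-hosting budget becomes cheaper."""
    return self_hosted_usd_per_month / usd_per_million_tokens * 1_000_000


# Hypothetical placeholders, not real prices: a blended $5 per million tokens
# for the API versus $4,000/month for a self-hosted GPU server.
API_USD_PER_M = 5.0
SELF_HOSTED_USD = 4_000.0

print(f"Break-even: {break_even_tokens(SELF_HOSTED_USD, API_USD_PER_M):,.0f} tokens/month")
for volume in (100_000_000, 2_000_000_000):
    api = monthly_api_cost(volume, API_USD_PER_M)
    winner = "self-hosted" if SELF_HOSTED_USD < api else "API"
    print(f"{volume:,} tokens: API ${api:,.0f} vs self-hosted ${SELF_HOSTED_USD:,.0f} -> {winner}")
```

The model is deliberately crude: a real self-hosted budget steps up once one server's throughput is exhausted, and it should include engineering and operations time.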

Detailed Analysis of the Top 10 Models

1. Meta Llama 3 & 3.1: The Industry Pillar

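Meta’s release of the Llama family redefined what open-source models could achieve. Llama 3 arrived in 8B and 70B sizes, and Llama 3.1 added the massive 405B variant and extended the context window to 128K tokens. All sizes use a standard decoder-only transformer architecture enhanced by Grouped-Query Attention (GQA), in which several query heads share each key/value head to shrink the memory needed during inference. It is widely regarded as the best “all-rounder” for 2025.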

- Best For: General chat, complex reasoning, and long-form content generation.
- Key Advantage: A massive community ecosystem that ensures immediate support for tools like llama.cpp and vLLM.
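The inference benefit of GQA shows up in the key/value (KV) cache: because query heads share a smaller set of KV heads, fewer keys and values are stored per token. The sketch below uses the Llama 3 8B configuration (32 layers, 32 query heads, 8 KV heads, head dimension 128) and assumes a 16-bit cache for a single sequence.

```python
def kv_cache_bytes(num_tokens: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens of one sequence.

    The leading factor of 2 covers storing both a key vector and a value
    vector per KV head, per layer, per token.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


LAYERS, HEAD_DIM = 32, 128
CONTEXT = 8_192  # Llama 3 8B's original context length

mha = kv_cache_bytes(CONTEXT, LAYERS, num_kv_heads=32, head_dim=HEAD_DIM)  # one KV head per query head
gqa = kv_cache_bytes(CONTEXT, LAYERS, num_kv_heads=8, head_dim=HEAD_DIM)   # 4 query heads share each KV head

print(f"MHA cache: {mha / 2**30:.1f} GiB, GQA cache: {gqa / 2**30:.1f} GiB ({mha // gqa}x smaller)")
```

The saving scales linearly with context length and batch size, which is why GQA matters most for long prompts and busy servers.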

2. Google Gemma 2: Speed Meets Precision

Gemma 2 represents Google’s commitment to “open-weights” models. Launched in 9B and 27B parameter sizes (a 2B variant followed), Gemma 2 is designed to run at high speed on diverse hardware, from consumer-grade GPUs to large TPU deployments. It alternates sliding-window (local) attention layers with global attention layers: the local layers attend only to a fixed window of recent tokens, which keeps memory usage in check while the global layers preserve access to the full context.

- Best For: On-device applications, personal assistants, and edge-AI.
- Key Advantage: Incredible performance-to-size ratio, often outperforming models twice its size.
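Sliding window attention restricts each token to the most recent W positions instead of the whole prefix, so the keys a local layer must attend to (and cache) stay bounded by W. The mask below illustrates the idea; the window size is a toy value chosen for readability, not Gemma 2's actual configuration.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal: j <= i (no looking ahead).
    Sliding window: i - j < window (only the `window` most recent positions).
    """
    return [[j <= i and i - j < window for j in range(seq_len)] for i in range(seq_len)]


mask = sliding_window_causal_mask(seq_len=6, window=3)
for row in mask:
    print(" ".join("x" if allowed else "." for allowed in row))
# Each row has at most 3 "x" marks, however long the sequence grows.
```

A global attention layer corresponds to the same mask with `window` set to the full sequence length.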

3. Cohere Command R+: The RAG Specialist

Enterprise AI thrives on RAG (Retrieval-Augmented Generation), and Command R+ is purpose-built for this task. It features a massive 128K token context window and is natively optimized for “tool use,” meaning it can autonomously decide when to search a database or use a calculator to provide a factual answer.

- Best For: Enterprise search, customer support bots, and multi-step agentic workflows.
- Key Advantage: Grounded answers that cite the retrieved documents, which improves retrieval precision and helps reduce “hallucination.”
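Tool use generally works as a loop: the model emits a structured request naming a tool and its arguments, the application runs the tool, and the result is fed back to the model. The sketch below shows only the dispatch step. The JSON shape, the tool names, and the hard-coded “model output” are hypothetical stand-ins, not Cohere's API or Command R+'s actual output format.

```python
import json


def search_orders(customer_id: str) -> list[dict]:
    """Hypothetical stand-in for a database lookup."""
    fake_db = {"c-42": [{"order": "A-1001", "status": "shipped"}]}
    return fake_db.get(customer_id, [])


def add(a: float, b: float) -> float:
    """Hypothetical stand-in for a calculator tool."""
    return a + b


# Registry mapping the tool names the model may request to Python callables.
TOOLS = {"search_orders": search_orders, "add": add}


def dispatch(model_output: str) -> list[dict]:
    """Execute each call in a hypothetical JSON tool-call message.

    Returns one result record per call, ready to be sent back to the model
    as context for its final answer. Unknown tools yield an error record.
    """
    results = []
    for call in json.loads(model_output)["tool_calls"]:
        tool = TOOLS.get(call["name"])
        if tool is None:
            results.append({"name": call["name"], "error": "unknown tool"})
        else:
            results.append({"name": call["name"], "output": tool(**call["parameters"])})
    return results


# Pretend the model decided it needs a lookup and a calculation to answer.
model_output = json.dumps({"tool_calls": [
    {"name": "search_orders", "parameters": {"customer_id": "c-42"}},
    {"name": "add", "parameters": {"a": 2, "b": 3}},
]})
print(dispatch(model_output))
```

Returning an error record instead of raising lets the model see that a tool was unavailable and choose another path.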

4. Mistral Mixtral-8x22B: Efficiency Through Experts

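Mistral AI’s Mixture-of-Experts (MoE) approach is a technical marvel. Instead of activating all 141B parameters for every token, a router sends each token to 2 of the 8 experts in each layer, so only about 39B parameters are active per token. This provides much of the power of a large model with the speed of a much smaller one, making it an ideal candidate for high-throughput environments where latency is a concern. Note that all 141B parameters must still be held in memory: MoE saves compute per token, not VRAM.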

- Best For: High-volume text processing and cost-efficient scaling.
- Key Advantage: Significant savings on compute resources without sacrificing output quality.
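A minimal sketch of top-2 routing: a router scores every expert for the current token, only the two highest-scoring experts run, and their outputs are combined with a softmax over the two selected scores. The experts here are toy scalar functions and the router logits are made up; in Mixtral each expert is a feed-forward block inside every layer.

```python
import math
from collections.abc import Callable


def top_k_route(router_logits: list[float], k: int = 2) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts and softmax their logits into weights."""
    chosen = sorted(range(len(router_logits)), key=lambda e: router_logits[e], reverse=True)[:k]
    peak = max(router_logits[e] for e in chosen)  # subtract the max for numerical stability
    exps = {e: math.exp(router_logits[e] - peak) for e in chosen}
    total = sum(exps.values())
    return [(e, exps[e] / total) for e in chosen]


def moe_forward(x: float, experts: list[Callable[[float], float]],
                router_logits: list[float], k: int = 2) -> float:
    """Weighted sum over the selected experts only.

    Unselected experts are never called: that skipped work is the compute
    saving of a sparse MoE (their weights still have to sit in memory).
    """
    return sum(w * experts[e](x) for e, w in top_k_route(router_logits, k))


# Eight toy experts, matching Mixtral's 8 per layer; expert i multiplies by i + 1.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 1.0, 0.0, -0.3, 0.2]  # made-up router scores for one token

route = top_k_route(logits)
print(route)  # experts 1 and 4 are selected; the other 6 are skipped
print(moe_forward(1.0, experts, logits))
```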

5. TII Falcon 2: Breaking the Text Barrier

Developed by the Technology Innovation Institute (TII) in the UAE, Falcon 2 is a pioneer in the open-source multi-modal space. The Falcon 2 11B VLM (Vision-to-Language Model) can “see” images and describe them or answer questions about their content, bridging the gap between computer vision and natural language processing.

- Best For: Visual accessibility tools, document digitization, and multimodal research.
- Key Advantage: One of the few highly capable open-source models that handle visual inputs natively.

6. xAI Grok 1.5: High Context and Personality

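Grok 1.5, from Elon Musk’s xAI, focuses on long-context processing and a distinct, engaging personality. With the ability to handle up to 128,000 tokens, it is particularly useful for analyzing long documents or maintaining complex, multi-turn conversations without losing the thread of the topic. Note that the weights xAI has released openly belong to its predecessor, Grok-1, a 314B-parameter Mixture-of-Experts model with an 8K-token context window; Grok 1.5 itself has not been published as open weights.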

- Best For: Entertainment, marketing, and deep document analysis.
- Key Advantage: A unique conversational style that makes it more relatable in consumer-facing apps.

7. Alibaba Qwen 1.5: The Multilingual Leader

For global companies, Qwen 1.5 is indispensable. It supports over 30 languages and has been trained on a massive, diverse dataset that includes significant representation from non-English sources. This results in superior performance in translation and localized content creation.

- Best For: International customer service and global content localization.
- Key Advantage: Exceptional performance in Asian languages where Western models often struggle.

8. BigScience BLOOM: Full Transparency

BLOOM (BigScience Large Open-science Open-access Multilingual Language Model) is unique because of its focus on transparency. Every aspect of its training, from the dataset to the compute usage, is documented. With 176B parameters, it remains a heavyweight in the world of academic and ethical AI research.

- Best For: Research, ethical auditing, and highly regulated industries.
- Key Advantage: A truly open-science approach: the training data, code, and process are documented, so nothing about how the model was built is a “black box.”

9. LMSYS Vicuna-13B: The “People’s Model”

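Vicuna was created by fine-tuning Llama on roughly 70,000 user-shared conversations collected from ShareGPT. In a preliminary evaluation that used GPT-4 as the judge, LMSYS reported that the 13B model reached more than 90% of ChatGPT’s quality, suggesting that a relatively small model can compete with much larger ones when its instruction-tuning data is high quality.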

- Best For: Prototyping, small-scale chat applications, and educational tools.
- Key Advantage: Easy to run on a single consumer GPU (like an RTX 3090/4090) once quantized to 8-bit or 4-bit; at 16-bit its roughly 26GB of weights exceed a 24GB card.

10. EleutherAI GPT-NeoX: The Developer’s Canvas

GPT-NeoX-20B is a foundational model trained with EleutherAI’s GPT-NeoX library, a training framework that prioritizes modularity. It is the go-to choice for developers who want to experiment with different training schedules or architectures. While newer models outrank it on benchmarks, its codebase has served as the template for many other training runs and specialized fine-tunes.

- Best For: Model experimentation and specialized niche fine-tuning.
- Key Advantage: Permissive Apache 2.0 licensing and thoroughly documented training code.

Comparative Specs for 2025 LLMs

The following table provides a quick reference for the technical specifications of these leading models.

| Model | Parameters | Context Window (tokens) | Best Use Case |
|---|---|---|---|
| Llama 3.1 | 8B – 405B | 128K | General Purpose / Logic |
| Gemma 2 | 2B – 27B | 8K | High-Speed / Edge AI |
| Command R+ | 104B | 128K | Enterprise RAG / Agents |
| Mixtral 8x22B | 141B total, ~39B active (MoE) | 64K | Efficient Throughput |
| Falcon 2 | 11B | 8K | Multimodal / Vision |
| Grok-1 / Grok 1.5 | 314B MoE (Grok-1 open weights) | 8K (Grok-1), 128K (Grok 1.5) | Engagement / Long-Context |
| Qwen 1.5 | 0.5B – 110B | 32K | Multilingual Support |
| BLOOM | 176B | 2K | Global Research |
| Vicuna-13B | 13B | 2K | Chat / Consumer Hardware |
| GPT-NeoX | 20B | 2K | Dev Customization |

Infrastructure and Implementation: Tips for Success

Choosing a model is only the first step. To successfully implement an open-source LLM in 2025, organizations must consider their infrastructure stack. Managed open-source platforms, such as those provided by Instaclustr, offer the benefit of “managed operations” for the underlying data infrastructure (like Apache Kafka for data streaming or OpenSearch for vector storage), which are essential for feeding real-time data into your LLM.
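The retrieval half of such a pipeline, stripped to its core: embed the query, rank stored chunks by cosine similarity, and place the best matches into the prompt. In production a vector store such as OpenSearch would do the ranking and an embedding model would produce the vectors; the three-dimensional vectors and document texts below are invented for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vec: list[float], store: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k stored chunks most similar to the query."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that asks the LLM to answer from the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical (text, embedding) pairs, e.g. chunks indexed as they arrive from a stream.
store = [
    ("Order A-1001 shipped on Monday.", [0.9, 0.1, 0.0]),
    ("Refunds are accepted within 30 days.", [0.1, 0.9, 0.1]),
    ("Order A-1001 was delayed by weather.", [0.8, 0.2, 0.1]),
]
query_vec = [1.0, 0.1, 0.0]  # pretend embedding of the question below
print(build_prompt("Where is order A-1001?", retrieve(query_vec, store)))
```

Keeping the store fresh (for example, indexing new chunks as events arrive) is what lets the model answer from real-time data without retraining.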

Hardware Considerations

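- 7B to 13B Models: A 7B model at 16-bit precision (about 14GB of weights) runs on a single modern GPU with 16GB–24GB of VRAM; a 13B model (about 26GB at 16-bit) generally needs 8-bit or 4-bit quantization to fit on a 24GB card. Ideal for local development.
- 30B to 70B Models: Usually require multi-GPU setups (e.g., 2x A100 80GB for a 70B model at 16-bit) or quantized versions to fit on consumer hardware.
- 100B+ Models: These are “data-center grade” and require distributed clusters or specialized AI cloud providers.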
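These tiers follow from simple arithmetic: weight memory is roughly parameter count times bytes per parameter. The helper below estimates that figure and adds a flat overhead factor for the KV cache, activations, and runtime buffers; the 20% overhead is a rough assumption, and real usage depends on context length, batch size, and the serving engine.

```python
# Approximate storage cost of one weight at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_vram_gb(params_billions: float, precision: str = "fp16", overhead: float = 0.2) -> float:
    """Rough inference VRAM estimate in GB (1 GB = 10^9 bytes).

    Weights: billions of parameters x bytes per parameter = GB of weights.
    `overhead` is an assumed flat multiplier for the KV cache, activations,
    and framework buffers; real usage varies with context length and batch size.
    """
    weights_gb = params_billions * BYTES_PER_PARAM[precision]
    return weights_gb * (1 + overhead)


for size in (7, 13, 70, 405):
    estimates = ", ".join(f"{p}: ~{estimate_vram_gb(size, p):.0f} GB" for p in BYTES_PER_PARAM)
    print(f"{size}B -> {estimates}")
```

By this estimate a 13B model needs roughly 31GB at 16-bit but about 16GB at 8-bit, so running one on a single 24GB card generally means using a quantized build.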

The Power of Quantization

In 2025, you no longer need full 16-bit precision for every model. Quantization methods and formats such as GGUF (the llama.cpp format), AWQ, and EXL2 compress a model's weights from 16-bit to 8-bit or 4-bit, cutting weight memory by 2x to 4x. The accuracy cost is usually small, and smaller at 8-bit than at 4-bit, which makes models like Llama 3 70B (about 35GB of weights at 4-bit versus about 140GB at 16-bit) accessible to a much wider audience.
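The core idea behind weight quantization can be shown with the simplest scheme, symmetric per-tensor rounding: pick a scale so the largest weight maps to the top of the integer range, round every weight to an integer, and multiply back by the scale at inference time. GGUF, AWQ, and EXL2 use more elaborate variants (per-block scales, activation-aware scaling, mixed bit widths), so treat this as an illustration of the principle, not of any of those formats.

```python
def quantize_symmetric(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Round floats to signed integers sharing one scale factor (per-tensor, symmetric)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    largest = max(abs(w) for w in weights)
    if largest == 0:  # all-zero tensor: nothing to scale
        return [0] * len(weights), 1.0
    scale = largest / qmax  # the largest-magnitude weight maps to +/-qmax
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; each is within scale / 2 of the original."""
    return [v * scale for v in q]


weights = [0.42, -1.37, 0.05, 0.88, -0.61, 1.12]  # made-up weight values
for bits in (8, 4):
    q, scale = quantize_symmetric(weights, bits)
    max_err = max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))
    print(f"{bits}-bit ints: {q}, max abs error: {max_err:.4f}")
```

The toy run shows the trade-off: the 4-bit step size is about 18 times coarser than the 8-bit one (127 / 7), so rounding errors grow accordingly, which is why production formats add finer-grained scales.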

Conclusion: Navigating the Open-Source Future

The “Top 10” of 2025 illustrates a clear trend: specialization is winning over generalism. Instead of one “master model,” we now have a toolbox where Gemma 2 handles the edge, Command R+ handles the enterprise data, and Qwen manages the global translations. By leveraging these open-source assets, businesses can build AI systems that are more secure, more transparent, and significantly cheaper than proprietary alternatives.

As the ecosystem continues to evolve, the integration of managed open-source data layers will remain the critical differentiator for successful AI strategies. The freedom to switch models, the ability to control data, and the power to innovate without permission are the true hallmarks of the open-source era.