Is Kimi k2.5 Better Than Claude Opus 4.5?

Kimi k2.5, released by Moonshot AI in January 2026, is an open-source agentic model that outperforms Claude Opus 4.5 and GPT-5.2 on several autonomous-task benchmarks, including Humanity’s Last Exam (HLE), BrowseComp, and VideoMMMU. It uses a 1.04-trillion-parameter Mixture-of-Experts (MoE) architecture with 32 billion parameters active per token. Its main advantages are its “Agent Swarm” technology, which coordinates up to 100 sub-agents for parallel task execution, and its native multimodality, which lets it “see” visual inputs and write code from them. Claude Opus 4.5 remains a top contender for pair programming and holds a narrow lead on SWE-bench Verified, but Kimi k2.5 has largely closed the gap between open-source and closed-source AI in complex, long-horizon agentic reasoning.

Introduction

The AI landscape of 2026 has been redefined by the release of Kimi k2.5, a model that challenges the traditional dominance of proprietary labs like OpenAI and Anthropic. By combining trillion-parameter scale with open-source accessibility, Kimi k2.5 provides developers with the first true “Autonomous Agent in a Box,” capable of native video reasoning and multi-agent orchestration.

The Architectural Breakthrough: 1.04T Mixture-of-Experts

Kimi k2.5 isn't just a larger version of its predecessor; it changes how the model spends compute. With a Mixture-of-Experts (MoE) design, only a small subset of the network runs for each token, so the model has the capacity of a trillion-parameter network while its per-token compute is closer to that of a 32-billion-parameter one.

- Massive Scale: 1.04 trillion total parameters with 32 billion activated per token.
- Expert Specialization: 384 specialized experts with a sophisticated routing mechanism that selects 8 experts per token.
- Efficiency: Features Multi-head Latent Attention (MLA) and native INT4 quantization, providing a 2x generation speedup on consumer-grade hardware.
- Training Depth: Pre-trained on a massive 15 trillion mixed visual and text tokens, making it “natively multimodal” rather than relying on text-vision adapters.
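To make the "8 of 384 experts per token" idea concrete, here is a minimal sketch of top-k expert routing. It assumes a common gating scheme (softmax over router scores, keep the top 8, renormalize their weights); the article does not say this is exactly how Kimi k2.5 routes, and the random logits are a stand-in for a learned router.

```python
import math
import random

NUM_EXPERTS = 384   # experts per MoE layer, as quoted above
TOP_K = 8           # experts selected per token, as quoted above


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts.

    Weights are the softmax probabilities of the chosen experts,
    renormalized so they sum to 1.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]


# Hypothetical router output for one token (assumption: random logits
# stand in for the scores a trained router would produce).
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
routing = route_token(logits)

print(len(routing), "experts used out of", NUM_EXPERTS)
print("share of experts active per token:", TOP_K / NUM_EXPERTS)
print("share of parameters active per token:", 32e9 / 1.04e12)
```

Only about 2% of the experts run for any given token. The active-parameter share is a little higher, roughly 3%, which is consistent with some parameters (such as attention layers) being used for every token.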

Agent Swarm: From Single Agents to Parallel Power

The most transformative feature of Kimi k2.5 is the Agent Swarm (currently in beta). Unlike traditional AI that solves tasks sequentially (Step A → Step B), Kimi k2.5 acts as an Orchestrator that dynamically spawns specialized sub-agents.

- Task Decomposition: The model breaks a high-level goal (e.g., “Build a full-stack marketing app”) into parallelizable sub-tasks.
- Specialized Roles: It instantiates up to 100 distinct agents, such as an “AI Researcher,” “Frontend Specialist,” and “QA Fact-Checker.”
- Autonomous Coordination: Agents collaborate through a shared context, managing up to 1,500 sequential tool calls without human intervention.
- Speed Performance: This parallel architecture results in a 4.5x faster task completion rate compared to single-agent systems like those used in older versions of Claude or GPT.
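The orchestrator pattern described above can be sketched with Python's `asyncio`. This is an illustration of the general idea (fan sub-tasks out to concurrent workers under a cap), not Moonshot AI's implementation: `run_sub_agent`, `orchestrate`, and the hand-written task split are all hypothetical.

```python
import asyncio

MAX_SUB_AGENTS = 100  # upper bound on concurrent sub-agents quoted above


async def run_sub_agent(role, task):
    """Hypothetical sub-agent: a real one would call a model and tools here."""
    await asyncio.sleep(0.01)  # stands in for model and tool latency
    return {"role": role, "task": task, "status": "done"}


async def orchestrate(subtasks, max_agents=MAX_SUB_AGENTS):
    """Run (role, task) pairs concurrently, never more than max_agents at once."""
    limiter = asyncio.Semaphore(max_agents)

    async def guarded(role, task):
        async with limiter:
            return await run_sub_agent(role, task)

    # gather preserves input order, so results line up with subtasks
    return await asyncio.gather(*(guarded(r, t) for r, t in subtasks))


# Hand-written decomposition of an example goal (assumption: the real
# orchestrator is described as producing this split on its own).
subtasks = [
    ("AI Researcher", "survey competing marketing apps"),
    ("Frontend Specialist", "build the landing page"),
    ("QA Fact-Checker", "verify claims in the copy"),
]
results = asyncio.run(orchestrate(subtasks))
for result in results:
    print(result["role"], "->", result["status"])
```

Because the three sub-agents wait concurrently, the total wall-clock time is close to that of the slowest one rather than the sum of all three, which is where the claimed speedup over a sequential agent would come from.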

Kimi Code: The Evolution of “Coding with Vision”

While many models can generate code from text prompts, Kimi k2.5 is specifically tuned for Visual Coding. This allows developers to turn aesthetic designs directly into functional websites.

- UI-to-Code Mastery: Users can upload a screenshot or a screen recording of a UI workflow, and Kimi k2.5 interprets the spatial logic, color theory, and interaction patterns to produce clean React or Tailwind code.
- Video-to-Fix: Feed the model a Loom video of a software bug; Kimi k2.5 “watches” the error, identifies the broken logic in the codebase, and suggests a fix.
- Expressive Motion: It has a unique ability to generate complex animations and CSS transitions that mimic human “taste” and modern design standards.
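As a rough illustration of the screenshot-to-code workflow, the sketch below builds a request body that pairs an image with a text instruction. It assumes an OpenAI-style chat message schema and uses a placeholder model identifier; neither is confirmed by this article, so check the provider's documentation before relying on either. Nothing is sent over the network.

```python
import base64
import json

MODEL_ID = "kimi-k2.5-placeholder"  # hypothetical identifier, not a confirmed model name


def build_ui_to_code_request(image_bytes, instruction):
    """Build a chat request body that pairs a screenshot with a text instruction.

    Assumes an OpenAI-style message schema. This function only builds the
    JSON body; it does not call any API.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                    {"type": "text", "text": instruction},
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real PNG screenshot.
fake_screenshot = b"\x89PNG placeholder"
request_body = build_ui_to_code_request(
    fake_screenshot,
    "Recreate this screen as a React component styled with Tailwind.",
)
print(json.dumps(request_body, indent=2)[:200])
```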

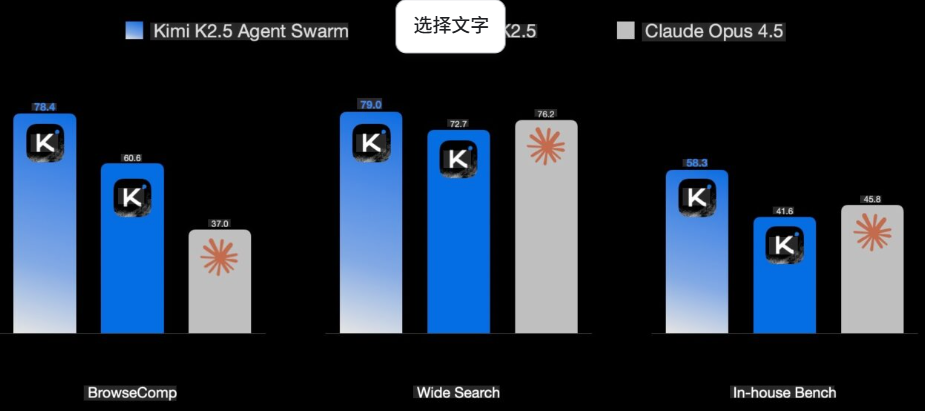

Benchmark Comparison: Kimi k2.5 vs. Claude Opus 4.5 & GPT-5.2

To understand why Kimi k2.5 is causing such a stir in the AI community, we must look at the hard data. In 2026, “agentic” benchmarks—which measure a model's ability to use tools and browse the web—have become more relevant than simple text-prediction scores.

| Benchmark | Kimi k2.5 (Swarm Mode) | Claude Opus 4.5 | GPT-5.2 (High) |
| --- | --- | --- | --- |
| Humanity's Last Exam (HLE) | 50.2% | 32.0% | 41.7% |
| BrowseComp (Web Navigation) | 78.4% | 24.1% | 54.9% |
| VideoMMMU (Video Reasoning) | 86.6% | 82.1% | 85.3% |
| SWE-bench Verified (Coding) | 76.8% | 77.2% | 74.9% |
| MMMU Pro (Multimodal) | 78.5% | 75.8% | 76.9% |
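To read the table at a glance, the short script below computes Kimi k2.5's margin over the stronger of the two competitors on each benchmark. The scores are copied from the table above; the script adds no new data.

```python
# Scores from the table above: (Kimi k2.5 Swarm, Claude Opus 4.5, GPT-5.2 High)
scores = {
    "Humanity's Last Exam (HLE)": (50.2, 32.0, 41.7),
    "BrowseComp": (78.4, 24.1, 54.9),
    "VideoMMMU": (86.6, 82.1, 85.3),
    "SWE-bench Verified": (76.8, 77.2, 74.9),
    "MMMU Pro": (78.5, 75.8, 76.9),
}

# Margin in percentage points over the best competing score;
# rounding removes floating-point noise from the subtraction.
margins = {
    name: round(kimi - max(claude, gpt), 1)
    for name, (kimi, claude, gpt) in scores.items()
}

kimi_leads = [name for name, margin in margins.items() if margin > 0]
for name, margin in margins.items():
    print(f"{name}: {margin:+.1f} points vs. best competitor")
print(f"Kimi k2.5 leads on {len(kimi_leads)} of {len(scores)} benchmarks")
```

On these figures Kimi k2.5 leads on four of the five benchmarks, with the largest gap on BrowseComp, and trails Claude Opus 4.5 by 0.4 points on SWE-bench Verified.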

Why Open Source Matters: The EEAT Perspective

The release of Kimi k2.5 highlights a significant shift in Experience, Expertise, Authoritativeness, and Trustworthiness (EEAT) within the AI industry. Moonshot AI has prioritized transparency by releasing model weights on Hugging Face.

- Local Control: Enterprises can host Kimi k2.5 on private infrastructure, ensuring data privacy that proprietary APIs cannot offer.
- Customization: Developers can fine-tune the 1T MoE model for specific industrial niches, from biotech to financial auditing.
- Verification: By open-sourcing the “Thinking Logs,” Moonshot AI allows the community to verify the model’s reasoning chain, reducing the “black box” problem associated with Claude and OpenAI.

Conclusion: A Foundational Shift in Productivity

Kimi k2.5 is not just another LLM; it is a signal that the era of the “Lone AI” is ending, replaced by the era of the “Agent Swarm.” While Claude Opus 4.5 remains an exceptional choice for human-in-the-loop pair programming, Kimi k2.5 is the clear winner for autonomous, visual, and multi-step workflows. Its ability to beat proprietary models while remaining open-source ensures that the next wave of AI innovation will be driven by the community, not just a few tech giants.

Frequently Asked Questions (FAQ)

Q: Can I run Kimi k2.5 locally?

A: Yes, the weights are available on Hugging Face. Because of its 1T MoE architecture it needs a large amount of memory: even with native INT4 quantization, the weights alone come to roughly 520 GB (1.04 trillion parameters at 4 bits each). In practice that means multi-GPU or distributed setups, served with engines like vLLM or SGLang.
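A quick back-of-the-envelope calculation shows what the parameter counts imply for memory. It is a simplification: it assumes every parameter is stored at the stated precision and ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
TOTAL_PARAMS = 1.04e12   # total parameters quoted in this article
ACTIVE_PARAMS = 32e9     # parameters activated per token


def weight_gigabytes(num_params, bits_per_param):
    """Storage for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


int4_total = weight_gigabytes(TOTAL_PARAMS, 4)
bf16_total = weight_gigabytes(TOTAL_PARAMS, 16)
int4_active = weight_gigabytes(ACTIVE_PARAMS, 4)

print(f"All weights at INT4: {int4_total:.0f} GB")
print(f"All weights at 16-bit: {bf16_total:.0f} GB")
print(f"Weights touched per token at INT4: {int4_active:.0f} GB")
```

INT4 cuts the footprint to a quarter of the 16-bit size, but all experts still have to be resident (or quickly reachable) because the router can pick any of them for the next token.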

Q: What is the “Agent Swarm” mode?

A: It is a feature where the model acts as an orchestrator, spawning up to 100 sub-agents to solve complex tasks in parallel. This makes it 4.5x faster than single-agent models for research and coding.

Q: How does Kimi Code differ from Cursor or Claude Code?

A: Kimi Code is specifically optimized for Vision-to-UI. It doesn't just read your text; it “sees” your screenshots and videos and generates code that aims to match the visual intent and aesthetic of the design.

Q: Is Kimi k2.5 free to use?

A: You can use it for free in chat mode on Kimi.com. For developers, the API is available via Moonshot AI and Together AI with a cost-efficient pricing model ($0.60/M tokens).
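For budgeting, the quoted rate translates into cost as follows. This sketch assumes a single flat rate of $0.60 per million tokens, as stated above; actual pricing may differ between input and output tokens or between providers.

```python
PRICE_PER_MILLION_TOKENS = 0.60  # USD, the single rate quoted above


def estimate_cost(tokens, price_per_million=PRICE_PER_MILLION_TOKENS):
    """Estimated cost in USD for a token count at a flat per-million rate."""
    return tokens / 1_000_000 * price_per_million


for tokens in (50_000, 1_000_000, 25_000_000):
    print(f"{tokens:>12,} tokens -> ${estimate_cost(tokens):.2f}")
```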