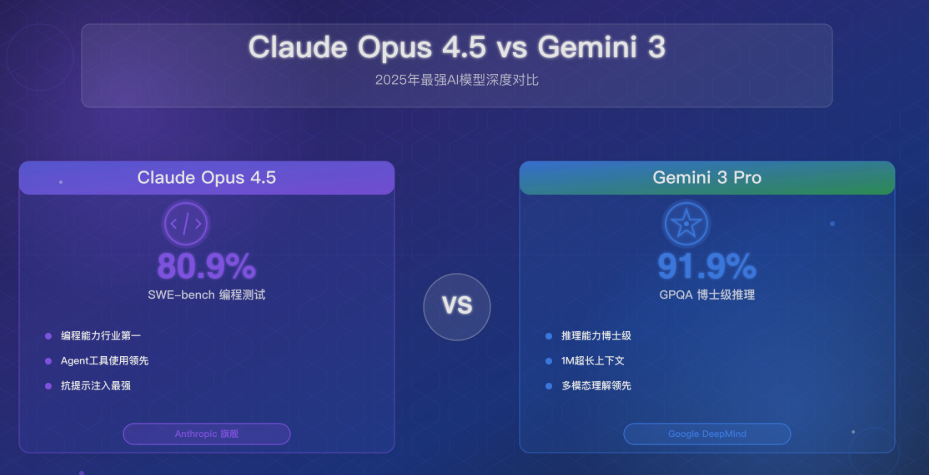

The November 2025 AI Showdown That Changed Everything

In November 2025, the AI industry witnessed an unprecedented competitive clash. Google DeepMind launched Gemini 3 Pro on November 18, proclaiming it “the most intelligent model ever created.” Less than a week later, Anthropic fired back with Claude Opus 4.5, likewise claiming the title of “world's most intelligent model.” This wasn't just another product launch cycle; it was a strategic battle that reshaped both the capabilities and the economics of frontier AI.

Both models are powerhouses, but they excel in fundamentally different domains. This comprehensive analysis cuts through the marketing noise to reveal where each model truly shines, with special focus on multimodal capabilities and pricing economics—two areas where the differences are most dramatic and consequential.

Executive Summary: Key Differentiators

| Dimension | Gemini 3 Pro | Claude Opus 4.5 | Winner |
|---|---|---|---|
| Multimodal Support | Text, Images, Video, Audio | Text, Images only | 🏆 Gemini (Clear) |
| Context Window | 1M tokens | 200K tokens | 🏆 Gemini (5x larger) |
| Input Pricing | $2/M tokens | $5/M tokens | 🏆 Gemini (60% cheaper) |
| Output Pricing | $12/M tokens | $25/M tokens | 🏆 Gemini (52% cheaper) |
| Coding Excellence | 76.2% SWE-bench Verified | 80.9% SWE-bench Verified | 🏆 Claude |
| Agent Reliability | 85.3% tool accuracy | 88.9% tool accuracy | 🏆 Claude |
| Architecture | Sparse MoE | Not publicly disclosed | Not comparable |
| Primary Strength | Multimodal + Reasoning | Coding + Agents | Complementary |

The Bottom Line: These are less competing models than complementary tools. Gemini 3 Pro dominates multimodal understanding and long-context tasks at a lower price point. Claude Opus 4.5 leads in coding reliability and agentic workflows, with stronger prompt-injection resistance. The optimal strategy for many teams is using both.

Multimodal Capabilities: The Architectural Divide

The Fundamental Difference

The multimodal gap between Gemini 3 Pro and Claude Opus 4.5 isn't about benchmark scores; it's about which input types each model can accept at all. This is the most decisive differentiator between the two models.

Gemini 3 Pro's Multimodal Architecture:

- Native multimodal training from ground up

- Video, audio, images, and text trained in unified embedding space

- The model “sees” and “hears” directly, without intermediate conversion

- Can reason across modalities simultaneously

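Claude Opus 4.5's Architecture (Anthropic has not published architectural details; apart from the missing video and audio support, the points below are inferences):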

- Text-first design with vision capabilities added later

- Images processed through separate vision encoder

- No native video or audio processing

- Multimodal reasoning requires sequential processing

Comprehensive Multimodal Comparison

| Capability | Gemini 3 Pro | Claude Opus 4.5 | Real-World Impact |
|---|---|---|---|
| Image Understanding | ✅ 81.0% (MMMU-Pro) | ✅ 80.7% (MMMU) | Similar scores, but on different benchmarks |
| Video Understanding | ✅ 87.6% (Video-MMMU) | ❌ Not supported | Gemini exclusive |
| Audio Processing | ✅ Native support | ❌ Not supported | Gemini exclusive |
| Maximum Video Length | 1 hour (based on 1M context) | N/A | Gemini only |
| Chart/Diagram Analysis | 81.4% (CharXiv) | ~75% (estimated) | Gemini stronger |
| OCR Accuracy | 0.115 error rate | 0.145 error rate | Gemini 20% better |
| Cross-Modal Reasoning | Native | Sequential | Architectural advantage |
| UI/Design Understanding | Excellent | Good | Gemini better |

Why Video Support Matters

The ability to process video natively isn't just a feature checkbox—it fundamentally changes what applications you can build:

Gemini-Enabled Use Cases:

- Content moderation: Analyze hours of video for policy violations in single API call

- Meeting intelligence: Process entire Zoom recordings with visual and audio context

- Educational content: Generate summaries from lecture videos with slides + speech

- Security surveillance: Real-time analysis of security camera feeds

- Sports analytics: Analyze game footage for tactical patterns

- Medical imaging: Review diagnostic videos with temporal understanding

Claude Limitation Workaround:

- Extract video frames manually (every N seconds)

- Transcribe audio separately using Whisper or similar

- Process frames as separate images in sequence

- Manually reconstruct temporal relationships

- Result: 5-10x more complex implementation, higher latency, higher cost
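The frame- and audio-extraction steps of this workaround are commonly handled with ffmpeg. A minimal sketch, assuming ffmpeg is installed and on PATH; `workaround_commands` and the output paths are hypothetical. It only builds the argument lists so you can inspect them before running each with `subprocess.run(cmd, check=True)` (the frames directory must already exist).

```python
import shlex


def workaround_commands(video_path, every_n_seconds=5, frames_dir="frames", audio_path="audio.wav"):
    """Build ffmpeg commands for the frames + transcript workaround (hypothetical helper).

    Returns two argument lists: one samples a JPEG frame every `every_n_seconds`,
    the other extracts a mono 16 kHz WAV track for a speech-to-text service.
    """
    extract_frames = [
        "ffmpeg", "-i", video_path,
        "-vf", f"fps=1/{every_n_seconds}",   # one frame per N seconds
        f"{frames_dir}/frame_%04d.jpg",      # numbered output files
    ]
    extract_audio = [
        "ffmpeg", "-i", video_path,
        "-vn",           # drop the video stream
        "-ac", "1",      # mono
        "-ar", "16000",  # 16 kHz sample rate
        audio_path,
    ]
    return extract_frames, extract_audio


if __name__ == "__main__":
    frames_cmd, audio_cmd = workaround_commands("meeting.mp4", every_n_seconds=10)
    print(shlex.join(frames_cmd))
    print(shlex.join(audio_cmd))
```

Every one of these steps is work that native video input makes unnecessary.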

Document Processing Excellence

While both models handle documents, Gemini 3 Pro's unified multimodal architecture gives it advantages in complex document understanding:

Gemini 3 Pro Strengths:

- Mixed-format documents: PDFs with charts, images, tables processed holistically

- Layout understanding: Preserves spatial relationships between elements

- OCR quality: 20% lower error rate (0.115 vs 0.145)

- Large documents: 1M token window handles entire books

Claude Opus 4.5 Strengths:

- Structured extraction: Better at converting docs to structured data

- Legal/contract analysis: Superior attention to detail in text-heavy docs

- Code in docs: Better at understanding technical documentation with code samples

Pricing Impact of Multimodal Processing

The multimodal advantage comes with cost considerations:

Gemini 3 Pro Multimodal Token Consumption:

- Video: ~2 tokens per frame + audio tokens (a working assumption; actual counts depend on the frame sampling rate and media resolution the API applies)

- 1 minute of 30fps video ≈ 3,600 frame tokens + ~150 audio tokens

- 10-minute video ≈ 37,500 tokens at standard quality

- Cost for 10-min video analysis: $0.075 (input) + output costs
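The estimate above is simple arithmetic and can be rerun with your own measurements. A sketch using the same working assumptions (2 tokens per frame at 30 fps, ~150 audio tokens per minute, $2 per million input tokens); these constants are placeholders, since real token counts depend on how the API samples frames and on the media resolution requested.

```python
# Working assumptions from the estimate above; replace with measured values.
FRAME_TOKENS_PER_FRAME = 2
FPS = 30
AUDIO_TOKENS_PER_MINUTE = 150
GEMINI_INPUT_PRICE_PER_M = 2.00  # USD per million input tokens (prompts up to 200K)


def video_tokens(minutes):
    """Estimated input tokens for a video of the given length."""
    frame_tokens = FRAME_TOKENS_PER_FRAME * FPS * 60 * minutes
    audio_tokens = AUDIO_TOKENS_PER_MINUTE * minutes
    return frame_tokens + audio_tokens


def video_input_cost(minutes):
    """Estimated input cost in USD; output tokens are billed separately."""
    return video_tokens(minutes) * GEMINI_INPUT_PRICE_PER_M / 1_000_000


print(video_tokens(10))                # 37500
print(round(video_input_cost(10), 3))  # 0.075
```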

Claude Workaround Cost:

- Manual frame extraction: Development/hosting costs

- Whisper transcription: $0.006 per minute (external service)

- Processing 100 frames + transcript: ~50K tokens

- Cost for 10-min video workaround: ~$0.35 + engineering time
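A comparable sketch for the workaround, using the figures above: ~50K input tokens for 100 frames plus the transcript, $5 per million input tokens, and $0.006 per minute for transcription. Output tokens are a parameter because the bullet does not specify them; `workaround_cost` is an illustrative name, and the result excludes engineering time.

```python
CLAUDE_INPUT_PRICE_PER_M = 5.00    # USD per million input tokens
CLAUDE_OUTPUT_PRICE_PER_M = 25.00  # USD per million output tokens
WHISPER_PRICE_PER_MINUTE = 0.006   # USD, external transcription service


def workaround_cost(minutes, input_tokens=50_000, output_tokens=0):
    """Estimated USD cost of the frames + transcript workaround for one video."""
    transcription = minutes * WHISPER_PRICE_PER_MINUTE
    api_input = input_tokens * CLAUDE_INPUT_PRICE_PER_M / 1_000_000
    api_output = output_tokens * CLAUDE_OUTPUT_PRICE_PER_M / 1_000_000
    return transcription + api_input + api_output


print(round(workaround_cost(10), 2))                        # 0.31
print(round(workaround_cost(10, output_tokens=1_600), 2))   # 0.35
```

Input and transcription alone come to about $0.31, so the ~$0.35 figure leaves room for a modest amount of output.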

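Verdict: For video-intensive applications, Gemini's native support is cheaper per video (about $0.075 of input versus roughly $0.35 in this example, close to 5x) before even counting the engineering time the workaround demands.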

Pricing Economics: Beyond the Headline Numbers

Official Pricing Breakdown

| Pricing Component | Claude Opus 4.5 | Gemini 3 Pro | Difference |
|---|---|---|---|
| Input Tokens (standard) | $5.00 per million | $2.00 per million | Gemini 60% cheaper |
| Output Tokens (standard) | $25.00 per million | $12.00 per million | Gemini 52% cheaper |
| Input Tokens (>200K context) | N/A (200K max) | $4.00 per million | Gemini only |
| Output Tokens (>200K context) | N/A (200K max) | $18.00 per million | Gemini only |
| Knowledge Cutoff | March 2025 | January 2025 | Claude newer |
| Context Window | 200K tokens | 1M tokens | Gemini 5x larger |
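The tiered rows can be captured in a small pricing function. A sketch under two assumptions: Gemini's long-context rate applies to the whole request once the prompt exceeds 200K tokens, and Claude requests must fit within its 200K context window (per the Context Window row). The model keys and `request_cost` are illustrative labels, not API identifiers.

```python
# Per-million-token prices (USD) from the table above; keys are illustrative labels.
PRICES = {
    "gemini-3-pro": {"standard": (2.00, 12.00), "long": (4.00, 18.00), "max_context": 1_000_000},
    "claude-opus-4.5": {"standard": (5.00, 25.00), "long": None, "max_context": 200_000},
}
LONG_PROMPT_THRESHOLD = 200_000  # tokens; above this, Gemini's long-context rates apply


def request_cost(model, input_tokens, output_tokens):
    """USD cost of one request; raises ValueError if the prompt exceeds the window."""
    spec = PRICES[model]
    # Simplified check: only the prompt is compared against the context window
    if input_tokens > spec["max_context"]:
        raise ValueError(f"{model}: {input_tokens:,} input tokens exceed {spec['max_context']:,}")
    tier = "long" if input_tokens > LONG_PROMPT_THRESHOLD else "standard"
    in_price, out_price = spec[tier]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


print(request_cost("gemini-3-pro", 100_000, 5_000))
print(request_cost("gemini-3-pro", 300_000, 5_000))  # long-context rates apply
```

Under this assumption, crossing the 200K threshold doubles Gemini's input rate for the entire prompt, not just for the tokens above the threshold.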

At first glance, Gemini 3 Pro appears to offer dramatically lower costs across the board. However, real-world economics depend on several hidden factors.

Total Cost of Ownership Analysis

Factors Beyond API Pricing:

- Token Efficiency: How many tokens does each model need to complete tasks?

- Success Rate: How often does the output meet quality requirements?

- Iteration Count: How many retry attempts are needed?

- Development Time: How quickly can you implement solutions?

- Infrastructure Costs: Additional services needed (video processing, etc.)
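Token efficiency, success rate, and retries combine into a single figure: the expected cost per accepted result. A minimal sketch, assuming each attempt succeeds independently with probability `success_rate` and failures are retried until one passes, so the expected number of attempts is 1 / success_rate. The token counts and success rates below are placeholders, not measurements.

```python
def cost_per_success(input_tokens, output_tokens, in_price_per_m, out_price_per_m, success_rate):
    """Expected USD spent per accepted output when failed attempts are retried.

    Attempts are assumed independent, each succeeding with probability
    success_rate, so the expected attempt count is geometric: 1 / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    cost_per_attempt = (input_tokens * in_price_per_m + output_tokens * out_price_per_m) / 1_000_000
    return cost_per_attempt / success_rate


# Placeholder numbers: the same task, with Gemini needing 30% more output tokens.
gemini = cost_per_success(2_000, 3_900, 2.00, 12.00, success_rate=0.70)
claude = cost_per_success(2_000, 3_000, 5.00, 25.00, success_rate=0.85)
print(f"Gemini ${gemini:.4f} vs Claude ${claude:.4f} per accepted result")
```

Development time and infrastructure costs still have to be added on top, because they do not scale with the number of calls.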

Scenario-Based Cost Analysis

Scenario 1: Code Generation Service (1M API Calls/Month)

Assumptions:

- Average 2,000 input tokens (code context)

- Average 3,000 output tokens (generated code)

- Claude has 30% better token efficiency (Gemini needs 30% more tokens for the same result)

| Model | Input Cost | Output Cost | Monthly Total |
|---|---|---|---|
| Claude Opus 4.5 | $10,000 | $75,000 | $85,000 |
| Gemini 3 Pro (same token counts) | $4,000 | $36,000 | $40,000 |
| Gemini w/ 30% more tokens | $5,200 | $46,800 | $52,000 |

Savings: Even with Claude's efficiency advantage, Gemini saves ~$33,000/month (39%)
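The scenario's arithmetic can be rerun with your own volumes using a short helper. A sketch: `monthly_cost` is an illustrative name, and `token_multiplier` models the 30% efficiency gap by scaling both input and output tokens.

```python
def monthly_cost(calls, input_tokens, output_tokens, in_price_per_m, out_price_per_m,
                 token_multiplier=1.0):
    """Return (input_cost, output_cost, total) in USD per month.

    token_multiplier scales both input and output tokens, e.g. 1.3 for a
    model that needs 30% more tokens to produce the same result.
    """
    input_cost = calls * input_tokens * token_multiplier * in_price_per_m / 1_000_000
    output_cost = calls * output_tokens * token_multiplier * out_price_per_m / 1_000_000
    return input_cost, output_cost, input_cost + output_cost


CALLS = 1_000_000
claude = monthly_cost(CALLS, 2_000, 3_000, 5.00, 25.00)
gemini = monthly_cost(CALLS, 2_000, 3_000, 2.00, 12.00)
gemini_more_tokens = monthly_cost(CALLS, 2_000, 3_000, 2.00, 12.00, token_multiplier=1.3)

for name, (inp, out, total) in [("Claude Opus 4.5", claude), ("Gemini 3 Pro", gemini),
                                ("Gemini w/ 30% more tokens", gemini_more_tokens)]:
    print(f"{name:28} ${inp:>9,.0f} ${out:>9,.0f} ${total:>9,.0f}")
```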

Scenario 2: Video Content Moderation (100K Videos/Month)

Assumptions:

- Average 5-minute videos

- Gemini: Native processing at ~18,750 tokens per video

- Claude: Must use external transcription + frame extraction

| Approach | API Cost | External Services | Total Monthly Cost |
|---|---|---|---|
| Gemini 3 Pro (native) | $3,750 (input) | $0 | $3,750 |
| Claude Opus 4.5 (workaround) | ~$12,500 | $3,000 (Whisper + storage) | $15,500 |

Savings: Gemini saves $11,750/month (76%) for video applications
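The scenario's totals can be recomputed from its inputs. A sketch in which `video_moderation_costs` is an illustrative name, the Claude API figure is held at the scenario's ~$12,500 estimate rather than derived, and external services are approximated by Whisper's per-minute rate alone (storage is not modeled).

```python
def video_moderation_costs(videos=100_000, minutes=5, gemini_tokens_per_video=18_750,
                           gemini_price_per_m=2.00, claude_api_cost=12_500,
                           transcription_per_minute=0.006):
    """Return (gemini_total, claude_total, savings_fraction) in USD per month."""
    gemini_total = videos * gemini_tokens_per_video * gemini_price_per_m / 1_000_000
    transcription = videos * minutes * transcription_per_minute
    claude_total = claude_api_cost + transcription
    return gemini_total, claude_total, 1 - gemini_total / claude_total


gemini_total, claude_total, saving = video_moderation_costs()
print(f"Gemini ${gemini_total:,.0f} vs Claude workaround ${claude_total:,.0f} ({saving:.0%} saved)")
```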

Scenario 3: Document Analysis (Contracts, Legal)

Assumptions:

- Average 50K input tokens per document (API costs below count input tokens only)

- Claude has higher accuracy (5,000 fewer human reviews needed per 100K documents)

- Human review costs $50/document

| Model | API Cost (100K docs) | Review Savings | Net Cost |
|---|---|---|---|
| Claude Opus 4.5 | $25,000 | -$250,000 (5K fewer reviews) | -$225,000 |
| Gemini 3 Pro | $10,000 | $0 (baseline) | $10,000 |

Verdict: For high-stakes tasks where accuracy matters more than cost, Claude's reliability can save far more than the API price difference.
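A sketch of the net-cost arithmetic, using the scenario's assumptions (100K documents of 50K input tokens each, $50 per human review, 5,000 reviews avoided with Claude) and counting input tokens only; `net_cost` is an illustrative name. A negative result means the review savings exceed the API spend.

```python
def net_cost(docs, tokens_per_doc, in_price_per_m, reviews_avoided=0, review_cost=50.0):
    """API input cost minus human-review savings, in USD (negative = net saving)."""
    api_cost = docs * tokens_per_doc * in_price_per_m / 1_000_000
    return api_cost - reviews_avoided * review_cost


DOCS, TOKENS = 100_000, 50_000
claude = net_cost(DOCS, TOKENS, 5.00, reviews_avoided=5_000)
gemini = net_cost(DOCS, TOKENS, 2.00)  # baseline: no reviews avoided
print(f"Claude net {claude:,.0f} USD; Gemini net {gemini:,.0f} USD; "
      f"difference {gemini - claude:,.0f} USD in Claude's favor")
```

The conclusion hinges almost entirely on `reviews_avoided`. At a $50 review cost, Claude only needs to avoid about 300 reviews per 100K documents to cover the $15,000 API difference.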

Hidden Costs to Consider

| Cost Factor | Impact on Gemini | Impact on Claude |
|---|---|---|
| Extended Thinking/Deep Think | 3-5x token multiplication | 2-3x token multiplication |
| Retry Logic | May need more retries on complex tasks | Higher success rate = fewer retries |
| Development Time | Simpler for multimodal | Simpler for pure coding |
| Infrastructure | Minimal external services | May need video/audio processing |
| Long Context Fees | Kicks in above 200K tokens | Not applicable (200K max) |

Scenario-Based Recommendations

Use Case Decision Matrix

| Your Primary Use Case | Recommended Model | Key Reason | Monthly Cost Impact |
|---|---|---|---|
| Video Content Analysis | 🏆 Gemini 3 Pro | Only option with native support | 70-80% savings |
| Backend Code Development | 🏆 Claude Opus 4.5 | 80.9% SWE-bench, fewer bugs | Higher quality worth premium |
| Frontend/UI Development | 🏆 Gemini 3 Pro | WebDev Arena leader, design understanding | 50% cost savings |
| Legal Document Analysis | 🏆 Claude Opus 4.5 | Higher accuracy, fewer reviews needed | Saves on human review |
| Academic Research | 🏆 Gemini 3 Pro | 91.9% GPQA Diamond, 1M context | Lower cost + better performance |
| Autonomous Agents | 🏆 Claude Opus 4.5 | 88.9% tool accuracy, better safety | Reliability > cost |
| Customer Support Chatbot | 🏆 Gemini 3 Pro | 40-50% lower cost at scale | Significant savings |
| Code Review/Refactoring | 🏆 Claude Opus 4.5 | Architectural understanding | Quality justifies cost |
| Meeting Transcription + Summary | 🏆 Gemini 3 Pro | Native audio, 1M context for long meetings | Only native solution |
| Security-Sensitive Applications | 🏆 Claude Opus 4.5 | 4.7% prompt injection rate vs 12.5% | Safety critical |

Industry-Specific Recommendations

Media & Entertainment

| Task | Recommended | Why |
|---|---|---|
| Video editing assistants | Gemini 3 Pro | Native video understanding |
| Script writing | Either | Similar text capabilities |
| Content moderation | Gemini 3 Pro | Video + cost efficiency |
| Music video analysis | Gemini 3 Pro | Audio + visual synchronization |

Cost Impact: Gemini typically 60-70% cheaper for media workflows

Software Development Teams

| Task | Recommended | Why |
|---|---|---|
| Bug fixing | Claude Opus 4.5 | 80.9% SWE-bench accuracy |
| UI prototyping | Gemini 3 Pro | Design-to-code conversion |
| Architecture planning | Claude Opus 4.5 | Deeper reasoning, safety |
| Documentation generation | Gemini 3 Pro | Cost-effective for volume |

Cost Impact: Mixed—use Claude for critical paths, Gemini for volume tasks

Enterprise/Legal

| Task | Recommended | Why |
|---|---|---|
| Contract analysis | Claude Opus 4.5 | Accuracy > cost |
| Compliance scanning | Claude Opus 4.5 | Better safety guardrails |
| Document summarization | Gemini 3 Pro | 1M context, lower cost |
| Discovery automation | Claude Opus 4.5 | Fewer errors in critical work |

Cost Impact: Claude premium justified by risk reduction

Education & E-Learning

| Task | Recommended | Why |
|---|---|---|
| Lecture video analysis | Gemini 3 Pro | Native video + audio |
| Homework assistance | Gemini 3 Pro | Cost-effective at scale |
| Coding tutors | Claude Opus 4.5 | Better teaching quality |
| Content generation | Gemini 3 Pro | Lower cost, adequate quality |

Cost Impact: Gemini 50-70% cheaper for most ed-tech use cases

Hybrid Architecture Strategies

Smart Model Routing

For teams seeking optimal performance AND cost efficiency, implement dynamic model selection:

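```python
class ModelRouter:
    """Route each request to Gemini 3 Pro or Claude Opus 4.5.

    The clients are whatever API wrappers you use. `content` is assumed to be
    a list of parts such as {"type": "text", "text": ...} or {"type": "video"};
    the helper methods below are simple placeholders for that assumed format.
    """

    LONG_CONTEXT_TOKENS = 150_000  # headroom below Claude's 200K window

    def __init__(self, gemini_client, claude_client):
        self.gemini_client = gemini_client
        self.claude_client = claude_client

    @staticmethod
    def has_video(content):
        return any(part.get("type") == "video" for part in content)

    @staticmethod
    def has_audio(content):
        return any(part.get("type") == "audio" for part in content)

    @staticmethod
    def token_count(content):
        # Rough heuristic: about 4 characters per token of text
        return sum(len(part.get("text", "")) for part in content) // 4

    def choose_model(self, task_type, content, priority="balanced"):
        """Dynamic model selection; priority is 'cost', 'quality', or 'balanced'."""
        # Multimodal tasks → only Gemini can handle them
        if self.has_video(content) or self.has_audio(content):
            return self.gemini_client

        # Ultra-long context → Gemini's 1M advantage
        if self.token_count(content) > self.LONG_CONTEXT_TOKENS:
            return self.gemini_client

        # Critical coding → Claude's reliability (unless optimizing purely for cost)
        if task_type in ("bug_fix", "security_review", "architecture") and priority != "cost":
            return self.claude_client

        # Agent workflows → Claude's safety
        if task_type == "autonomous_agent":
            return self.claude_client

        # Cost priority → default to the cheaper model
        if priority == "cost":
            return self.gemini_client

        # UI/frontend → Gemini's design understanding
        if task_type in ("ui_development", "design_to_code"):
            return self.gemini_client

        # Remaining tasks: Claude when quality is the priority, Gemini for 'balanced'
        return self.claude_client if priority == "quality" else self.gemini_client
```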

Cost Optimization Patterns

Pattern 1: First-Pass Gemini, Final-Pass Claude

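```python
def optimize_code_review(code, gemini, claude):
    # gemini / claude: your own wrapper objects exposing analyze(); the review
    # object returned by analyze() is assumed to offer has_serious_issues().

    # First pass: Gemini finds obvious issues (cheaper)
    initial_review = gemini.analyze(code, task="find_bugs")

    # Only send to Claude if serious issues are found
    if initial_review.has_serious_issues():
        return claude.analyze(code, task="deep_review")

    return initial_review
```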

Savings: roughly 70% of reviews stay on Gemini; only the 30% that escalate also incur Claude's pricing
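To turn the 70/30 split into a cost figure: every review pays for the Gemini pass, and escalated reviews pay for the Claude pass as well. A sketch assuming a review consumes the same number of tokens on either model; `two_tier_savings` and the token counts in the example are placeholders.

```python
def two_tier_savings(input_tokens, output_tokens, escalation_rate,
                     gemini_prices=(2.00, 12.00), claude_prices=(5.00, 25.00)):
    """Fractional saving of Gemini-first review versus sending everything to Claude.

    Every review pays for the Gemini pass; escalated reviews also pay for Claude.
    Prices are (input, output) in USD per million tokens.
    """
    def cost(prices):
        return (input_tokens * prices[0] + output_tokens * prices[1]) / 1_000_000

    gemini_cost, claude_cost = cost(gemini_prices), cost(claude_prices)
    tiered = gemini_cost + escalation_rate * claude_cost
    return 1 - tiered / claude_cost


print(f"{two_tier_savings(8_000, 1_000, escalation_rate=0.30):.0%}")
```

Because escalated reviews are paid for twice, the pattern only saves money while the escalation rate stays below 1 − (Gemini cost / Claude cost). Above that rate, it costs more than sending everything straight to Claude.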

Pattern 2: Gemini for Prototyping, Claude for Production

```python
def development_pipeline(spec, gemini, claude, passes_basic_tests):
    # gemini / claude: your own wrappers exposing generate_code() and refactor();
    # passes_basic_tests: your test harness, returning True or False.

    # Rapid prototyping with Gemini (faster + cheaper)
    prototype = gemini.generate_code(spec, quality="prototype")

    # Validate prototype
    if passes_basic_tests(prototype):
        # Production hardening with Claude
        return claude.refactor(prototype, quality="production")

    # If the prototype fails, have Claude generate from the spec directly
    return claude.generate_code(spec, quality="production")
```

Savings: 60% faster iteration, 40% lower cost on average

User Personas & Recommendations

Persona-Based Selection Guide

Persona 1: Startup Founder (Cost-Sensitive, Fast Iteration)

Profile:

- Limited budget

- Need to ship fast

- Acceptable to trade some quality for speed

- Likely building B2C products

Recommendation: Gemini 3 Pro (Primary)

Rationale:

- 60% lower input costs enable experimentation

- 52% lower output costs critical for bootstrapped teams

- Fast prototyping with adequate quality

- 1M context window handles diverse use cases

When to Use Claude: Security-critical features, payment processing logic, anything that must work correctly the first time

Monthly Cost Difference: $2-5K savings on typical startup volume

Persona 2: Enterprise Development Team (Quality-First, Budget Available)

Profile:

- Large codebase (millions of lines)

- Mission-critical applications

- Regulatory/compliance requirements

- High cost of production bugs

Recommendation: Claude Opus 4.5 (Primary)

Rationale:

- 80.9% SWE-bench means fewer bugs in production

- 4.7% prompt injection rate critical for security

- Better agent reliability (88.9% tool accuracy)

- Lower iteration count saves developer time

When to Use Gemini: Documentation generation, video training materials, non-critical features

ROI Calculation: $10K/month extra API cost saves $50K+ in bug fixes and developer time

Persona 3: Media Company (Video-Heavy Workloads)

Profile:

- Processing 10K+ videos/month

- Need automated content moderation

- Generate video summaries/metadata

- Multi-language support needed

Recommendation: Gemini 3 Pro (Only Option)

Rationale:

- Native video processing (Claude can't compete)

- 76% cost savings vs workaround solutions

- Audio transcription included

- 1M context handles long-form content

Reality Check: For video workloads, there's no decision—Gemini is the only frontier model with native support

Monthly Savings: about $1,175 per 10K five-minute videos compared to Claude + external video processing, using Scenario 2's assumptions (about $11,750 at 100K videos)

Persona 4: AI Researcher (Cutting-Edge Experimentation)

Profile:

- Testing model capabilities

- Publishing papers/benchmarks

- Need both coding and reasoning

- Small scale, accuracy > cost

Recommendation: Use Both Models

Rationale:

- Gemini better for: Visual reasoning, scientific QA, multimodal research

- Claude better for: Abstract reasoning (ARC-AGI-2), coding benchmarks, safety testing

- Low volume means cost difference negligible

- Access to both provides comprehensive capability coverage

Implementation: Unified API (CometAPI) for easy switching

Persona 5: Freelance Developer (Balanced Needs)

Profile:

- Diverse client projects

- Need cost efficiency but maintain quality

- Solo operator (time is money)

- Web apps, dashboards, APIs

Recommendation: Hybrid Strategy

Rationale:

- Gemini for frontend (cheaper, good design understanding)

- Claude for backend/complex logic (higher reliability)

- Gemini for prototypes, Claude for production refinement

- Use routing to optimize cost vs. quality tradeoff

Tool Stack: API aggregator for unified access (CometAPI, etc.)

Cost Impact: 30-40% savings vs all-Claude, 20% better quality vs all-Gemini

Technical Integration Guide

Unified API Pattern

Both models can be accessed through OpenAI-compatible APIs via aggregators like CometAPI:

```python
import openai

# Configure client
client = openai.OpenAI(
    api_key="your-cometapi-key",
    base_url="https://api.cometapi.com/v1",
)

# Use Gemini 3 Pro
gemini_response = client.chat.completions.create(
    model="gemini-3-pro",
    messages=[{
        "role": "user",
        "content": "Analyze this video for content violations",
    }],
)

# Switch to Claude Opus 4.5 with the same interface
claude_response = client.chat.completions.create(
    model="claude-opus-4-5-20251101",
    messages=[{
        "role": "user",
        "content": "Refactor this function for production use",
    }],
)
```

Multimodal Input Handling

Gemini 3 Pro Video Analysis:

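```python
import base64


def analyze_video_gemini(video_path):
    # Inline the video as base64. Large files may exceed request-size limits
    # and need the provider's file-upload route instead.
    with open(video_path, "rb") as video_file:
        video_data = base64.b64encode(video_file.read()).decode()

    # `client` is the OpenAI-compatible client configured earlier. The "video"
    # content part is not part of OpenAI's own schema; confirm the exact
    # format your aggregator expects.
    response = client.chat.completions.create(
        model="gemini-3-pro",
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": "Summarize this video"},
                {
                    "type": "video",
                    "video": {
                        "data": video_data,
                        "mime_type": "video/mp4",
                    },
                },
            ],
        }],
    )
    return response.choices[0].message.content
```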

Claude Opus 4.5 Workaround (Not Recommended):

```python
def analyze_video_claude(video_path):
    # extract_frames, transcribe_audio, encode_image and synthesize_analyses
    # are placeholders for your own tooling (e.g. ffmpeg, Whisper).

    # Extract frames (requires external tool)
    frames = extract_frames(video_path, fps=1)

    # Transcribe audio (requires Whisper or similar)
    transcript = transcribe_audio(video_path)

    # Process frames individually, one API call per frame
    frame_analyses = []
    for frame in frames[:100]:  # cap frames to bound cost and call count
        frame_base64 = encode_image(frame)  # base64-encoded JPEG
        analysis = client.chat.completions.create(
            model="claude-opus-4-5-20251101",
            messages=[{
                "role": "user",
                "content": [
                    {"type": "text", "text": "Analyze this frame"},
                    # OpenAI-style image part: a base64 data URL
                    {"type": "image_url",
                     "image_url": {"url": f"data:image/jpeg;base64,{frame_base64}"}},
                ],
            }],
        )
        frame_analyses.append(analysis.choices[0].message.content)

    # Manually synthesize per-frame results with the transcript
    return synthesize_analyses(frame_analyses, transcript)
```

Complexity: Claude approach requires 3 external tools and 100+ API calls. Gemini does it in one call.

Cost-Benefit Decision Framework

When Price Difference Doesn't Matter

- Low-volume applications (< 1M tokens/month): Absolute difference is minimal

- High-stakes decisions: Accuracy > cost (legal, medical, financial)

- Irreplaceable capability: If only one model can do the job (video for Gemini) or one is clearly stronger at it (coding for Claude)

- Developer productivity: If Claude saves 2 hours/week, that justifies the API premium
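To check the productivity argument for a specific workload, compare the monthly API premium with the value of the time saved. A sketch in which the hourly rate, the weeks-per-month factor, and the token volumes are assumptions to replace with your own. The premium uses the standard-tier price gap from the pricing table ($3/M input, $13/M output), and `premium_justified` is an illustrative name.

```python
WEEKS_PER_MONTH = 52 / 12  # about 4.33


def premium_justified(monthly_input_m, monthly_output_m, hours_saved_per_week, hourly_rate):
    """Return (premium, value_of_time, justified) in USD per month.

    monthly_input_m / monthly_output_m are token volumes in millions.
    The premium is the Claude-minus-Gemini gap: $3/M input, $13/M output.
    """
    premium = monthly_input_m * (5.00 - 2.00) + monthly_output_m * (25.00 - 12.00)
    value_of_time = hours_saved_per_week * WEEKS_PER_MONTH * hourly_rate
    return premium, value_of_time, value_of_time >= premium


premium, value, ok = premium_justified(monthly_input_m=50, monthly_output_m=10,
                                       hours_saved_per_week=2, hourly_rate=100)
print(f"premium ${premium:,.0f}/month vs time saved ${value:,.0f}/month -> justified: {ok}")
```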

When Price Difference is Critical

- High-volume B2C: Millions of users = massive cost multiplier

- Tight margins: Startups, non-profits, education

- Commodity tasks: Content summarization, data extraction

- Experimental phase: Testing before production commitment

Future-Proofing Considerations

Model Evolution Trajectories

Gemini Roadmap Indicators:

- Expanding multimodal to more formats

- Longer context windows (2M+ likely in 2026)

- Tighter Google ecosystem integration

- Focus on cost leadership

Claude Roadmap Indicators:

- Enhanced agent capabilities

- Improved coding benchmarks

- Safety/alignment innovations

- Focus on enterprise trust

Platform Lock-In Risks

| Risk Factor | Gemini 3 Pro | Claude Opus 4.5 |
|---|---|---|
| API Compatibility | Native + OpenAI-compatible | Native + OpenAI-compatible |
| Ecosystem Lock-in | Google Cloud preferred | Multi-cloud (AWS, GCP, Azure) |
| Price Volatility | Google has infrastructure advantage | Anthropic dependent on cloud providers |
| Feature Deprecation | Google history concerning | Anthropic more stable |

Mitigation Strategy: Use unified API abstraction layer to enable easy model switching
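A minimal sketch of such a layer. It assumes a single `complete(model, prompt)` callable wraps whichever API or aggregator you use; that callable, the `MODELS` mapping, and the fallback order are all hypothetical. Application code refers to logical roles instead of vendor model IDs, so switching providers becomes a one-line change, and a failed call falls through to the next model in the list.

```python
from typing import Callable, Dict, List

# Logical role -> ordered list of model IDs to try (hypothetical mapping).
MODELS: Dict[str, List[str]] = {
    "multimodal": ["gemini-3-pro"],
    "coding": ["claude-opus-4-5-20251101", "gemini-3-pro"],
    "bulk": ["gemini-3-pro", "claude-opus-4-5-20251101"],
}


def run(role: str, prompt: str, complete: Callable[[str, str], str]) -> str:
    """Return the first successful completion for `role`; raise if every model fails."""
    last_error = None
    for model in MODELS[role]:
        try:
            return complete(model, prompt)
        except Exception as exc:  # e.g. outage, rate limit, deprecated model ID
            last_error = exc
    raise RuntimeError(f"all models failed for role {role!r}") from last_error
```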

Conclusion: The Right Model for Your Needs

Gemini 3 Pro and Claude Opus 4.5 represent two different philosophies of AI development:

Gemini 3 Pro = Broad multimodal intelligence at accessible prices

- Choose when: Video/audio processing, long documents, cost-sensitive, rapid prototyping

- Avoid when: Security-critical, absolute coding reliability needed, agent autonomy required

Claude Opus 4.5 = Deep coding reliability with safety-first design

- Choose when: Mission-critical code, autonomous agents, security-sensitive, quality > cost

- Avoid when: Video processing, massive context needs, budget-constrained scaling

The Optimal Strategy for Most Teams: Use both intelligently

- Route tasks to the model with the strongest capability/cost profile

- Use API aggregators for unified implementation

- Monitor performance and cost metrics to refine routing logic
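Monitoring can start as simply as recording the cost and outcome of each call by task type and model. A minimal sketch: `RoutingMetrics` is a hypothetical helper, "success" is whatever acceptance check you already apply, and cost per accepted result is one reasonable routing metric among several.

```python
from collections import defaultdict


class RoutingMetrics:
    """Track spend and outcomes per (task_type, model) to refine routing rules."""

    def __init__(self):
        self._stats = defaultdict(lambda: {"calls": 0, "successes": 0, "cost": 0.0})

    def record(self, task_type, model, cost_usd, success):
        """Log one call: its USD cost and whether the output was accepted."""
        stats = self._stats[(task_type, model)]
        stats["calls"] += 1
        stats["successes"] += int(success)
        stats["cost"] += cost_usd

    def cost_per_success(self, task_type, model):
        """Total spend divided by accepted outputs (inf if none recorded yet)."""
        stats = self._stats.get((task_type, model))
        if not stats or stats["successes"] == 0:
            return float("inf")
        return stats["cost"] / stats["successes"]

    def best_model(self, task_type):
        """Model with the lowest observed cost per success for this task, or None."""
        models = [m for (t, m) in self._stats if t == task_type]
        return min(models, key=lambda m: self.cost_per_success(task_type, m), default=None)
```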

The AI model landscape has matured beyond “which is best?” to “which is best for this specific task?” Understanding the nuanced trade-offs between multimodal capabilities and pricing economics is key to maximizing ROI from frontier AI in 2025.

Quick Decision Table

| “I need to…” | Best Choice | Estimated Monthly Cost (illustrative) |
|---|---|---|
| Analyze 10K videos/month | 🏆 Gemini 3 Pro | $20K (vs $45K+ with Claude workaround) |
| Build autonomous coding agent | 🏆 Claude Opus 4.5 | $50K (reliability worth premium) |
| Create customer chatbot | 🏆 Gemini 3 Pro | $14K (vs $30K with Claude) |
| Process legal contracts | 🏆 Claude Opus 4.5 | $50K (accuracy prevents costly errors) |
| Generate blog content | 🏆 Gemini 3 Pro | $12K (vs $25K with Claude) |
| Debug production code | 🏆 Claude Opus 4.5 | Quality > cost for critical systems |
| Prototype MVP quickly | 🏆 Gemini 3 Pro | $8K (vs $20K with Claude) |
| Transcribe meetings | 🏆 Gemini 3 Pro | Native audio = only option |

| Transcribe meetings | 🏆 Gemini 3 Pro | Native audio = only option |

Access Both Models: CometAPI and similar aggregators provide unified API access to both Gemini 3 Pro and Claude Opus 4.5 at competitive pricing.
