What is DeepSeek V4 Engram Architecture and Why Is It Revolutionary?

DeepSeek V4 with Engram architecture marks a significant shift in model design, introducing O(1) memory lookup that targets the “dual-task problem” of traditional Transformer models. Released jointly by DeepSeek and Peking University on January 12, 2026 (arXiv:2601.07372), Engram separates static memory retrieval from dynamic neural computation, so the network no longer spends expensive computation repeatedly reconstructing simple patterns like “the capital of France is Paris.” Instead of handling memorization and reasoning through the same mechanism, Engram uses deterministic hash-based lookups for static patterns (O(1) per lookup) and reserves neural computation for genuine reasoning. Combined with the U-shaped scaling law for parameter allocation and mHC (manifold-constrained Hyperconnection) for stable trillion-parameter training, this design is intended to let DeepSeek V4 reach strong performance at much lower cost, continuing DeepSeek's pattern of challenging the assumption that “bigger models equal better performance.”

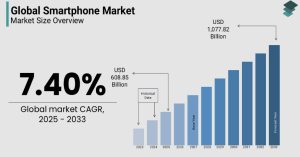

DeepSeek's earlier releases have already moved markets. On January 27, 2025, NVIDIA lost nearly $600 billion in market value in a single day after DeepSeek's R1 model showed performance comparable to OpenAI's o1, with a reported reinforcement learning cost of only $294,000. With V4's Engram architecture, DeepSeek is positioned to push further on the argument that algorithmic innovation can beat brute-force computation, potentially reshaping the competitive dynamics between Chinese and Western AI companies.

Understanding the Transformer Dual-Task Problem

Before appreciating Engram's innovation, we must understand the fundamental flaw it addresses in traditional Transformer architectures.

The Core Problem: Mixed Processing of Different Task Types

Transformer models process two fundamentally different types of tasks using the same computational mechanism:

Task 1: Static Memory (Should be O(1))

- Entity names and factual knowledge

- Fixed phrases and common expressions

- Stable linguistic patterns

- Memorized facts requiring simple lookup

Task 2: Dynamic Reasoning (Requires Neural Computation)

- Contextual relationships and dependencies

- Long-range logical reasoning

- Complex compositional understanding

- Novel problem-solving

Current Transformers mix both tasks within the same set of weights, forcing the model to waste massive computational resources repeatedly rebuilding static patterns that should require simple lookup. This is analogous to your browser re-parsing the HTML for “python.org” every single time you visit—completely unnecessary and inefficient.

The Computational Waste

Traditional Transformer attention mechanisms operate with O(n²) complexity where n is sequence length. For every token, the model must:

- Compute attention scores across the entire sequence

- Process both static patterns and dynamic reasoning identically

- Rebuild memorized facts through expensive matrix operations

- Allocate equal computational budget to trivial and complex tasks

This fundamental inefficiency becomes increasingly problematic as context windows expand and models scale to trillions of parameters. Engram's O(1) memory lookup directly addresses this waste.

Engram Architecture: Deep Technical Analysis

Engram introduces a “lookup-compute separation” paradigm that fundamentally reorganizes how language models process information.

Core Concept: Separating Memory from Computation

Engram decomposes language modeling into two independent stages:

- Memory Lookup: Engram module rapidly retrieves static patterns through deterministic hashing

- Neural Computation: MoE (Mixture of Experts) specialists focus exclusively on composition and reasoning

This separation allows each component to optimize for its specific task type, dramatically improving overall efficiency.

Four Key Technical Steps

Step 1: Token Compression

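    import unicodedata

    def compress_token(token_text):
        # token_text is the token's string form; normalize() needs a str, not an integer ID
        normalized = unicodedata.normalize('NFKC', token_text)
        return normalized.lower()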

Engram applies text normalization based on:

- NFKC (Compatibility Decomposition followed by Canonical Composition) normalization

- Lowercase mapping for case-insensitive matching

- Text equivalence mappings

This reduces the effective vocabulary by 23%, decreasing memory requirements while preserving semantic information.
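As a rough illustration of how this normalization collapses equivalent surface forms, the sketch below applies NFKC plus lowercasing to a handful of made-up token strings and compares the number of distinct keys before and after. The sample tokens and the helper name `compress` are assumptions for illustration; the reduction on a real vocabulary depends on the tokenizer.

```python
import unicodedata

def compress(token_text: str) -> str:
    """NFKC-normalize and lowercase one token string (illustrative helper)."""
    return unicodedata.normalize("NFKC", token_text).lower()

# Made-up sample vocabulary: several entries differ only by case or by
# Unicode compatibility form (fullwidth letters, the "fi" ligature).
vocab = [
    "Paris", "paris", "PARIS",
    "\uff30\uff41\uff52\uff49\uff53",  # "Paris" written with fullwidth letters
    "\ufb01le",                        # "file" spelled with the fi ligature
    "file", "File", "token",
]

compressed = {compress(t) for t in vocab}
print(len(vocab), "->", len(compressed))
print(sorted(compressed))
```

Entries that differ only in case or compatibility form end up sharing one key, which is the effect the compression step relies on.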

Step 2: Multi-Head Hashing

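    from functools import reduce
    from operator import xor

    class MultiHeadHash:
        def __init__(self, seeds, table_size):
            self.seeds, self.table_size = seeds, table_size

        def hash_ngram(self, ngram, head_id):
            combined = reduce(xor, ngram)  # XOR-mix the n-gram's integer token IDs
            return hash(combined + self.seeds[head_id]) % self.table_size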

The hashing mechanism works through:

- N-gram Extraction: Captures the 2-gram and 3-gram that end at the current token (suffix N-grams)

- Multiple Hash Heads: K independent hash functions map N-grams to embedding table indices

- Deterministic Addressing: Identical N-grams always map to the same index

- Collision Resistance: Layer-specific hash functions, XOR mixing, and prime-sized buckets ensure uniform distribution

Key Properties:

- Retrieves embedding vectors e_{t,n,k} from static memory table E

- Guarantees consistent lookups for recurring patterns

- Distributes patterns uniformly across memory to minimize collisions
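The properties above can be sketched as a toy lookup table. This is a minimal illustration, not the paper's implementation: the class name `NgramHashMemory`, the multiply-then-XOR mixing function, the table size 10007, and the choice of 4 heads are all assumptions made for the example.

```python
import numpy as np

class NgramHashMemory:
    """Toy multi-head hashed n-gram embedding table (illustrative only)."""

    def __init__(self, num_heads=4, table_size=10007, dim=8, seed=0):
        rng = np.random.default_rng(seed)
        self.dim = dim
        self.table_size = table_size  # a prime bucket count (assumed value)
        # One odd multiplier per head, so heads address the table differently.
        self.multipliers = [int(m) | 1 for m in rng.integers(1, 2**31, size=num_heads)]
        # One embedding table per head: shape (heads, table_size, dim).
        self.tables = rng.normal(size=(num_heads, table_size, dim)).astype(np.float32)

    def index(self, ngram, head):
        """Deterministically map a tuple of token IDs to a row index."""
        h = 0
        for tok in ngram:
            # Multiply by the head's seed, then XOR in the next token ID.
            h = ((h * self.multipliers[head]) ^ int(tok)) % (2**61 - 1)
        return h % self.table_size

    def lookup(self, token_ids, t, orders=(2, 3)):
        """Return embeddings for the suffix n-grams ending at position t."""
        out = []
        for n in orders:
            if t + 1 < n:
                continue  # not enough history for this n-gram order
            ngram = tuple(token_ids[t + 1 - n : t + 1])
            for head in range(len(self.multipliers)):
                out.append(self.tables[head, self.index(ngram, head)])
        return np.stack(out) if out else np.empty((0, self.dim), dtype=np.float32)

mem = NgramHashMemory()
tokens = [17, 42, 99, 42, 99]
a = mem.lookup(tokens, t=2)  # 2-gram (42, 99) and 3-gram (17, 42, 99)
b = mem.lookup(tokens, t=4)  # 2-gram (42, 99) and 3-gram (99, 42, 99)
print(a.shape, np.array_equal(a[:4], b[:4]))
```

The first four rows of `a` and `b` come from the same 2-gram, so they are identical: the recurring pattern is fetched from the same addresses each time rather than being recomputed.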

Step 3: Context-Aware Gating

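    import numpy as np

    def context_gating(h_t, retrieved_embeddings):
        # h_t: (d,) hidden state; retrieved_embeddings: (m, d) rows from the hash lookup
        scores = h_t @ retrieved_embeddings.T
        α_t = (1.0 / (1.0 + np.exp(-scores))).mean()  # sigmoid, averaged into one scalar gate
        return α_t * retrieved_embeddings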

The gating mechanism intelligently filters retrieved memories:

- Query: Current hidden state h_t provides context

- Key/Value: Retrieved memory projections from hash lookup

- Computation: Normalized dot product followed by a sigmoid produces the gating scalar α_t ∈ (0, 1)

- Function: When retrieved memories conflict with context, gating suppresses noise

This ensures that static memories only contribute when contextually appropriate.
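To make the suppression behavior concrete, here is a small NumPy sketch of a gate that includes the normalization step. The use of RMS normalization, the 1/sqrt(d) scaling, and the function names are assumptions chosen for illustration; the source only specifies a normalized dot product followed by a sigmoid.

```python
import numpy as np

def rms_norm(x, eps=1e-6):
    """Scale vectors to unit root-mean-square along the last axis."""
    return x / np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)

def gate_memory(h_t, memory):
    """Gate retrieved memory rows by their agreement with the hidden state.

    h_t:    (d,)   current hidden state (the query)
    memory: (m, d) retrieved embeddings (used as both key and value here)
    """
    d = h_t.shape[-1]
    q = rms_norm(h_t)
    k = rms_norm(memory)
    scores = (k @ q) / np.sqrt(d)                      # one normalized score per row
    alpha = float((1.0 / (1.0 + np.exp(-scores))).mean())  # sigmoid, then one scalar gate
    return alpha * memory, alpha

h = np.array([1.0, -2.0, 0.5, 3.0])
_, alpha_agree = gate_memory(h, h[None, :])      # memory points the same way as h
_, alpha_conflict = gate_memory(h, -h[None, :])  # memory points the opposite way
print(alpha_agree, alpha_conflict)
```

Memory that agrees with the context gets a gate above 0.5 and passes through largely intact, while conflicting memory gets a gate below 0.5 and is scaled down.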

Step 4: Residual Fusion

    def residual_fusion(gated_value, residual_stream):
        # causal_conv is assumed to be a causal 1-D convolution defined by the model code
        return residual_stream + causal_conv(gated_value, kernel_size=4)

The final integration:

- Gated values pass through small-depth causal convolution (kernel=4)

- Output fuses into the main residual stream

- Preserves gradient flow while incorporating retrieved memories
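A runnable version of this step might look like the following NumPy sketch. The per-channel (depthwise) form of the convolution, the left-padding scheme, and the uniform kernel used in the demo are assumptions; the source only states a causal convolution with kernel size 4 added to the residual stream.

```python
import numpy as np

def causal_depthwise_conv(x, kernel):
    """Causal 1-D convolution applied independently to each channel.

    x:      (T, d) sequence of gated memory vectors
    kernel: (k, d) per-channel taps; kernel[-1] weights the current step
    """
    T, d = x.shape
    k = kernel.shape[0]
    # Left-pad only, so position t never sees positions after t.
    padded = np.concatenate([np.zeros((k - 1, d)), x], axis=0)
    out = np.zeros((T, d))
    for j in range(k):
        out += kernel[j] * padded[j : j + T]
    return out

def residual_fusion(residual_stream, gated_value, kernel):
    """Add the convolved memory signal to the main residual stream."""
    return residual_stream + causal_depthwise_conv(gated_value, kernel)

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 3))
kernel = np.full((4, 3), 0.25)        # kernel size 4, uniform taps for the demo
fused = residual_fusion(np.zeros((6, 3)), x, kernel)
print(fused.shape)
```

Because the memory signal is added to the residual stream rather than replacing it, the identity path through the layer is left untouched.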

Complexity Analysis: Why O(1) Matters

Traditional Transformer:

- Attention: O(n²) where n is sequence length

- Feed-forward: O(n)

Engram:

- Hash lookup: O(1) constant time regardless of sequence length

- Gating: O(d) where d is embedding dimension

- Convolution: O(k) where k is kernel size (constant at 4)

In long-context scenarios, Engram's O(1) lookup frees massive attention budget for global context processing. As sequences extend to 100K+ tokens, the efficiency gains become transformative.
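The scaling difference can be checked with simple counting. The sketch below compares the number of attention scores in one causal attention head with the number of table reads Engram needs per token; the assumption of 4 hash heads is illustrative, while the two N-gram orders (2-grams and 3-grams) follow the description above.

```python
def total_attention_scores(n: int) -> int:
    """Scores over a causal sequence of length n: 1 + 2 + ... + n, i.e. O(n^2)."""
    return n * (n + 1) // 2

def engram_lookups_per_token(num_orders: int = 2, num_heads: int = 4) -> int:
    """Table reads per token: one per (n-gram order, hash head) pair."""
    return num_orders * num_heads

for n in (1_000, 100_000):
    print(n, total_attention_scores(n), engram_lookups_per_token())
```

Growing the sequence 100x multiplies the attention score count by roughly 10,000x, while the per-token lookup count stays fixed.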

The U-Shaped Scaling Law: Theoretical Foundation

DeepSeek's research introduces a critical theoretical framework for optimal parameter allocation in hybrid architectures.

Mathematical Formulation

Given fixed parameter budget and FLOPs, define allocation ratios:

- r_e: Proportion of sparse capacity allocated to MoE experts

- r_m: Proportion of sparse capacity allocated to Engram memory

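Validation loss as a function of the allocation, L(r_e, r_m) with r_e + r_m = 1, is U-shaped: both extremes are suboptimal, and the best performance lies in between: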

Suboptimal: Pure MoE (r_e = 1, r_m = 0)

- Powerful computational ability

- Wastes parameters rebuilding static patterns

- Poor memory efficiency

Suboptimal: Pure Memory (r_e = 0, r_m = 1)

- Perfect static memory

- Loses compositional and reasoning capabilities

- Cannot handle novel situations

Optimal: Balanced Allocation (r_e ≈ 0.75-0.80, r_m ≈ 0.20-0.25)

- Computation and memory reach optimal balance

- Maximum performance per parameter

- Efficient use of both static and dynamic capabilities

Physical Interpretation

The U-shaped curve reveals fundamental insights:

- Pure-MoE extreme (r_m = 0): Strong at reasoning but inefficient at memory

- Pure-memory extreme (r_e = 0): Efficient at memory but weak at reasoning

- Sweet spot: Achieves synergy between complementary capabilities

For DeepSeek V4, this translates to approximately 20-25% of sparse parameters allocated to Engram memory, with 75-80% devoted to MoE computational experts.
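As a worked example of the allocation arithmetic, the sketch below splits a sparse-parameter budget according to r_m. The 100B budget and the function name `split_sparse_budget` are hypothetical; only the 20-25% range comes from the text.

```python
def split_sparse_budget(sparse_params: float, r_m: float) -> dict:
    """Split a sparse-parameter budget between Engram memory and MoE experts."""
    if not 0.0 <= r_m <= 1.0:
        raise ValueError("r_m must lie in [0, 1]")
    r_e = 1.0 - r_m  # the two ratios share one budget, so r_e + r_m = 1
    return {"engram": sparse_params * r_m, "moe": sparse_params * r_e}

# Hypothetical budget of 100B sparse parameters.
for r_m in (0.20, 0.25):
    parts = split_sparse_budget(100e9, r_m)
    print(f"r_m={r_m:.2f}: Engram {parts['engram'] / 1e9:.0f}B, MoE {parts['moe'] / 1e9:.0f}B")
```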

mHC: Enabling Trillion-Parameter Stable Training

The manifold-constrained Hyperconnection (mHC) solves critical training stability issues that emerge at massive scale.

The Original Problem

Standard hyperconnections suffer from severe issues:

- Broken Identity Mapping: Composite mappings destroy residual properties

- Catastrophic Signal Amplification: Gain reaches 10³ to 10⁵ in deep networks

- Training Instability: Loss spikes and gradient explosions beyond 60 layers

- Memory Overhead: Significant memory access costs

mHC Solution

Update Rule with Constraints:

The residual mixing matrix M must be:

- Doubly Stochastic: Belongs to the Birkhoff polytope

- Non-negative: All entries M_ij ≥ 0

- Normalized: Rows and columns sum to 1

- Enforced: Via the Sinkhorn-Knopp algorithm (alternating row and column normalization)

Pre/post-processing mappings W_pre and W_post must be non-negative mixing mappings with gradient-optimized kernels.
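The Sinkhorn-Knopp step is easy to sketch in NumPy: exponentiate to get strictly positive entries, then alternately normalize rows and columns. The iteration count, the 4x4 size, and the use of `exp` to enforce positivity are assumptions for this example, not details taken from the mHC paper.

```python
import numpy as np

def sinkhorn_knopp(logits, num_iters=50):
    """Approximately project a square matrix onto the doubly stochastic set."""
    m = np.exp(logits - logits.max())  # strictly positive entries
    for _ in range(num_iters):
        m = m / m.sum(axis=1, keepdims=True)  # make rows sum to 1
        m = m / m.sum(axis=0, keepdims=True)  # make columns sum to 1
    return m

rng = np.random.default_rng(0)
M = sinkhorn_knopp(rng.normal(size=(4, 4)))
print(M.sum(axis=0), M.sum(axis=1))
print(np.linalg.norm(M, 2))  # largest singular value
```

A doubly stochastic matrix has a largest singular value of 1, so stacking many such mixing matrices cannot amplify the signal, which is the property that keeps the residual path stable in deep networks.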

Key Advantages

- Restored Identity Mapping: Maintains residual connection properties at scale

- Prevented Gradient Explosions: Eliminates loss spikes during training

- Trillion-Parameter Support: Enables stable training at unprecedented scales

- Performance Gains: Provides significant improvements in convergence and final quality

- Superior Scalability: Optimized memory management and inter-node communication

This breakthrough makes DeepSeek V4's projected trillion-parameter scale practically achievable.

Empirical Performance: Benchmark Results

DeepSeek's published benchmarks demonstrate Engram's superiority across diverse tasks.

Performance Comparison (Equal Parameters, Equal FLOPs)

| Benchmark | MoE Baseline | Engram-27B | Improvement (percentage points) |
|---|---|---|---|
| MMLU | 75.4% | 78.8% | +3.4 |
| CMMLU | 75.0% | 79.0% | +4.0 |
| BBH | 65.0% | 70.0% | +5.0 |
| ARC-Challenge | 72.3% | 76.0% | +3.7 |
| HumanEval | 85.9% | 88.9% | +3.0 |
| MATH | 55.0% | 57.4% | +2.4 |
| Multi-Query NIAH | 84.2% | 97.0% | +12.8 |

Source: arXiv:2601.07372

Mechanism Analysis

BBH Improvement (+5.0 points): Beyond Memory

The substantial gain on Big-Bench Hard tasks reveals that Engram doesn't merely improve memorization—it frees computational resources for reasoning. By offloading static pattern retrieval to O(1) lookup, more neural capacity becomes available for complex logical operations.

Multi-Query NIAH Improvement (+12.8 points): Long-Context Mastery

The dramatic improvement on Multi-Query Needle-in-a-Haystack demonstrates Engram's power in long-context scenarios. O(1) memory lookup releases attention budget to handle long-range dependencies, enabling the model to track multiple information threads across extended sequences.

Consistent Gains Across Domains

Improvements span knowledge (MMLU, CMMLU), reasoning (BBH, ARC), coding (HumanEval), mathematics (MATH), and long-context understanding. This breadth confirms that memory-compute separation benefits diverse task types rather than optimizing for specific benchmarks.

DeepSeek V4 Predictions: Architecture and Impact

Based on disclosed information and DeepSeek's development patterns, we can make informed predictions about V4's specifications and performance.

Expected Architecture

Release Timeline:

- Mid-February 2026 (around Chinese New Year)

- Following DeepSeek's pattern of holiday releases

Core Configuration:

- Base Technologies: mHC + MoE + MLA (Multi-head Latent Attention) + Engram

- Total Parameters: Approximately 1 trillion

- Active Parameters: ~32B per token (approximately 3% activation rate)

- Engram Memory: 20-25% of sparse parameters

- MoE Experts: 75-80% of sparse parameters

Performance Projections

| Benchmark | Claude Opus 4.5 | DeepSeek V4 (Estimated) |
|---|---|---|
| HumanEval | 92% | ~90-95% |
| GSM8K | 92% | ~94% |
| SWE-bench Verified | 80.9% | Target >85% |

Training Cost Comparison

| Model | Training Cost |
|---|---|
| DeepSeek R1 RL Phase | $294,000 |
| DeepSeek V3 Full Training | ~$5.58M |
| GPT-4 (Estimated) | >$100M |
| DeepSeek V4 | To be announced |

DeepSeek's algorithmic efficiency suggests V4 will maintain the pattern of achieving competitive performance at 10-20x lower cost than Western counterparts.
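The 10-20x figure can be sanity-checked against the table above. The snippet below uses only the quoted numbers; since the GPT-4 figure is a lower-bound estimate, the ratio is a lower bound too, and it compares V3 (not V4, whose cost is unannounced) with GPT-4.

```python
# Figures as quoted in the training cost table; GPT-4 is a lower-bound estimate.
costs_usd = {
    "DeepSeek R1 RL phase": 294_000,
    "DeepSeek V3 full training": 5_580_000,
    "GPT-4 (estimated, lower bound)": 100_000_000,
}

v3 = costs_usd["DeepSeek V3 full training"]
gpt4 = costs_usd["GPT-4 (estimated, lower bound)"]

ratio = gpt4 / v3   # how many times more GPT-4 cost than V3
share = v3 / gpt4   # V3 cost as a fraction of GPT-4 cost
print(f"GPT-4 / V3 cost ratio: at least {ratio:.1f}x")
print(f"V3 as a share of GPT-4 cost: at most {share:.1%}")
```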

Industry Impact and Future Trends

DeepSeek V4's innovations carry profound implications for the AI industry and competitive landscape.

Immediate Market Impact

Challenge to “Bigger is Better” Paradigm

DeepSeek demonstrates that algorithmic innovation can outperform brute-force scaling. This challenges the assumption that only companies with massive computational budgets can compete in frontier AI development.

Geopolitical Implications

The nearly $600 billion NVIDIA market cap loss following DeepSeek R1's release signals investor recognition that U.S. hardware advantages may not guarantee AI dominance. Efficient Chinese models reduce dependence on cutting-edge chips, potentially reshaping AI competition dynamics.

Cost Structure Disruption

If DeepSeek V4 matches or exceeds GPT-4/Claude performance at 5-10% of the training cost (a 10-20x reduction), this pressures Western AI companies to either:

- Dramatically improve their own efficiency

- Compete on price, compressing profit margins

- Differentiate through other means (safety, deployment, specialized capabilities)

Future Trends (2026-2028)

Trend 1: Conditional Memory as Standard Primitive (2026-2027)

Conditional memory mechanisms like Engram will become foundational building blocks alongside MoE and attention. Future architectures will routinely incorporate:

- Hash-based static pattern retrieval

- Context-aware memory gating

- Memory-compute separation as default design principle

Trend 2: Algorithm-Driven Cost Reduction (2026-2027)

Engram's O(1) lookup reduces computational requirements, while mHC stable training decreases costly retraining from failures. Expect:

- Continued focus on efficiency over raw scale

- Novel architectures challenging current paradigms

- Democratization of frontier AI capabilities

Trend 3: Hardware-Software Co-Design (2027+)

Engram's deterministic computation patterns enable new optimization opportunities:

- Main Memory-GPU Memory Collaboration: Offloading embedding tables to main memory

- Specialized Accelerators: Hardware optimized for hash lookups and memory access patterns

- Custom Silicon: Chips designed specifically for memory-compute separated architectures
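The main-memory offloading idea rests on one property: table addresses depend only on token IDs, so they can be computed before the model runs. The sketch below plans which rows a sequence will read and stages just those rows. Python's built-in `hash` on integer tuples, the table shape, and the function name `plan_rows` are stand-ins chosen for illustration, and the NumPy array merely plays the role of a table held in host memory.

```python
import numpy as np

def plan_rows(token_ids, table_size, order=2):
    """List the table rows a sequence will read, using token IDs alone."""
    rows = [
        hash(tuple(token_ids[t - order + 1 : t + 1])) % table_size
        for t in range(order - 1, len(token_ids))
    ]
    return np.unique(np.array(rows, dtype=np.int64))

# Stand-in for a large embedding table held in host (CPU) memory.
host_table = np.random.default_rng(0).normal(size=(10007, 8)).astype(np.float32)

tokens = [17, 42, 99, 42, 99, 7]
rows = plan_rows(tokens, table_size=len(host_table))
staged = host_table[rows]  # only these rows would need to be copied to the GPU
print(len(rows), staged.shape)
```

Because no hidden state is needed to compute `rows`, the gather could in principle overlap with earlier layers' computation instead of stalling the forward pass.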

Guidance for AI Practitioners

Different stakeholders should prepare differently for the Engram paradigm.

For AI Researchers

Deep Dive into Mechanisms

- Study Engram's hashing mechanisms and collision resolution strategies

- Investigate gating strategies for different task types

- Explore U-shaped scaling law variations across domains

Theoretical Extensions

- Research optimal memory allocation for specialized tasks

- Explore conditional memory fusion with other architectural innovations

- Develop theoretical frameworks for memory-compute tradeoffs

For Architects and Engineers

Application Evaluation

- Assess Engram's applicability to your specific use cases

- Consider main memory-GPU memory collaborative architectures

- Identify opportunities for hardware-software co-optimization

Infrastructure Planning

- Prepare for models with heterogeneous memory requirements

- Design systems supporting both static and dynamic computation

- Optimize for deterministic memory access patterns

For Developers

Stay Current

- Monitor DeepSeek V4's open-source release and API availability

- Learn conditional memory usage patterns

- Experiment with memory-compute separated architectures

Prepare for Paradigm Shift

- Expect more efficient AI development workflows

- Anticipate new optimization techniques specific to hybrid architectures

- Build skills in both traditional neural networks and memory-based systems

Conclusion: The Memory-Compute Separation Revolution

DeepSeek V4 with Engram architecture represents a paradigm shift in AI design—from “brute-force computation” to “intelligent allocation,” from “unified architecture” to “memory-compute separation.”

As DeepSeek founder Liang Wenfeng observed: “MoE solved the problem of ‘how to compute less,’ while Engram directly solves the problem of ‘don't compute blindly.’”

Core Innovations Recap

Architectural Innovation

- Engram introduces memory-compute separation, solving Transformer's dual-task problem

- O(1) deterministic lookup for static patterns frees neural capacity for reasoning

Theoretical Guidance

- U-shaped scaling law provides framework for optimal sparse model design

- Guides MoE and Engram allocation for maximum performance per parameter

System Efficiency

- Deterministic addressing enables embedding table offloading to main memory

- Relaxes GPU HBM capacity limits for very large embedding tables

Performance Excellence

- At equal parameters and FLOPs, Engram-27B outperforms the MoE baseline on every reported benchmark

- Gains across knowledge, reasoning, code, math, and long-context tasks

Industry Disruption

- Continuous algorithmic cost reduction challenges compute-scaling assumptions

- Intensifies China-U.S. AI competition through efficiency rather than hardware

The Path Forward

The future belongs to AI architects who can balance memory and computation, theory and practice, innovation and pragmatism. Engram opens a new door for this generation of builders.

For organizations and developers, the message is clear: algorithmic innovation matters as much as computational resources. The next wave of AI advancement may come not from those with the biggest GPU clusters, but from those with the most elegant solutions to fundamental problems.

DeepSeek V4 with Engram architecture demonstrates that the path to AI progress isn't singular. While some pursue ever-larger models trained on ever-larger clusters, others achieve comparable or superior results through architectural elegance and algorithmic efficiency.

As we approach DeepSeek V4's expected February 2026 release, the AI community stands at an inflection point. The question is no longer whether memory-compute separation will become standard, but how quickly the industry will adapt to this new paradigm and what innovations will emerge from this fundamental rethinking of AI architecture.