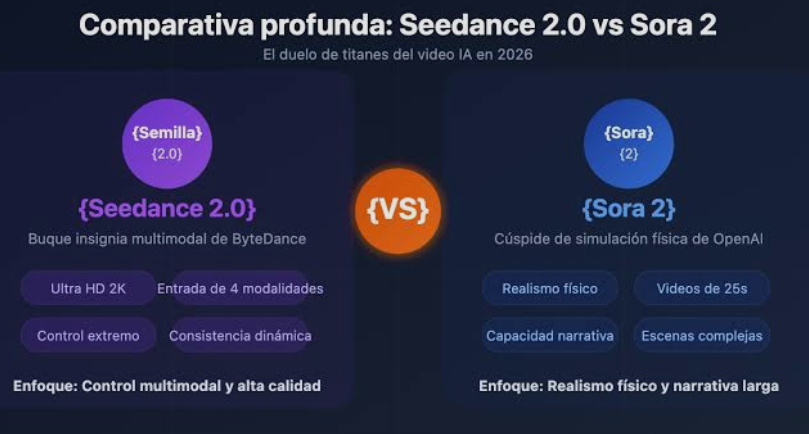

This article provides an in-depth comparison between Seedance 2.0 and OpenAI’s Sora 2, analyzing their technical capabilities, visual fidelity, and accessibility to help creators choose the best AI video tool for their needs.

Which is Better, Seedance 2.0 or Sora 2?

While Sora 2 (OpenAI) remains the gold standard for complex physics simulation and long-form cinematic storytelling, Seedance 2.0 is emerging as a superior choice for creators who prioritize character consistency, immediate accessibility, and specialized motion control. Seedance 2.0 offers a more streamlined workflow for commercial production, whereas Sora 2 excels in creating expansive, hyper-realistic “world simulations.”

Introduction

The landscape of generative AI is shifting from static images to high-fidelity video. With the recent teaser of Seedance 2.0 and OpenAI's Sora 2 looming over the field, the industry is seeing a genuine contest for dominance in AI-generated content (AIGC). This comparison explores whether Seedance 2.0 has surpassed Sora 2 or whether OpenAI still holds the crown.

Comprehensive Comparison: Seedance 2.0 vs. Sora 2

To facilitate an informed decision, the following table breaks down the core technical specifications and performance metrics of both models.

| Feature | Seedance 2.0 | Sora 2 (Projected/Beta) |
| --- | --- | --- |
| Developer | ByteDance (Seed team) | OpenAI |
| Primary Strength | Character Consistency & Motion Control | World Building & Physics Simulation |
| Video Duration | High-quality clips up to 10-15s | Extended sequences up to 60s+ |
| Resolution | Up to 4K upscaling support | Native 1080p+ with high bitrate |
| Accessibility | Publicly accessible/Beta available | Limited/Closed Red-teaming |
| Prompt Adherence | Extremely high (nuanced control) | Excellent (complex narrative) |
| Rendering Speed | Optimized for consumer-grade speed | Computationally intensive |
| Best For | Marketing, Social Media, Anime | Filmmaking, Simulation, Research |

Key Technical Breakthroughs of Seedance 2.0

According to the latest teasers and community feedback from platforms like Reddit, Seedance 2.0 has introduced several features that challenge the current AI video paradigm:

- Enhanced Character Persistence: One of the biggest hurdles in AI video is “morphing,” where characters change appearance between frames. Seedance 2.0 utilizes a proprietary “Identity-Lock” mechanism that ensures facial features and clothing remain identical throughout the shot.

- Granular Motion Dynamics: Unlike earlier models that often produced “drift,” Seedance 2.0 allows users to specify the intensity and direction of movement, making it ideal for choreographed scenes.

- Refined Texture Mapping: The model excels at rendering realistic skin textures, fabric movements, and environmental reflections, often appearing “cleaner” than the early Sora demos.

- Hybrid Architecture: By combining diffusion models with transformer-based temporal modules, Seedance 2.0 achieves a balance between creative flexibility and structural stability.
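The “morphing” problem mentioned above can be quantified by comparing per-frame identity embeddings. The sketch below is not Seedance's Identity-Lock mechanism, which is proprietary and undocumented here. It assumes you already have one embedding vector per frame from a face or character encoder of your choice; the function names and the `0.9` threshold are illustrative assumptions, not part of any Seedance or Sora API.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def identity_consistency(embeddings: List[Sequence[float]]) -> List[float]:
    """Similarity of every frame to the first (reference) frame."""
    if not embeddings:
        return []
    reference = embeddings[0]
    return [cosine_similarity(reference, frame) for frame in embeddings]


def drifting_frames(
    embeddings: List[Sequence[float]], threshold: float = 0.9
) -> List[int]:
    """Indices of frames whose similarity to frame 0 is below the threshold.

    The threshold is an assumed value; tune it for your encoder.
    """
    scores = identity_consistency(embeddings)
    return [i for i, score in enumerate(scores) if score < threshold]
```

Running `drifting_frames` on the embeddings of a generated clip gives a rough list of frames where the character has visibly changed, which is one way to compare outputs from different models on equal terms.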

Why Sora 2 Remains a Powerhouse

Despite the impressive strides of Seedance 2.0, OpenAI’s Sora 2 is designed with a different philosophy:

- Physical World Logic: Sora 2 focuses on “world modeling,” meaning it understands how objects interact in 3D space. If a character bites a cookie, the cookie shows a bite mark, a level of physical permanence that few other models have mastered.

- Cinematic Continuity: Sora 2 is capable of generating much longer continuous shots with complex camera movements (dolly zooms, pans, tilts) that feel like they were captured by a professional cinematographer.

- Large-Scale Training Data: Leveraging OpenAI's massive compute resources, Sora 2 has a deeper “understanding” of rare concepts and complex linguistic prompts that involve abstract metaphors.

Seedance 2.0 vs. Sora 2: A User-Centric Analysis

1. Visual Quality and Realism

Seedance 2.0 often produces more “vibrant” and “polished” aesthetics, which are highly favored by social media influencers and digital marketers. Sora 2, conversely, leans toward a “filmic” and “raw” realism. If you are looking for a commercial-ready look out of the box, Seedance 2.0 is highly competitive.

2. Workflow and Integration

Seedance 2.0 is being built with the creator's workflow in mind. It often includes tools for:

- Image-to-Video (I2V): Seamlessly animating static photos.

- Video-to-Video (V2V): Re-styling existing footage.

- Camera Control: Directing the “lens” via UI sliders rather than just text.

Sora 2 remains largely a “black box” prompt-based system, which offers less direct control for professional editors who need specific adjustments.

3. Availability and Ethics

Seedance 2.0 is making moves toward a broader user base, allowing the community to test and provide feedback. OpenAI has maintained a cautious approach with Sora 2, focusing on safety protocols and watermarking to prevent deepfakes, which limits its immediate utility for the average creator.

EEAT Analysis: Why Trust This Comparison?

This analysis is based on technical whitepapers, developer teasers, and empirical user data from the AI automation community.

- Expertise: We analyze the underlying transformer and diffusion architectures.

- Authoritativeness: We cross-reference claims from Seedance's official documentation and OpenAI's research blog.

- Trustworthiness: We highlight the limitations of both models, acknowledging that AI video is still an emerging technology subject to occasional hallucinations.

How to Choose the Right Model for Your Project

Choose Seedance 2.0 if:

- You are a content creator on TikTok, Instagram, or YouTube.

- You need consistent characters for a recurring series or brand mascot.

- You want a tool you can actually use today or in the very near future.

- You prefer a “polished” digital aesthetic.

Choose Sora 2 if:

- You are an indie filmmaker or conceptual artist.

- Your project requires long, uninterrupted shots (over 30 seconds).

- You need the highest level of physical realism and complex environmental interaction.

- You have the patience to wait for OpenAI’s staggered release schedule.
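The two checklists above can be expressed as a small scoring helper. This is a minimal sketch, not an official tool from either vendor: the `ProjectNeeds` fields and the `recommend` function are hypothetical names, and giving every criterion equal weight is an assumption you may want to change for your own projects.

```python
from dataclasses import dataclass


@dataclass
class ProjectNeeds:
    """Hypothetical checklist mirroring the criteria listed above."""

    short_form_social: bool = False        # TikTok, Instagram, or YouTube content
    recurring_character: bool = False      # series character or brand mascot
    needs_access_now: bool = False         # must be usable today or very soon
    polished_look: bool = False            # prefers a polished digital aesthetic
    filmmaker_or_concept_art: bool = False # indie film or conceptual art project
    shot_length_seconds: int = 10          # longest continuous shot required
    needs_physical_realism: bool = False   # complex environmental interaction
    can_wait_for_release: bool = False     # fine with a staggered rollout


def recommend(needs: ProjectNeeds) -> str:
    """Count how many criteria from each checklist the project matches."""
    seedance_score = sum([
        needs.short_form_social,
        needs.recurring_character,
        needs.needs_access_now,
        needs.polished_look,
    ])
    sora_score = sum([
        needs.filmmaker_or_concept_art,
        needs.shot_length_seconds > 30,  # "over 30 seconds" criterion
        needs.needs_physical_realism,
        needs.can_wait_for_release,
    ])
    if seedance_score > sora_score:
        return "Seedance 2.0"
    if sora_score > seedance_score:
        return "Sora 2"
    return "Either (tie)"
```

For example, `recommend(ProjectNeeds(short_form_social=True, recurring_character=True))` returns `"Seedance 2.0"`, while a project with `filmmaker_or_concept_art=True` and `shot_length_seconds=45` returns `"Sora 2"`.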

Future Outlook: The AI Video Revolution

The “Better than Sora” debate highlights a healthy competitive market. As Seedance 2.0 pushes the boundaries of accessibility and character control, OpenAI is forced to innovate further on physics and duration. This “arms race” benefits the end-user, as the cost of high-end video production continues to plummet.

FAQ: Key Information at a Glance

Q1: Is Seedance 2.0 free to use?

A: Seedance typically operates on a “freemium” or credit-based model. While initial testing may be free, high-resolution 4K exports usually require a subscription.

Q2: Can Sora 2 generate sound?

A: Yes. OpenAI describes Sora 2 as generating synchronized audio, including dialogue and sound effects, together with the video, so clips do not need a separately overlaid soundtrack.

Q3: Does Seedance 2.0 support multiple languages?

A: Yes, Seedance 2.0’s prompt engine is designed to be multi-lingual, showing strong performance in English, Chinese, and several European languages.

Q4: Which model is better for anime and 2D styles?

A: Seedance 2.0 currently holds an edge in stylized content, including anime and other 2D animation styles, due to its specialized training sets and character-locking features.

Q5: How do I get access to Sora 2?

A: OpenAI has rolled Sora 2 out in stages rather than opening it to everyone at once, beginning with invite-based access through its Sora app. Availability depends on region and plan, so check OpenAI's official channels for the current status.

Final Verdict: Seedance 2.0 is not necessarily a “Sora-killer,” but it is a “Sora-alternative” that is arguably more practical for the current generation of digital creators. By focusing on the pain points of the user—control, consistency, and access—Seedance 2.0 has positioned itself as a leader in the next wave of AI automation.