GLM-4.7 is the superior choice for complex reasoning, logic, and coding tasks, whereas GLM-4-5V is the specialized expert for multimodal vision tasks, OCR, and image analysis. While GLM-4.7 introduces a groundbreaking “Thinking Process” (Chain of Thought) that excels in math and programming, GLM-4-5V is built with a vision-transformer architecture that allows it to “see” and interpret visual data with high precision. For general-purpose chat and bilingual reasoning, GLM-4.7 is the current state-of-the-art flagship from Zhipu AI, while GLM-4-5V serves as the essential tool for developers requiring visual comprehension.

The Evolution of the GLM Series: A 2026 Perspective

The landscape of Large Language Models (LLMs) has shifted from simple text generation to specialized intelligence. Zhipu AI, a leader in the bilingual AI space, has strategically branched its GLM-4 family to address different cognitive needs. In 2026, the distinction between a “reasoning model” like GLM-4.7 and a “vision model” like GLM-4-5V is the key to building efficient, AI-driven workflows. Understanding the architectural differences between these two allows businesses to deploy the right model for the right job, maximizing performance while controlling compute costs.

GLM-4.7 represents the culmination of Zhipu’s efforts to match and exceed the reasoning capabilities of closed-source giants. It is designed to be a “thinking” model, utilizing a refined training set that prioritizes logical density. On the other hand, GLM-4-5V (the “V” standing for Vision) was developed to bridge the gap between natural language processing and computer vision. This specialized focus ensures that neither model is a “jack of all trades, master of none,” but rather a master of its specific domain.

GLM-4.7: The Master of Reasoning and “Thinking”

The standout feature of GLM-4.7 is its integrated “Thinking Process.” Unlike standard models that start emitting the final answer right away, GLM-4.7 is trained to first generate an intermediate reasoning trace, a Chain of Thought (CoT), before committing to a final answer. This deliberative reasoning allows the model to catch its own errors, evaluate multiple paths to a solution, and handle “System 2” thinking tasks that typically trip up faster, more impulsive models.

- Logical Density: It excels at multi-step problems where the conclusion depends on a series of verified premises.
- Mathematical Precision: In benchmarks involving complex calculus or symbolic logic, the “Thinking Process” significantly boosts accuracy.
- Instruction Following: The model has a high adherence rate for complex, multi-part prompts, making it ideal for agentic workflows.
- Bilingual Mastery: It maintains top-tier performance in both English and Chinese, understanding cultural nuances and technical terminology in both languages natively.

For developers, GLM-4.7 is a breath of fresh air because of its transparency. When the reasoning steps are visible (especially in the Flash and Pro versions), debugging why the model failed a specific logic test becomes much easier. This “glass-box” approach to AI intelligence is a major selling point for industries like finance and law, where the process of reaching a conclusion is as important as the conclusion itself.
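As a rough illustration of how a visible reasoning trace helps with debugging, the sketch below separates the trace from the final answer so each can be logged or inspected on its own. It assumes the raw model output wraps its reasoning in `<think>...</think>` tags, a common convention for open-weight reasoning models that this article does not confirm for GLM-4.7; the function name `split_reasoning` is hypothetical. A hosted API may instead return the trace in a separate response field.

```python
import re
from typing import Tuple

# Assumption: the reasoning trace is delimited by <think>...</think> tags.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> Tuple[str, str]:
    """Return (reasoning_trace, final_answer) from raw model text.

    If no <think> block is present, the trace is empty and the whole
    text is treated as the answer.
    """
    traces = [m.strip() for m in _THINK_RE.findall(raw_output)]
    answer = _THINK_RE.sub("", raw_output).strip()
    return "\n".join(traces), answer


if __name__ == "__main__":
    sample = "<think>17 is odd, so 17 * 2 = 34 is even.</think>The result is 34."
    trace, answer = split_reasoning(sample)
    print("TRACE:", trace)
    print("ANSWER:", answer)
```

Keeping the trace separate makes it possible to store it for audit purposes while showing end users only the answer.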

GLM-4-5V: The “Eyes” of the AI Ecosystem

While GLM-4.7 handles the logic, GLM-4-5V provides the visual interface. This model is built on a multimodal architecture that can ingest images, diagrams, and even video frames to provide descriptive or analytical output. It is not just a text model with an image-captioning add-on; it is a native multimodal model where visual and linguistic tokens are processed in a shared latent space, allowing for deep “visual reasoning.”

-

Advanced OCR (Optical Character Recognition): It can read handwritten notes, complex tables, and technical blueprints with extreme accuracy.

-

Spatial Reasoning: The model understands the layout of an image, such as the relative distance between objects or the hierarchy of a flowchart.

-

Visual Problem Solving: You can provide a photo of a broken circuit board, and GLM-4-5V can identify the burnt capacitor based on visual cues.

-

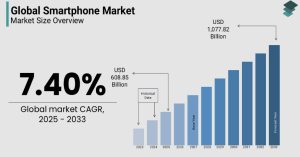

Chart and Graph Interpretation: It can turn a visual bar chart into a structured JSON data table or a written summary of trends.

GLM-4-5V is indispensable for intelligent document processing (IDP) and accessibility tools. In a corporate setting, it can be used to scan thousands of invoices, extracting line items and matching them against purchase orders. In a creative setting, it can act as a critic or an assistant, describing scenes and helping designers iterate on visual concepts. Its ability to “read” the world makes it the perfect companion to the “thinking” capabilities of its 4.7 sibling.
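To make the invoice and chart scenarios more concrete, here is a minimal sketch of packaging an image and an instruction into a single chat message. It assumes the endpoint accepts OpenAI-style content parts with the image passed inline as a base64 data URI; whether GLM-4-5V expects exactly this shape should be checked against the provider's documentation. The helper name `build_vision_message` is hypothetical.

```python
import base64
from typing import Any, Dict


def build_vision_message(image_bytes: bytes, prompt: str,
                         mime_type: str = "image/png") -> Dict[str, Any]:
    """Build one user message holding an image plus a text instruction.

    Assumption: the endpoint accepts OpenAI-style content parts, with the
    image passed inline as a base64 data URI.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "image_url",
             "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
            {"type": "text", "text": prompt},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real chart or invoice scan.
    message = build_vision_message(
        b"\x89PNG placeholder",
        "Extract the bar chart values as a JSON array of {label, value}.",
    )
    print(message["content"][1]["text"])
```

Asking for a fixed JSON shape in the prompt, as in the usage example, tends to make the extracted data easier to validate downstream.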

Performance Benchmarks: A Side-by-Side Comparison

When comparing the two models on standard benchmarks, the strengths of their respective architectures become clear. In text-only reasoning benchmarks like MMLU (Massive Multitask Language Understanding) or HumanEval (Coding), GLM-4.7 consistently outperforms GLM-4-5V. This is expected, as the 4.7 architecture is optimized for high-level cognitive tasks and long-context text processing.

However, in multimodal benchmarks like MMMU (Massive Multi-discipline Multimodal Understanding) or VQAv2 (Visual Question Answering), GLM-4-5V takes a commanding lead. While GLM-4.7 can sometimes “understand” an image if it is provided with a text-based description (via a secondary model), it lacks the native ability of 4-5V to parse raw pixels. Therefore, the choice between them is rarely about which is “better” in a vacuum, but which is better suited for the input data type.

| Capability | GLM-4.7 | GLM-4-5V |
| --- | --- | --- |
| Logic/Math Reasoning | Elite (with CoT) | Standard |
| Coding Assistance | High Performance | Moderate |
| Image Recognition | N/A | Elite |
| OCR Quality | N/A | Elite |
| Context Window | Up to 128K+ | Standard (~8K-32K) |
| Bilingual Proficiency | Native En/Zh | Native En/Zh |

The “Flash” Factor in GLM-4.7

A significant development in the 4.7 series is the introduction of GLM-4.7-Flash. This version is optimized for speed and cost-efficiency, making high-level reasoning accessible for real-time applications. While the full-scale GLM-4.7 Pro is intended for heavy research and complex architecture, the Flash version is the “workhorse” for 2026. It provides a significant portion of the Pro's reasoning power at a fraction of the latency.

GLM-4.7-Flash is particularly popular in the local AI community (LocalLLaMA) because it can be run on consumer-grade hardware while still providing a coherent “thinking” output. This makes it the go-to choice for personal coding assistants or local privacy-focused chatbots. Users have noted that even at smaller parameter sizes, the 4.7-Flash model maintains a logical consistency that older, larger models often lack.

Use Case Scenarios: When to Choose GLM-4.7

Choosing GLM-4.7 is a strategic move for any task where “getting the facts right” through logic is the priority. Because of its massive context window and reasoning traces, it is a superior tool for information-dense professions.

- Software Development: Use GLM-4.7 for refactoring code, writing unit tests, and architecting microservices where the logic must be airtight.
- Legal and Financial Analysis: It is perfect for reviewing long contracts or financial reports, as it can “reason” through the implications of specific clauses.
- Educational Tutoring: Because it can show its “Thinking Process,” it is an ideal tool for teaching students math or science by explaining the steps to a solution.
- Content Strategy: For bilingual marketing, it can ensure that the brand voice is consistent and culturally appropriate across different regions.

Use Case Scenarios: When to Choose GLM-4-5V

GLM-4-5V should be the primary choice whenever the input involves visual information. Its ability to translate visual concepts into structured data is its greatest asset.

- E-commerce Automation: Use GLM-4-5V to automatically generate product descriptions from photos or to categorize inventory based on visual attributes.
- Medical Document Digitization: It can parse complex medical forms and lab results that include both text and anatomical diagrams.
- Smart Surveillance and Monitoring: The model can analyze video frames or still images to detect specific events or describe scenes for security purposes.
- UX/UI Design Feedback: Designers can upload screenshots of their work, and the model can provide critiques on layout, color contrast, and element alignment.

Bilingual Superiority: The Zhipu AI Edge

One area where both models excel over many Western competitors is their native bilingualism. Zhipu AI has deep roots in the Chinese academic and tech community, ensuring that GLM-4.7 and GLM-4-5V are not merely “translating” English logic into Chinese. Instead, they understand the underlying linguistic structures and cultural context of both languages.

In GLM-4.7, this results in reasoning that is equally sharp in both languages, avoiding the “logic degradation” often seen when English-centric models are forced to work in Mandarin. In GLM-4-5V, this means the model can perform OCR on traditional and simplified Chinese characters just as easily as it does on the Latin alphabet, making it a powerful tool for global trade and academic research involving historical Chinese documents.

Infrastructure and Deployment: Local vs. Cloud

For many users, the decision between these models also comes down to how they are deployed. Both GLM-4.7 (specifically the Flash and open-weights versions) and GLM-4-5V can be accessed via the BigModel API, which provides a scalable, cloud-based environment with OpenAI-compatible endpoints. This is the easiest way for businesses to integrate these models into their existing software stacks.
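Because the endpoints are described as OpenAI-compatible, a request can be sketched with nothing but the Python standard library. The example below only prepares the request and does not send it. The base URL is a placeholder, the model identifier `glm-4.7` is a guess at the naming, and `build_chat_request` is a hypothetical helper; the real values should come from the provider's documentation.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Prepare (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL and hypothetical model name, used for illustration only.
    req = build_chat_request(
        "https://api.example.com/v1", "YOUR_API_KEY", "glm-4.7",
        "Summarize the termination clause in two sentences.",
    )
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req), then JSON-decode the response body.
```

In practice, an OpenAI-compatible endpoint also means existing OpenAI client libraries can usually be pointed at it by overriding their base URL, which avoids hand-building requests like this.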

However, for those with privacy concerns or specific hardware setups, local deployment is a growing trend. GLM-4.7-Flash is highly optimized for local inference, requiring relatively modest VRAM to function. In contrast, GLM-4-5V requires more specialized handling due to its vision encoder, but it is increasingly supported by local hosting platforms like Ollama and LM Studio. Being able to run these models locally ensures that sensitive images or proprietary code never leave the user's secure network.

The Future of Zhipu AI’s Model Strategy

Looking forward, the separate reasoning (4.7) and vision (4-5V) lines are likely to converge into a single “Omni” model. We are already seeing hints of this in the way Zhipu AI integrates their models into “agents” that can switch between different versions depending on the task at hand. For now, however, the dual-model approach allows for much higher efficiency.

By keeping the vision components separate from the core reasoning engine, Zhipu AI allows users to pay for only the “intelligence” they need. If a user only needs text-based coding help, they aren't forced to pay the “compute tax” of a vision-heavy model. This modularity is a smart business move that reflects a deep understanding of the diverse needs of the global AI market.

Summary Checklist for Model Selection

To help you make the final decision, use this quick checklist based on your project requirements:

- Choose GLM-4.7 if:
  - Your primary input is text or code.
  - You need the model to “show its work” or think step-by-step.
  - You are analyzing very long documents (up to 128K tokens).
  - You need a fast, local reasoning assistant (via the Flash version).
- Choose GLM-4-5V if:
  - Your input includes images, PDF scans, or diagrams.
  - You need high-accuracy OCR for forms or handwritten notes.
  - You want to analyze visual layouts or charts.
  - You are building an application that needs to “see” and describe the physical world.
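The checklist above can be sketched as a small routing function. This is a simplified illustration, not a production router: it decides purely on the attachment's file extension, treats every PDF as a scan, and uses the model names as written in this article, which may differ from the identifiers an API expects. `Task` and `choose_model` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# Model names as used in this article; real API identifiers may differ.
REASONING_MODEL = "GLM-4.7"
VISION_MODEL = "GLM-4-5V"

# File extensions treated as visual input (illustrative, not exhaustive).
VISUAL_EXTENSIONS = (".png", ".jpg", ".jpeg", ".webp", ".gif", ".bmp", ".tiff", ".pdf")


@dataclass
class Task:
    prompt: str
    attachment: Optional[str] = None  # file name of an attached input, if any


def choose_model(task: Task) -> str:
    """Route visual inputs to the vision model, everything else to the reasoning model."""
    if task.attachment and task.attachment.lower().endswith(VISUAL_EXTENSIONS):
        return VISION_MODEL
    return REASONING_MODEL


if __name__ == "__main__":
    print(choose_model(Task("Refactor this function")))
    print(choose_model(Task("Extract the line items", "invoice_scan.pdf")))
```

A real system would likely inspect the file's content type rather than its name, and might chain the two models, for example extracting text with the vision model and then passing it to the reasoning model.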

Conclusion: A Complementary AI Duo

In conclusion, GLM-4.7 and GLM-4-5V are not competitors; they are a complementary duo that defines the cutting edge of the Zhipu AI ecosystem. GLM-4.7 is the “brain,” providing the deep logical reasoning and bilingual mastery required for complex cognitive tasks. GLM-4-5V is the “eyes,” offering the sophisticated visual comprehension needed to translate the visual world into actionable data.

For most advanced users, the best strategy is to use both. By routing multimodal tasks to GLM-4-5V and reasoning-heavy text tasks to GLM-4.7, you can build an AI system that is both incredibly capable and highly efficient. As Zhipu AI continues to innovate, these two models stand as a testament to the power of specialized, high-performance artificial intelligence.