The AI hardware world is booming, and you’re witnessing history in the making. With a market expected to hit $390.91 billion by 2025, companies like NVIDIA, AMD, and Intel are driving innovation. These AI hardware companies are shaping industries, from healthcare to autonomous vehicles, with groundbreaking technology that’s redefining what’s possible.

Key Takeaways

- NVIDIA is a leading company in AI hardware. Its H100 GPU can cut AI training times from weeks to days, which helps industries like healthcare and self-driving cars.
- AMD’s MI300 chips are a lower-cost option that still performs well on AI workloads while using less power. This makes AI easier for businesses of all sizes to adopt.
- Intel’s Gaudi3 processors are built for big AI projects. They are designed to scale efficiently across large clusters, which matters for companies with huge AI needs.

NVIDIA

Flagship AI Chips and Hardware

When it comes to AI chips, NVIDIA is the name you can’t ignore. Their flagship H100 GPU has become the gold standard in the AI hardware world. This chip, built on the Hopper architecture, has revolutionized AI training. It’s so efficient that it can reduce model training times from weeks to just days. That’s a game-changer for industries relying on AI, like healthcare and autonomous vehicles.

NVIDIA’s dominance doesn’t stop there. The company is set to launch its Blackwell AI chip in 2025. This chip promises exaflop-level performance, making it one of the most powerful AI chips ever created. Whether you’re training massive language models or running complex simulations, NVIDIA’s hardware is designed to handle it all.

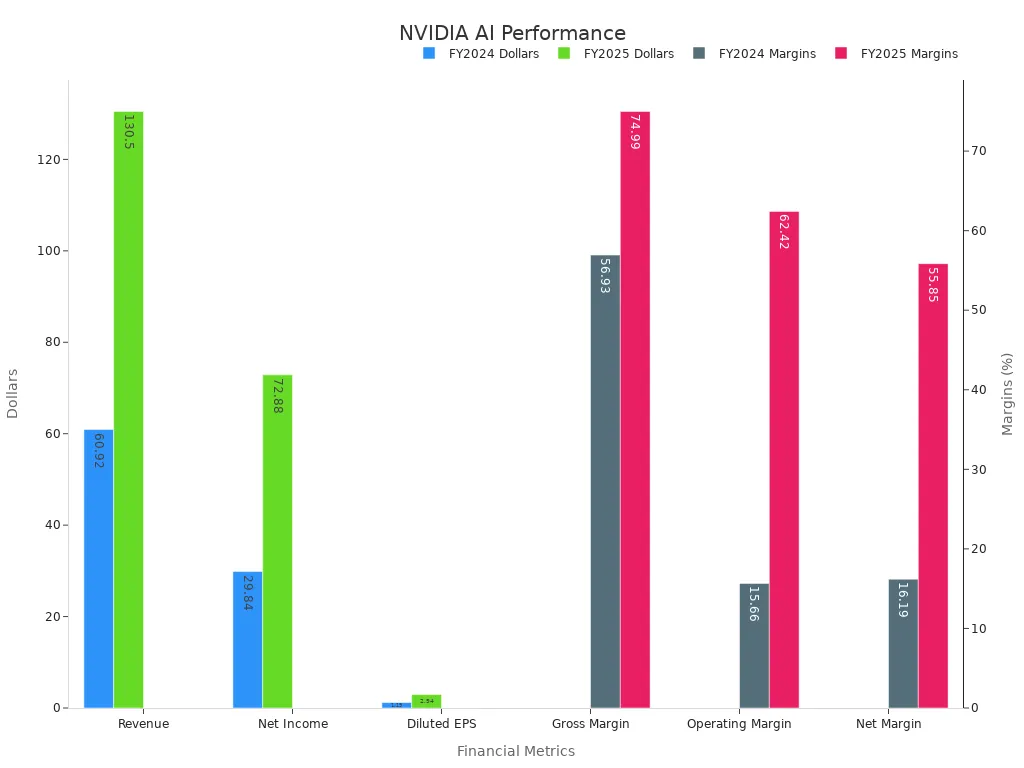

Here’s a quick look at NVIDIA’s financial performance, which reflects its leadership in AI chips and hardware:

| Metric | FY 2024 | FY 2025 | Year-over-Year Growth |
|---|---|---|---|
| Revenue | $60.92 billion | $130.5 billion | +114.2% |
| Net Income | $29.76 billion | $72.88 billion | +144.89% |
| Diluted EPS | $1.19 | $2.94 | +147.06% |
| Gross Margin | 72.72% | 74.99% | N/A |
| Operating Margin | 54.12% | 62.42% | N/A |
| Net Margin | 48.85% | 55.85% | N/A |

These numbers don’t just show growth; they highlight NVIDIA’s unmatched ability to deliver cutting-edge AI hardware.
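If you want to check the growth column yourself, the arithmetic is simple. Here is a minimal sketch that recomputes the year-over-year percentages for revenue and diluted EPS from the figures quoted in the table. The function name `yoy_growth_pct` is just an illustrative choice.

```python
def yoy_growth_pct(previous: float, current: float) -> float:
    """Return year-over-year growth as a percentage of the previous value."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100.0


# Revenue (billions of USD) and diluted EPS (USD) as quoted in the table.
revenue_growth = yoy_growth_pct(60.92, 130.5)
eps_growth = yoy_growth_pct(1.19, 2.94)

print(f"Revenue growth: {revenue_growth:.1f}%")      # 114.2%
print(f"Diluted EPS growth: {eps_growth:.2f}%")      # 147.06%
```

The same helper works for any pair of annual figures, as long as both are in the same units.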

Innovations in AI Hardware

NVIDIA isn’t just about making chips; it’s about pushing boundaries. The Hopper architecture, which powers the H100 GPU, has set a new benchmark for AI chip performance. It’s not just faster; it’s smarter, enabling developers to train AI models more efficiently than ever before.

But that’s not all. NVIDIA’s CUDA ecosystem has over 3.5 million developers actively engaged. This community is a huge advantage, as it ensures continuous innovation and support for NVIDIA’s AI hardware. And with the upcoming Blackwell AI chip, NVIDIA is poised to redefine what’s possible in AI computing.

One of the most exciting trends is the growing demand for the H100 GPU. It’s so popular that it’s created a supply crunch, with prices soaring. This demand underscores the chip’s importance in the AI industry and its role in driving innovation.

Market Leadership in 2025

By 2025, NVIDIA has solidified its position as the leader in AI hardware. The company’s ability to innovate and deliver powerful AI chips has set it apart from competitors. Its financial performance speaks volumes, with revenue and net income more than doubling year-over-year.

NVIDIA’s market leadership isn’t just about numbers. It’s about impact. From enabling breakthroughs in AI research to powering the next generation of consumer devices, NVIDIA is at the forefront of the AI revolution. If you’re looking for the company that’s shaping the future of AI, NVIDIA is the one to watch.

AMD

Flagship AI Chips and Hardware

AMD has made a name for itself in the AI hardware space by delivering high-performance chips that balance power and efficiency. Its flagship MI300 series, launched in December 2023, has become a favorite among AI developers. The MI300A variant combines CPU, GPU, and memory in a single package, while the MI300X is a GPU-only accelerator with a larger memory pool. You’ll find the MI300 excels in tasks like natural language processing and image recognition, making it a versatile choice for various industries.

What sets AMD apart is its focus on scalability. The MI300 chips are designed to handle everything from small-scale AI applications to massive data center operations. This flexibility has made AMD a go-to option for businesses looking to integrate AI into their operations without breaking the bank.

Here’s a quick comparison of AMD’s MI300 series with its competitors:

| Feature | AMD MI300 | NVIDIA H100 | Intel Gaudi 3 |
|---|---|---|---|
| Architecture | CDNA 3 | Hopper | Habana |
| Memory Bandwidth | 5.2 TB/s | 3.6 TB/s | 2.4 TB/s |
| Power Efficiency | High | Moderate | Moderate |
| Target Applications | Versatile | Specialized | Versatile |

This table shows how AMD’s MI300 holds its own against industry giants like NVIDIA and Intel, especially in terms of memory bandwidth and versatility.
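Why does memory bandwidth matter so much? For workloads limited by memory traffic rather than compute, the time to read every model weight once can’t be shorter than model size divided by bandwidth. The sketch below applies that bound to the bandwidth figures in the table. The 70-billion-parameter model and 2-byte weights are illustrative assumptions, not part of the comparison, and the estimate ignores compute time, caching, and whether the model even fits in one device’s memory.

```python
# Memory bandwidth figures (TB/s) as listed in the comparison table.
BANDWIDTH_TBPS = {"AMD MI300": 5.2, "NVIDIA H100": 3.6, "Intel Gaudi 3": 2.4}


def min_weight_read_ms(model_bytes: float, bandwidth_tbps: float) -> float:
    """Lower bound, in milliseconds, on the time to stream all weights once."""
    if bandwidth_tbps <= 0:
        raise ValueError("bandwidth must be positive")
    return model_bytes / (bandwidth_tbps * 1e12) * 1e3


# Hypothetical model: 70 billion parameters stored as 16-bit (2-byte) values.
model_bytes = 70e9 * 2

for chip, bandwidth in BANDWIDTH_TBPS.items():
    bound = min_weight_read_ms(model_bytes, bandwidth)
    print(f"{chip}: at least {bound:.1f} ms per full pass over the weights")
```

Treat the result as a floor, not a prediction: real throughput depends on batch size, kernel quality, and interconnects.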

Innovations in AI Hardware

AMD isn’t just keeping up with the competition—it’s setting trends. The company has invested heavily in AI hardware innovation, focusing on energy efficiency and performance. Its Infinity Architecture allows seamless communication between CPUs and GPUs, reducing latency and boosting overall system performance. This innovation has been a game-changer for AI developers who need reliable and fast hardware.

Another standout feature is AMD’s use of advanced packaging technologies. By stacking memory and processing units closer together, AMD has managed to reduce power consumption while increasing performance. This approach not only makes their chips more efficient but also more environmentally friendly.

You’ll also appreciate AMD’s commitment to open-source software. The ROCm platform provides developers with the tools they need to optimize AI workloads on AMD hardware. This open ecosystem encourages collaboration and ensures that AMD stays at the forefront of AI innovation.

Market Leadership in 2025

By 2025, AMD has firmly established itself as a leader in the AI hardware market. Its ability to deliver high-performance, cost-effective solutions has earned it a loyal customer base. Companies across industries—from healthcare to finance—are relying on AMD’s hardware to power their AI applications.

AMD’s market strategy focuses on accessibility. While competitors like NVIDIA dominate the high-end market, AMD has carved out a niche by offering affordable yet powerful solutions. This approach has made AI technology more accessible to smaller businesses and startups, democratizing the field.

The numbers speak for themselves. AMD’s revenue from AI hardware has grown by over 80% year-over-year, and its market share continues to climb. If you’re looking for a company that combines innovation with affordability, AMD is the one to watch.

Intel

Flagship AI Chips and Hardware

Intel has been making waves in the AI hardware space with its Gaudi3 processors. These chips are designed to deliver exceptional training efficiency, especially in large-scale cluster environments. What makes Gaudi3 stand out is its built-in Ethernet fabric. This feature allows you to scale training throughput almost linearly as you add more nodes. It’s a game-changer for companies running massive AI workloads.
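“Almost linearly” is something you can measure. A common way to express it is scaling efficiency: the throughput you actually get on N nodes divided by N times the single-node throughput. The sketch below shows the calculation. All throughput numbers here are hypothetical placeholders, not Gaudi3 measurements.

```python
def scaling_efficiency(single_node: float, cluster: float, nodes: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    if nodes <= 0 or single_node <= 0:
        raise ValueError("nodes and single_node throughput must be positive")
    return cluster / (single_node * nodes)


# Hypothetical measurements, in training samples per second.
single = 1_000.0
measured = {2: 1_960.0, 8: 7_600.0, 64: 57_600.0}

for nodes, throughput in measured.items():
    efficiency = scaling_efficiency(single, throughput, nodes)
    print(f"{nodes:>3} nodes: {efficiency:.0%} of linear")
```

If the efficiency stays close to 100% as you add nodes, the interconnect is keeping up with the compute.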

When compared to competitors like NVIDIA’s H100 and AMD’s MI300X, Intel’s Gaudi3 excels in cluster settings. While NVIDIA leads in training speeds and AMD offers higher memory for larger models, Gaudi3 focuses on efficiency. This makes it a strong contender for businesses prioritizing cost-effective scaling.

Innovations in AI Hardware

Intel isn’t just keeping up; it’s innovating in ways that matter. The company has invested heavily in optimizing its chips for energy efficiency. This focus not only reduces operational costs but also aligns with growing environmental concerns.

Another standout feature is Intel’s use of advanced interconnect technologies. These innovations ensure faster communication between processors, reducing latency and improving overall performance. If you’re looking for hardware that balances power, efficiency, and scalability, Intel’s solutions are worth considering.

Market Leadership in 2025

By 2025, Intel has carved out a unique position in the AI hardware market. Its focus on scalable, efficient solutions has made it a favorite among enterprises. While competitors dominate other niches, Intel’s Gaudi3 processors have become the go-to choice for large-scale AI training. This strategic focus has solidified Intel’s role as a leader in the industry.

Google

Flagship AI Chips and Hardware

Google has been making waves in AI hardware with its Tensor G2 SoC. This chip, introduced in 2022, combines an 8-core CPU with integrated TPU and GPU capabilities. It’s designed to supercharge AI performance in flagship devices like the Pixel series. You’ll notice how it enhances tasks like voice recognition, real-time translation, and advanced photo editing.

The Tensor G2 isn’t just about raw power. It’s about efficiency. Its architecture optimizes AI workloads while keeping energy consumption low. This balance makes it ideal for consumer devices where battery life matters. With the ASIC segment holding a 25% market share in 2024, Google’s focus on specialized AI applications aligns perfectly with industry trends.

Innovations in AI Hardware

Google doesn’t just follow trends—it sets them. The Tensor G2 chip showcases Google’s commitment to integrating AI hardware seamlessly into everyday devices. Its TPU (Tensor Processing Unit) is a standout feature, enabling faster and smarter AI computations. You’ll appreciate how this innovation makes AI more accessible to consumers.

Another exciting development is Google’s push toward edge computing. By embedding AI capabilities directly into devices, Google reduces reliance on cloud processing. This approach not only speeds up operations but also enhances privacy by keeping data local. It’s a win-win for users and developers alike.

Market Leadership in 2025

By 2025, Google has solidified its position as a leader in AI hardware. Its ability to innovate and deliver practical solutions has earned it a loyal following. The Tensor G2 chip has become a benchmark for AI integration in consumer devices, setting Google apart from competitors.

Google’s focus on edge computing and specialized AI applications has reshaped the market. You’ll see its impact in industries ranging from healthcare to smart home technology. With its forward-thinking approach, Google isn’t just keeping up—it’s leading the charge into the future of AI hardware.

Apple

Flagship AI Chips and Hardware

Apple has been quietly revolutionizing AI hardware with its Baltra AI chip. This chip is part of Apple’s broader strategy to reduce reliance on external suppliers like NVIDIA and AWS. Baltra is designed to integrate seamlessly into Apple’s ecosystem, with the aim of lowering costs while delivering strong performance.

Apple’s collaboration with Broadcom is a key highlight. Together, they’re working on advanced 3.5D XDSiP packaging technology. This innovation improves chip-to-chip communication, boosts bandwidth, and reduces power consumption. You’ll notice how these features make Baltra ideal for AI applications in consumer devices like iPhones and Macs.

Here’s a quick comparison of Apple’s transition:

| Transition | Previous Supplier | Apple Solution | Key Benefits |
|---|---|---|---|
| AI Chips (In Progress) | NVIDIA, AWS | Baltra AI Chip | Cost savings, ecosystem integration |

Innovations in AI Hardware

Apple isn’t just building chips; it’s redefining how AI hardware works. Baltra’s design focuses on efficiency and integration. By leveraging Broadcom’s packaging technology, Apple ensures faster data transfer and lower energy use. This approach aligns perfectly with the growing demand for sustainable tech.

You’ll also appreciate Apple’s focus on user-centric AI. Their chips optimize tasks like facial recognition, voice commands, and real-time translation. These innovations make AI feel more intuitive and accessible in everyday life.

Market Leadership in 2025

By 2025, Apple has cemented its place as a leader in AI hardware. Its ability to innovate while keeping costs down has set it apart. Baltra AI chips have become a cornerstone of Apple’s ecosystem, powering everything from mobile devices to wearables.

Apple’s strategic shift to in-house solutions has reshaped the market. You’ll see its impact in industries like healthcare and entertainment, where AI applications are thriving. With its focus on efficiency and integration, Apple is leading the charge into the future of AI hardware.

Amazon

Flagship AI Chips and Hardware

Amazon has been quietly reshaping the AI hardware landscape with its custom-designed chips. You’ve probably heard of Graviton, Trainium, and Inferentia—each playing a unique role in Amazon’s strategy.

| Chip Name | Purpose | Impact on Market Leadership |
|---|---|---|
| Graviton | General-purpose CPUs | Early entry into chip design, enhancing AWS offerings |
| Trainium | AI training | Cost-effective alternative to NVIDIA's H100 chips |
| Inferentia | AI inference | Democratizes AI access for enterprises |

Trainium, in particular, has caught the industry’s attention. It offers computing power similar to NVIDIA’s H100 but at a significantly lower cost. By undercutting NVIDIA’s pricing by 25%, Amazon has made AI training more accessible for businesses of all sizes. Inferentia chips, on the other hand, focus on AI inference, helping enterprises deploy AI models efficiently. Together, these chips are transforming how companies approach AI workloads.
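What does a 25% price cut mean in practice? Here is a small sketch, assuming the two chips really do deliver the same work per dollar-hour before the discount. The $100,000 baseline is a hypothetical budget chosen for illustration, not an actual quote.

```python
def cost_after_discount(baseline_cost: float, discount: float) -> float:
    """Cost of the same job when the price is reduced by `discount` (0 to 1)."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return baseline_cost * (1.0 - discount)


def compute_multiplier(discount: float) -> float:
    """How much more compute the same budget buys at the discounted price."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return 1.0 / (1.0 - discount)


DISCOUNT = 0.25              # the 25% figure quoted in the text
baseline_cost = 100_000.0    # hypothetical training budget in USD

discounted = cost_after_discount(baseline_cost, DISCOUNT)
multiplier = compute_multiplier(DISCOUNT)

print(f"Same job at the lower price: ${discounted:,.0f}")
print(f"Same budget buys {multiplier:.2f}x the compute")
```

Notice that a 25% lower price stretches a fixed budget by about a third, which is often the more useful way to think about it.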

Innovations in AI Hardware

Amazon isn’t just competing—it’s innovating. Its chips are designed to address real-world challenges like cost and scalability. Trainium chips mitigate GPU shortages, allowing you to train AI models without breaking the bank. Inferentia democratizes AI by making it affordable for smaller enterprises.

Amazon’s focus on cloud-based AI solutions is another game-changer. By integrating its chips into AWS, Amazon enables businesses to scale their AI operations effortlessly. You don’t need to worry about hardware constraints; Amazon’s infrastructure handles it for you. This approach is perfect for companies looking to adopt AI without investing heavily in physical hardware.

Market Leadership in 2025

By 2025, Amazon has emerged as a major player in the AI hardware market. Its strategy of offering cost-effective alternatives to industry giants like NVIDIA has paid off. Trainium and Inferentia chips have become staples in cloud-based AI solutions, helping Amazon carve out a significant market share.

Amazon’s ability to innovate while keeping costs low has made AI accessible to a broader audience. Whether you’re a startup or a large enterprise, Amazon’s hardware solutions empower you to harness the power of AI. With its focus on affordability and scalability, Amazon is shaping the future of AI hardware.

Qualcomm

Flagship AI Chips and Hardware

Qualcomm has been a game-changer in AI hardware, especially in mobile and edge computing. Its Snapdragon processors, particularly the Snapdragon 8 Gen 3, have set new benchmarks for AI performance in smartphones. You’ll find these chips powering flagship devices, delivering lightning-fast AI capabilities for tasks like real-time translation, advanced photography, and voice recognition.

But Qualcomm isn’t stopping there. Its Cloud AI 100 chip has made waves in data centers. This chip is designed for AI inference workloads, offering high performance with low power consumption. Whether you’re running AI models on the cloud or at the edge, Qualcomm’s hardware ensures you get the best of both worlds—speed and efficiency.

Innovations in AI Hardware

Qualcomm’s innovations focus on making AI accessible and efficient. One standout feature is its AI Engine, which integrates seamlessly into Snapdragon processors. This engine optimizes AI tasks, so your devices can handle complex computations without draining the battery.

Another exciting development is Qualcomm’s push into on-device AI. By embedding AI capabilities directly into hardware, Qualcomm reduces the need for cloud processing. This approach not only speeds up operations but also enhances privacy by keeping data local.

Tip: If you’re looking for AI hardware that balances performance and energy efficiency, Qualcomm’s solutions are worth exploring.

Market Leadership in 2025

By 2025, Qualcomm has solidified its position as a leader in mobile and edge AI hardware. Its Snapdragon processors dominate the smartphone market, while the Cloud AI 100 chip has gained traction in enterprise applications.

Qualcomm’s focus on energy-efficient, on-device AI has reshaped the industry. You’ll see its impact in everything from smart home devices to autonomous vehicles. With its innovative approach, Qualcomm is driving the future of AI hardware forward.

Graphcore

Flagship AI Chips and Hardware

Graphcore has been making waves in the AI hardware world with its Colossus Mk2 IPU (Intelligence Processing Unit). This chip is a powerhouse, designed to handle massive parallel processing. It’s perfect for complex AI tasks like natural language processing and advanced machine learning models. You’ll find that the Colossus Mk2 excels in efficiency, making it a favorite for researchers and developers working on cutting-edge AI projects.

What sets Graphcore apart is its focus on specialized AI hardware. The Colossus Mk2 isn’t just fast—it’s smart. Its architecture is built to optimize workloads, ensuring you get the best performance without wasting resources. Whether you’re running AI in a cloud data center or at the edge, Graphcore’s hardware delivers.

Innovations in AI Hardware

Graphcore doesn’t just follow trends; it creates them. The Colossus Mk2 IPU is a prime example of this. Its advanced architecture supports massive parallel processing, which is crucial for today’s AI research. This innovation allows you to tackle complex problems faster and more efficiently.

The company’s commitment to pushing boundaries doesn’t stop there. In 2022, Graphcore raised $222 million in Series E funding, bringing its total funding to over $710 million. This investment has fueled the development of new AI chipsets, expanding their reach into cloud data centers and edge computing. It’s clear that Graphcore is all-in on shaping the future of AI hardware.

Market Leadership in 2025

By 2025, Graphcore has cemented its place as a leader in AI hardware. Its Colossus Mk2 IPU has become a go-to solution for businesses and researchers alike. The chip’s ability to handle complex AI workloads with unmatched efficiency has set it apart from competitors.

Graphcore’s strategic focus on innovation and funding has paid off. Its hardware is now a staple in industries ranging from healthcare to autonomous vehicles. If you’re looking for a company that’s redefining AI hardware, Graphcore is one to watch.

Cerebras Systems

Flagship AI Chips and Hardware

Cerebras Systems has taken AI hardware to a whole new level with its Wafer Scale Engine (WSE-3). This chip isn’t just big—it’s revolutionary. Imagine 900,000 AI-optimized cores packed into a single wafer. That’s what makes the WSE-3 stand out. It’s designed to handle massive AI workloads, reducing training times from weeks to just days or even hours.

The CS-3 system, powered by WSE-3, delivers 125 petaflops of AI performance. It can handle models with up to 24 trillion parameters, making it perfect for cutting-edge applications like molecular dynamics and epigenomics. For example, it can simulate 1.1 million steps per second in molecular dynamics—748 times faster than a supercomputer.
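To put the molecular dynamics claim in perspective, you can back out the baseline it implies. This sketch only rearranges the two numbers quoted above (1.1 million steps per second and a 748x speedup). It doesn’t tell you which supercomputer or simulation was used.

```python
CEREBRAS_STEPS_PER_SECOND = 1.1e6   # figure quoted in the text
SPEEDUP = 748                       # "748 times faster", as quoted
SECONDS_PER_DAY = 24 * 60 * 60

# Simulation rate of the comparison system implied by the two figures.
baseline_steps_per_second = CEREBRAS_STEPS_PER_SECOND / SPEEDUP

print(f"Implied baseline: {baseline_steps_per_second:,.0f} steps/second")
print(f"Steps per day at the quoted rate: {CEREBRAS_STEPS_PER_SECOND * SECONDS_PER_DAY:.3e}")
print(f"Steps per day at the baseline: {baseline_steps_per_second * SECONDS_PER_DAY:.3e}")
```

In other words, the comparison system would manage roughly 1,470 steps per second on the same problem.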

Here’s a quick look at what makes Cerebras’ hardware so impressive:

| Metric | Value |
|---|---|
| AI-optimized cores in WSE-3 | 900,000 |
| On-chip SRAM memory | 44 GB |
| Memory bandwidth | 21 petabytes/second |
| Training time reduction | Weeks to days or hours |
| Price-performance improvement | Up to 100x better than GPUs |

Innovations in AI Hardware

Cerebras isn’t just building chips; it’s rewriting the rules of AI hardware. The WSE-3’s wafer-scale design is a game-changer. It eliminates bottlenecks by integrating memory and processing units directly on the chip. This innovation boosts memory bandwidth to a staggering 21 petabytes per second.
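How fast is 21 petabytes per second? One way to get a feel for it is to ask how long it would take to read the entire 44 GB of on-chip SRAM once at that rate. The sketch below does the division, assuming decimal units (1 GB = 10^9 bytes, 1 PB = 10^15 bytes) and treating the quoted bandwidth as sustained, which is an idealization.

```python
SRAM_BYTES = 44e9                  # 44 GB on-chip SRAM, as quoted
BANDWIDTH_BYTES_PER_S = 21e15      # 21 petabytes/second, as quoted

# Idealized time to read every byte of on-chip memory once.
sweep_seconds = SRAM_BYTES / BANDWIDTH_BYTES_PER_S
sweep_us = sweep_seconds * 1e6

print(f"One full pass over on-chip memory: about {sweep_us:.2f} microseconds")
```

That works out to roughly two microseconds, which is why keeping weights on-chip removes the usual memory bottleneck.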

Another standout feature is its near-linear scalability. You can connect multiple WSE-3 chips to create a Wafer-Scale Cluster, which scales effortlessly as your AI needs grow. This flexibility makes Cerebras ideal for industries like healthcare and scientific research.

Tip: If you’re tackling massive AI models or simulations, Cerebras’ hardware can save you time and resources.

Market Leadership in 2025

By 2025, Cerebras Systems has become a leader in specialized AI hardware. Its focus on wafer-scale technology has set it apart from competitors. You’ll see its impact in industries that demand high-performance computing, like pharmaceuticals and climate modeling.

Cerebras’ ability to deliver unmatched speed and efficiency has earned it a loyal customer base. Its hardware isn’t just faster—it’s smarter, offering up to 100x better price-performance than traditional GPUs. If you’re looking for a company that’s redefining AI hardware, Cerebras Systems is leading the charge.

Groq

Flagship AI Chips and Hardware

Groq has been making waves in the AI hardware world with its innovative chip architecture. Its chips are designed to deliver real-time AI inference speeds that set a new industry benchmark. You’ll find these chips excelling in applications where speed and precision are critical, like autonomous vehicles and real-time analytics.

What makes Groq’s hardware unique is its simplicity. Instead of relying on traditional multi-core designs, Groq uses a single-threaded architecture. This approach eliminates bottlenecks and ensures consistent performance, even under heavy workloads. If you’re working on AI models that demand low latency and high throughput, Groq’s chips are a perfect fit.

Innovations in AI Hardware

Groq isn’t just keeping up with the competition—it’s redefining the game. Its novel chip design focuses on real-time processing, which is a game-changer for industries like healthcare and finance. You’ll appreciate how Groq’s architecture simplifies AI model deployment, making it easier to scale your operations.

The competitive landscape in AI hardware is fierce, with significant investments from both startups and established players. Groq stands out by focusing on efficiency and speed. Here’s a quick look at how Groq is shaping the market:

| Evidence Description |
|---|
| Groq has introduced a novel chip architecture that delivers real-time AI inference speed, setting a new benchmark in the industry. |
| The competitive landscape shows significant investments from both established companies and startups, indicating a dynamic market. |

Market Leadership in 2025

By 2025, Groq has positioned itself as a leader in AI hardware. Its focus on real-time inference and simplified architecture has earned it a loyal customer base. You’ll see Groq’s chips powering everything from autonomous drones to advanced robotics.

Groq’s ability to innovate while addressing real-world challenges has set it apart. Its hardware isn’t just fast—it’s reliable and scalable. If you’re looking for cutting-edge AI solutions, Groq is a name you can trust.

Industry Trends in AI Hardware

Rise of Custom AI Chips

You’ve probably noticed how AI hardware is becoming more specialized. Custom AI chips are leading this shift, designed to handle specific tasks like high-performance inference or training large-scale generative models. These chips are tailored for deep learning and other AI applications, making them faster and more efficient than general-purpose processors.

The AI chip market is growing rapidly. The inference segment alone is expected to grow at a CAGR of 30.38%, showing how much demand there is for custom solutions. Generative AI technology is also driving this trend, holding a 24% market share. This growth reflects the increasing complexity of AI models and the need for accelerators that can handle them.
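A CAGR of 30.38% can be hard to picture. One quick translation is the doubling time: how many years of compounding at that rate it takes for the segment to double. Here is a minimal sketch, assuming the rate stays constant, which real markets rarely do.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    if annual_growth_rate <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log(1.0 + annual_growth_rate)


INFERENCE_CAGR = 0.3038  # the 30.38% figure quoted in the text

doubling_years = doubling_time_years(INFERENCE_CAGR)
print(f"Doubling time at {INFERENCE_CAGR:.2%} CAGR: about {doubling_years:.1f} years")
```

At that pace the inference segment would double in a little over two and a half years.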

Focus on Energy Efficiency

Energy efficiency is a big deal in AI infrastructure. Companies are racing to create energy-efficient chips that reduce costs and environmental impact. NVIDIA, for example, has improved the energy efficiency of its GPUs by an incredible 45,000 times over the last eight years. Innovations like TensorRT-LLM can cut energy use for large language model inference by three times.

Switching from CPU-only systems to GPU-accelerated ones can save over 40 terawatt-hours of energy annually. That’s enough to power nearly 5 million U.S. homes! These advancements aren’t just about saving energy—they’re also about boosting AI performance while keeping operations sustainable.
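You can sanity-check the homes comparison with one division. The sketch below converts 40 terawatt-hours into kilowatt-hours and spreads it across 5 million homes to see what annual usage per home the claim implies. It uses only the two numbers quoted above.

```python
KWH_PER_TWH = 1e9  # 1 terawatt-hour = 1 billion kilowatt-hours


def kwh_per_home(total_twh: float, homes: int) -> float:
    """Annual energy per home implied by a total in TWh shared across homes."""
    if homes <= 0:
        raise ValueError("homes must be positive")
    return total_twh * KWH_PER_TWH / homes


implied_kwh = kwh_per_home(40, 5_000_000)
print(f"Implied usage: {implied_kwh:,.0f} kWh per home per year")
```

The claim implies about 8,000 kWh per home per year, so whether “nearly 5 million” holds depends on the household average you compare against.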

Integration of AI Hardware in Consumer Devices

AI hardware is becoming a part of your everyday life. From smartphones to smart home devices, AI products are everywhere. In 2023, smartphones captured over 36.5% of the Edge AI hardware market. Consumer electronics also held a significant share, exceeding 21.3%.

This trend is all about making AI accessible. Devices powered by AI accelerators can perform tasks like real-time translation and advanced photography. These features make your gadgets smarter and more intuitive, enhancing your daily experiences.

Growth of AI in Edge Computing

Edge computing is transforming how AI solutions are deployed. Instead of relying on cloud servers, AI models now run directly on devices. This approach reduces latency and improves privacy by keeping data local.

The Edge AI market is booming. It’s projected to grow from USD 19 billion in 2023 to USD 163 billion by 2033, with a CAGR of 24.1%. Industries like automotive, healthcare, and manufacturing are driving this growth. They rely on edge AI infrastructure for real-time data processing and decision-making.
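Curious how a CAGR like this is derived? It’s the constant yearly rate that takes the starting value to the ending value over the period. Here is a minimal sketch using the two market sizes quoted above and a ten-year span from 2023 to 2033.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# USD billions, as quoted: 19 in 2023 growing to 163 by 2033.
edge_ai_cagr = cagr(19.0, 163.0, 10)
print(f"Implied CAGR: {edge_ai_cagr:.1%}")
```

The result lands at roughly 24%, in line with the quoted 24.1%. The small gap may come from rounded endpoints or a slightly different base year in the underlying forecast.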

Edge computing isn’t just a trend—it’s the future. It’s making AI faster, more reliable, and more secure, paving the way for innovative applications across industries.

The top AI hardware companies have reshaped innovation in 2025. From NVIDIA’s exaflop-level GPUs to Apple’s specialized Neural Engines, each company has driven breakthroughs. Together, they’ve powered industries like healthcare and robotics. Looking ahead, advancements like Qualcomm’s energy-efficient chips and Intel’s faster processors promise a future where AI hardware transforms everyday life.

| Company | Contribution | Key Product/Feature |
|---|---|---|
| Alphabet | Focus on powerful AI chips for large-scale projects | Cloud TPU v5p with 8,960 chips, TPU v6e with 4.7x performance increase, Willow quantum chip with 105 qubits |
| AMD | New CPU microarchitecture and AI GPU accelerators | Zen 5 architecture, Ryzen 9000 Series, Instinct MI300 Series with 6 TB/s bandwidth |
| Apple | Development of specialized AI cores and chips | M1 chip with 3.5x performance increase, M4 chip with 3x faster Neural Engine |
| Tenstorrent | Creation of scalable AI hardware products | Wormhole processors and Galaxy servers for network AI |

Note: The future of AI hardware lies in faster, energy-efficient solutions. Qualcomm’s Snapdragon X Plus and Samsung’s HBM3E memory chips are just the beginning of a new era in AI innovation.

FAQ

What is the most energy-efficient AI hardware in 2025?

NVIDIA’s H100 GPU and AMD’s MI300 chips lead in energy efficiency. They reduce power consumption while delivering top-tier performance for AI workloads. 🌱

How do custom AI chips differ from general-purpose processors?

Custom AI chips are designed for specific tasks like deep learning. They’re faster and more efficient than general-purpose processors, which handle a broader range of operations.

Which company focuses on AI hardware for consumer devices?

Apple and Qualcomm specialize in consumer AI hardware. Apple’s Baltra chip and Qualcomm’s Snapdragon processors power smartphones, wearables, and other smart devices. 📱