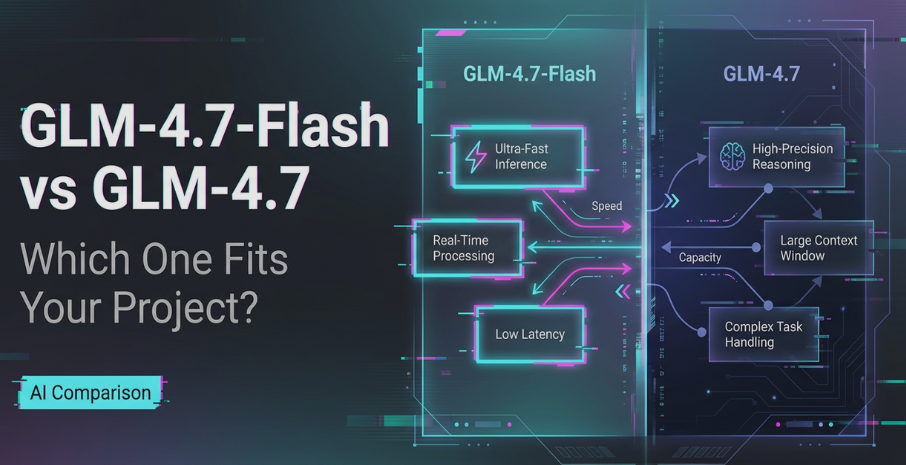

For developers and enterprises in 2026, GLM-4.7, particularly its “Flash” variant, represents the most significant breakthrough in open-weights AI reasoning and local coding assistance. Developed by Zhipu AI, GLM-4.7 distinguishes itself by building a “Thinking Process” (chain-of-thought reasoning) directly into its inference cycle. This allows the model to reason through complex logical, mathematical, and coding problems before delivering an answer. For those seeking an alternative to closed-source models like GPT-4o or Claude 3.5, GLM-4.7 Flash offers a near-frontier experience with the added benefits of local deployment, native fluency in both English and Chinese, and a large context window sized for 2026-era hardware.

The Evolution of the General Language Model (GLM) 4.7

The release of GLM-4.7 marks a pivotal moment in the AI landscape: a shift from answering immediately toward “deliberative reasoning.” While previous iterations of the GLM series were already respected for their bilingual capabilities, version 4.7 introduces architectural refinements that prioritize reasoning quality over raw parameter count. By refining how the model handles attention and its internal “scratchpad,” Zhipu AI has created a model that doesn't just chat; it solves problems.

In the competitive environment of 2026, where model efficiency is as important as accuracy, GLM-4.7 sits at the intersection of high performance and low latency. The model has been trained on a curated dataset that emphasizes high-quality reasoning traces, allowing it to mimic the “System 2” thinking process of humans. This makes it particularly effective for tasks that require multi-step planning, such as software architecture design or complex legal analysis.

Understanding the “Thinking Process” (Chain of Thought)

The standout feature of GLM-4.7 is its visible “Thinking” mode, which sets it apart from traditional “instant response” models. When presented with a difficult prompt, the model generates an internal monologue in which it breaks the problem into sub-tasks, identifies potential pitfalls, and iterates on its own logic. This process is not just a UI gimmick: the behavior is learned during training and encoded in the model’s weights, and working through intermediate steps this way can substantially reduce hallucinations on multi-step problems.

For the end user, this “Thinking” phase provides transparency that was previously missing in AI interactions. When the model is asked to track down a complex bug, the user can see it “considering” different libraries or “recalling” specific syntax rules. This transparency builds trust and lets developers pinpoint exactly where the AI’s logic diverged from the intended goal, tightening the human-AI collaborative loop.
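To work with the reasoning programmatically, you usually need to separate it from the final answer. The sketch below assumes the runtime returns the monologue wrapped in `<think>...</think>` tags, a convention several open reasoning models use; some OpenAI-compatible APIs instead return it in a separate field (for example `reasoning_content`), so check what your host actually emits. `split_thinking` is a hypothetical helper name.

```python
import re
from typing import Tuple

# Assumption: reasoning arrives inline, wrapped in <think>...</think> tags.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(raw: str) -> Tuple[str, str]:
    """Return (thinking, answer) extracted from a raw completion string."""
    # Collect every thinking block (some outputs contain more than one).
    thoughts = [m.strip() for m in THINK_RE.findall(raw)]
    # Whatever remains after removing the tagged blocks is the visible answer.
    answer = THINK_RE.sub("", raw).strip()
    return "\n\n".join(thoughts), answer
```

Keeping the two parts separate lets you log or display the reasoning for review while passing only the answer to downstream tools.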

GLM-4.7 Flash: The Ultimate Local Coding Assistant

The “Flash” variant of GLM-4.7 is designed for the 2026 developer who values speed and privacy. While the full GLM-4.7 model is a heavyweight contender for enterprise servers, the Flash version is optimized to run on consumer-grade hardware while maintaining surprisingly high reasoning scores. It is currently a leading recommendation for local IDE integrations (like VS Code or Cursor), where low-latency code completion is essential.

- Logic-Heavy Coding: Unlike smaller models that often “guess” syntax, GLM-4.7 Flash uses its reasoning to check that code is syntactically correct and logically sound before emitting it.
- Reduced “Lazy Coding”: The model is tuned to provide complete, production-ready functions rather than the “placeholders” that plagued earlier AI assistants.
- Bilingual Documentation: It excels at generating documentation in both English and Chinese, making it a favorite for global engineering teams.
- Low VRAM Footprint: Thanks to mature 4-bit and 6-bit quantization techniques, the Flash model can run comfortably on GPUs with 12GB to 16GB of VRAM.
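Whether a quantized model fits in 12GB to 16GB of VRAM comes down to simple arithmetic: each weight takes `bits / 8` bytes. The helper below is a back-of-the-envelope sketch; the parameter count in the example is a hypothetical placeholder rather than GLM-4.7-Flash's published size, and the 10% overhead factor is an assumption.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float, overhead: float = 1.1) -> float:
    """Rough memory needed to hold model weights, in GB (1 GB = 1e9 bytes).

    bytes = parameters * bits per weight / 8. `overhead` is an assumed
    fudge factor for quantization scales and runtime buffers, not a measurement.
    """
    return num_params * bits_per_weight / 8 / 1e9 * overhead


if __name__ == "__main__":
    params = 9e9  # hypothetical parameter count, for illustration only
    for bits in (4, 6, 8, 16):
        print(f"{bits:>2}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

Real quantization formats also store per-block scales, so the effective bits per weight usually sit a little above the nominal 4 or 6, and the KV cache for long contexts needs memory on top of the weights.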

Context Window and Long-Form Processing

In 2026, the “Context Window Wars” have largely settled, and GLM-4.7 arrives with a large capacity for long-form data. Whether you are feeding it an entire documentation library or a 50-file codebase, the model maintains high “needle-in-a-haystack” retrieval accuracy. This means it doesn't just “remember” that a piece of information appeared at the beginning of a prompt; it can use that information to inform its reasoning at the very end of its output.
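Needle-in-a-haystack tests hide one fact (the needle) at a chosen depth inside long filler text, then ask the model to retrieve it. A minimal sketch of building such a test follows; the function names are hypothetical, and the substring check is a crude scoring rule chosen for simplicity.

```python
from typing import List


def build_haystack(filler: List[str], needle: str, depth: float) -> str:
    """Insert `needle` among filler sentences at a relative depth.

    depth=0.0 places it at the start of the context, depth=1.0 at the very end.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = list(filler)
    position = round(depth * len(sentences))
    sentences.insert(position, needle)
    return " ".join(sentences)


def needle_recalled(answer: str, expected: str) -> bool:
    """Crude check: does the model's answer contain the expected fact?"""
    return expected.lower() in answer.lower()
```

A typical run sweeps `depth` from 0.0 to 1.0 and grows the filler toward the context limit, sends each haystack plus a question such as "What is the secret code?" to the model, and records `needle_recalled` for every combination.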

This expanded context is particularly useful for RAG (Retrieval-Augmented Generation) workflows. Instead of needing to aggressively chunk your data into tiny pieces, you can provide larger “blocks” of context to GLM-4.7. This preserves the semantic relationships between different parts of your data, leading to much more coherent and contextually aware answers than models limited to smaller windows.
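With a long context window, the main change to a RAG pipeline is the chunk size. A minimal sketch of an overlapping chunker follows; it approximates tokens with whitespace-separated words (an assumption; a real pipeline would count tokens with the model's own tokenizer), and the default sizes are illustrative, not tuned values.

```python
from typing import List


def chunk_text(text: str, max_tokens: int = 2000, overlap: int = 200) -> List[str]:
    """Split text into overlapping chunks of at most `max_tokens` words.

    Whitespace-separated words stand in for tokens here.
    """
    if overlap >= max_tokens:
        raise ValueError("overlap must be smaller than max_tokens")
    words = text.split()
    chunks = []
    step = max_tokens - overlap  # how far each new chunk advances
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # this chunk already reached the end of the text
    return chunks
```

Raising `max_tokens` from a few hundred to several thousand keeps related sections together in one chunk, preserving the semantic relationships the paragraph above describes.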

Comparative Benchmarks: GLM-4.7 vs. The World

When comparing GLM-4.7 to its 2026 peers, the data reveals a model that punches significantly above its weight class. In standard reasoning benchmarks like MMLU (Massive Multitask Language Understanding) and HumanEval (Coding), GLM-4.7 Flash often rivals the performance of models twice its size. Specifically, in math-heavy evaluations, its “Thinking” process allows it to outperform models that lack explicit Chain of Thought training.

- vs. Llama 3.x: While Llama remains the king of raw throughput, GLM-4.7 is often cited as being “smarter” for complex, instruction-heavy tasks.
- vs. GPT-4o-mini: GLM-4.7 Flash offers a more robust “reasoning” experience, particularly in technical fields where GPT-4o-mini might prioritize brevity over depth.
- vs. Qwen 2.5/3: The competition between Zhipu AI and Alibaba’s Qwen is fierce; however, GLM-4.7 is generally preferred for its unique “Thinking” output which aids in debugging.
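HumanEval scores are usually reported as pass@k: the probability that at least one of k sampled solutions passes the problem's unit tests. The standard unbiased estimator from the original HumanEval paper draws n ≥ k samples per problem, counts the c that pass, and computes 1 − C(n−c, k) / C(n, k); averaging over problems gives the benchmark score. A minimal Python version:

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

When comparing published numbers, check that they use the same k and sampling settings; a pass@1 figure and a pass@10 figure are not directly comparable.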

Native Bilingualism: A Strategic Advantage

For organizations operating in both Western and Eastern markets, GLM-4.7 is the gold standard. Most models are “English-first,” with other languages receiving far less training attention. Zhipu AI has trained GLM-4.7 with a deep, native understanding of English and Chinese cultural nuances, idioms, and technical terminology.

This bilingualism extends to coding as well. The model can read requirements or comments written in Chinese and produce Python code, or take English specifications and produce code annotated in Chinese, without the logic getting “lost in translation.” For companies with cross-border development teams, this removes a significant friction point in code review and documentation, preserving the intent of the code regardless of the language its original author used.

Local Deployment: Ollama, LM Studio, and Beyond

The 2026 AI movement is defined by the shift toward local execution, and GLM-4.7 is a “first-class citizen” in this ecosystem. Because it is an open-weights model, it has been rapidly integrated into popular local hosting tools. Running GLM-4.7-Flash locally ensures that your proprietary code or sensitive personal data never leaves your machine, a requirement for many corporate security policies.

- Ollama Integration: You can download and run the model with a single `ollama run glm4.7-flash` command, making it accessible even to non-technical users.
- LM Studio Compatibility: For those who prefer a GUI, LM Studio supports the GGUF versions of GLM-4.7, allowing easy adjustment of system prompts and context limits.
- API Parity: Zhipu AI’s BigModel API accepts OpenAI-style API calls, and local hosts such as Ollama and LM Studio also expose OpenAI-compatible endpoints, so developers can switch from cloud to local (and back) with minimal code changes.
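Because both cloud and local targets speak the OpenAI chat-completions format, switching between them can be reduced to changing a base URL and a model name. The sketch below uses only the Python standard library. The Ollama address is its default local OpenAI-compatible endpoint; the BigModel base URL and both model identifiers are assumptions to verify against Zhipu AI's documentation and your `ollama list` output. `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed endpoints and model names; verify both before use.
TARGETS = {
    "local": {"base_url": "http://localhost:11434/v1", "model": "glm4.7-flash"},
    "cloud": {"base_url": "https://open.bigmodel.cn/api/paas/v4", "model": "glm-4.7"},
}


def build_chat_request(target: str, prompt: str, api_key: str = "") -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions POST for the chosen target."""
    cfg = TARGETS[target]
    body = {
        "model": cfg["model"],
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # a local Ollama server needs no key; the cloud API does
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=cfg["base_url"] + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With a local server running, `send(build_chat_request("local", "Why does this loop never terminate?"))` returns the reply; switching to `"cloud"` plus an API key is the only change needed to go the other way.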

The Hardware Reality for 2026 Local AI

While GLM-4.7-Flash is efficient, running reasoning models locally still requires reasonably modern hardware. In 2026, the baseline for a smooth experience is an NVIDIA RTX 40-series or 50-series GPU, or an Apple Silicon Mac with at least 32GB of Unified Memory. Because the “Thinking” process generates many extra tokens (the internal monologue), and producing each token requires reading the model's weights from memory, memory bandwidth is the primary bottleneck for performance.
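The bandwidth argument can be made concrete with a rough upper bound. The sketch below assumes decoding is purely memory-bound and that the bytes read per token equal the quantized size of the active parameters (for a mixture-of-experts model, only the active experts); all numbers in the example are hypothetical.

```python
def max_decode_tokens_per_s(bandwidth_gb_s: float, bytes_read_per_token_gb: float) -> float:
    """Upper bound on generation speed when decoding is memory-bandwidth bound.

    Each new token requires reading (roughly) every active weight once, so
    tokens/s <= bandwidth / bytes read per token. Real throughput is lower.
    """
    return bandwidth_gb_s / bytes_read_per_token_gb


def thinking_delay_s(thinking_tokens: int, tokens_per_s: float) -> float:
    """Seconds spent on the internal monologue before the answer starts."""
    return thinking_tokens / tokens_per_s


if __name__ == "__main__":
    # Hypothetical: ~1000 GB/s of bandwidth and ~5 GB of weights read per token.
    tps = max_decode_tokens_per_s(1000, 5)
    delay = thinking_delay_s(1500, tps)
    print(f"<= {tps:.0f} tokens/s; 1,500 thinking tokens take >= {delay:.1f} s")
```

Halving the bytes read per token (for example, by moving from 8-bit to 4-bit weights) roughly doubles this ceiling, which is why quantization helps speed as well as capacity.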

If you are running the model on a Mac, the Unified Memory architecture lets the GPU address a large share of system RAM, enabling much larger context windows than a typical PC with a single GPU. For PC users, dual-GPU setups or the latest “AI PCs” with dedicated NPUs (Neural Processing Units) are becoming the standard way to keep the model’s “Thinking” from introducing too much lag into the coding workflow.
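Memory capacity governs context length mainly through the attention KV cache, which grows linearly with the number of tokens. A back-of-the-envelope sketch follows; the layer count, KV-head count, and head dimension in the example are hypothetical placeholders, not GLM-4.7's published configuration, and runtimes that quantize the cache will use less.

```python
def kv_cache_gb(num_layers: int, num_kv_heads: int, head_dim: int,
                context_tokens: int, bytes_per_value: int = 2) -> float:
    """Memory for the attention KV cache, in GB (1 GB = 1e9 bytes).

    Each layer stores one key and one value vector per KV head per token,
    hence the factor of 2. bytes_per_value=2 corresponds to fp16/bf16.
    """
    total_bytes = 2 * num_layers * num_kv_heads * head_dim * context_tokens * bytes_per_value
    return total_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical architecture, for illustration only.
    for ctx in (8_192, 32_768, 131_072):
        print(f"{ctx:>7} tokens: ~{kv_cache_gb(40, 8, 128, ctx):.1f} GB")
```

With these placeholder values, a 131,072-token context needs roughly 21 GB for the cache alone, on top of the weights, which is why a large pool of Unified Memory can hold contexts that do not fit on a single consumer GPU.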

Best Practices for Prompting GLM-4.7

To get the most out of GLM-4.7’s reasoning capabilities, your prompting strategy should shift from “asking” to “directing.” Because the model has a native chain of thought, you can explicitly tell it how to think. Phrases like “Think step-by-step,” “Evaluate three different approaches,” or “Identify potential security flaws before writing the code” steer its reasoning toward those specific checks.

Another 2026 best practice is optimizing the system prompt. By defining the model as a “Senior Staff Engineer” or a “Rigorous Security Auditor” in your local configuration, you influence the “Thinking” monologue. A model defined as a “Staff Engineer” will spend more of its internal monologue considering system scalability, whereas a “Junior Dev” persona might focus more on immediate syntax correctness.
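In code, the persona and the thinking directives both live in the system message of an OpenAI-style chat request. A minimal sketch; `build_messages` is a hypothetical helper, and the directive wording is illustrative rather than a documented GLM-4.7 prompt format.

```python
from typing import Dict, List, Optional


def build_messages(persona: str, task: str,
                   directives: Optional[List[str]] = None) -> List[Dict[str, str]]:
    """Return a chat message list with a persona and optional reasoning directives."""
    system = f"You are a {persona}."
    if directives:
        # Explicit instructions about *how* to reason before answering.
        system += " Before answering: " + " ".join(directives)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": task},
    ]


messages = build_messages(
    "Rigorous Security Auditor",
    "Review this login handler for vulnerabilities.",
    ["Think step-by-step.", "Identify potential security flaws before writing the code."],
)
```

The resulting list can be passed as the `messages` field of any OpenAI-compatible chat request, local or cloud.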

Key Use Cases for GLM-4.7 in 2026

The versatility of the 4.7 series allows it to be deployed across a range of professional scenarios where “standard” AI often falls short.

- Complex Refactoring: Handing the model a “spaghetti code” legacy function and asking it to refactor it into clean, modular, and typed code.
- Mathematical Proofs: Its reasoning engine is highly capable of handling the symbolic logic required for advanced mathematics.
- Legal & Compliance Analysis: Feeding the model a 200-page contract and asking it to “think” through the potential liabilities under a specific jurisdiction's rules.
- Autonomous Agents: Using GLM-4.7 as the “brain” for an agent that needs to navigate a file system, execute terminal commands, and verify its own results.
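The agent pattern in the last item boils down to a loop: the model proposes an action, the host runs it, and the output goes back into the conversation until the model decides it has verified the result. The sketch below is hypothetical throughout: the action format, the command allowlist, and the `propose` callback (which would wrap a call to GLM-4.7) are assumptions, not a GLM-4.7 API.

```python
import shlex
import subprocess
from typing import Callable, Dict, List

# Hypothetical action format: {"cmd": "..."} to run a command, or
# {"done": True, "answer": "..."} once the model has checked its work.
Action = Dict[str, object]
ALLOWED = {"ls", "cat", "grep", "python", "pytest"}  # assumed conservative allowlist


def run_command(cmd: str) -> str:
    """Run an allowlisted command without a shell and return its output."""
    argv = shlex.split(cmd)
    if not argv or argv[0] not in ALLOWED:
        return f"refused: {cmd!r} is not on the allowlist"
    try:
        proc = subprocess.run(argv, capture_output=True, text=True, timeout=60)
    except (FileNotFoundError, subprocess.TimeoutExpired) as exc:
        return f"error: {exc}"
    return proc.stdout + proc.stderr


def agent_loop(goal: str,
               propose: Callable[[List[Dict[str, str]]], Action],
               execute: Callable[[str], str] = run_command,
               max_steps: int = 10) -> str:
    """Ask the model for an action, run it, feed the output back, repeat."""
    history = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        action = propose(history)
        if action.get("done"):
            return str(action.get("answer", ""))
        observation = execute(str(action["cmd"]))
        history.append({"role": "assistant", "content": str(action["cmd"])})
        history.append({"role": "user", "content": "Output:\n" + observation})
    return "stopped: step limit reached"
```

The step limit and the allowlist are the two safety valves here: the first stops a model that never declares itself done, and the second keeps it from running arbitrary commands.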

Security and Privacy in the Local Era

One of the primary drivers for the popularity of GLM-4.7-Flash is the increasing demand for data sovereignty. In 2026, many companies have banned the use of cloud-based AI for proprietary codebase analysis. GLM-4.7 offers a “Privacy-First” alternative. When you run the model locally via Ollama or a private server, you eliminate the risk of your intellectual property being used to train the next generation of a competitor's model.

Furthermore, because GLM-4.7 is transparent in its “Thinking,” it is easier to audit for security. You can see whether the model is considering an insecure path in its internal logic and intervene before it outputs a vulnerable code snippet. This human-in-the-loop review of the AI’s thought process is a new security paradigm that is only possible with models that support visible chain of thought.
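A simple way to act on visible reasoning is to scan it for red flags as it streams in. The sketch below uses a deliberately small, hypothetical pattern list; it illustrates the idea and is not a real security scanner, so it would complement, not replace, static analysis of the final code.

```python
import re
from typing import List

# Hypothetical red flags to look for in the model's reasoning text.
RISKY_PATTERNS = {
    "dynamic eval": re.compile(r"\beval\s*\("),
    "shell injection risk": re.compile(r"shell\s*=\s*True"),
    "TLS verification disabled": re.compile(r"verify\s*=\s*False"),
    "weak hash for passwords": re.compile(r"\bmd5\b", re.IGNORECASE),
}


def flag_thinking(thinking: str) -> List[str]:
    """Return the names of risky patterns mentioned in the reasoning text."""
    return [name for name, pattern in RISKY_PATTERNS.items() if pattern.search(thinking)]
```

In a streaming setup, you could call `flag_thinking` on the accumulated reasoning after each chunk and stop generation, or re-prompt the model, as soon as it returns a non-empty list.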

Conclusion: Why GLM-4.7 is the Model to Watch

As we navigate the AI landscape of 2026, GLM-4.7 stands out not just for its benchmarks but for its utility. It represents a shift toward a more intelligent, deliberative, and trustworthy form of AI. By choosing GLM-4.7, especially the Flash variant for local work, developers are equipping themselves with a tool that doesn't just provide answers but understands the logic behind them.

Whether you are a solo developer building the next great app, or an enterprise architect securing a global infrastructure, the reasoning capabilities and bilingual mastery of GLM-4.7 provide a competitive edge. It is a model that encourages a higher standard of collaboration, pushing the boundaries of what is possible with open-weights technology.

Summary Checklist for Getting Started with GLM-4.7

- Hardware: Ensure you have at least 16GB of VRAM (PC) or 32GB Unified Memory (Mac).
- Software: Download Ollama or LM Studio to host the model locally.
- Model Selection: Use GLM-4.7-Flash for daily coding and GLM-4.7 (Full) for heavy-duty architecture and research.
- Prompting: Experiment with “System Prompts” that explicitly invoke the “Thinking” process.
- Integration: Connect the model to your IDE via an OpenAI-compatible API bridge.