Introduction: The AI Model Wars Heat Up

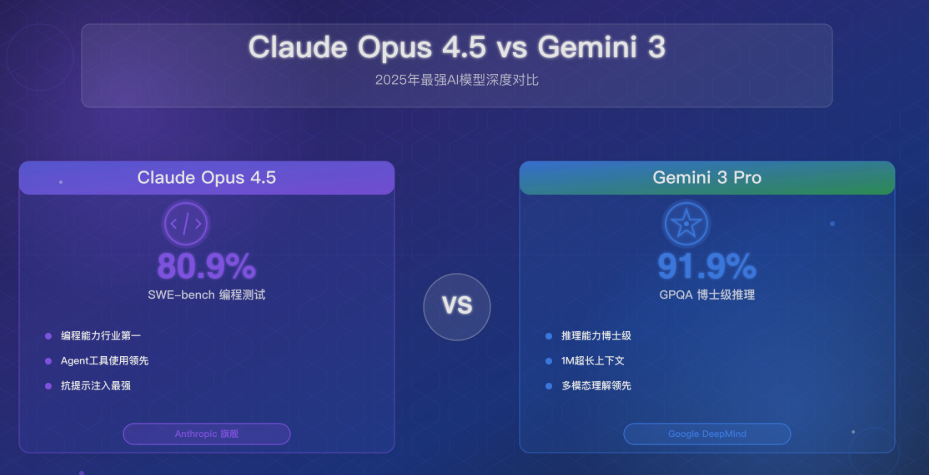

In a remarkable display of competitive AI development, late 2025 saw two flagship model releases within two weeks of each other: Anthropic's Claude Opus 4.5 (November 24) and Google's Gemini 3 Pro (December 5). Both companies claim world-leading performance, but in distinctly different domains. This analysis examines their benchmarks, capabilities, and real-world performance to help developers and enterprises make informed decisions.

TL;DR: Gemini 3 Pro dominates multimodal vision tasks and general knowledge, while Claude Opus 4.5 leads in coding, agentic workflows, and tool use. Your choice depends on whether you prioritize visual understanding or autonomous task execution.

Executive Summary: Head-to-Head Comparison

| Category | Winner | Key Metric |
|---|---|---|
| Overall Intelligence | Gemini 3 Pro | 73 vs 70 on Artificial Analysis Intelligence Index |
| Coding Performance | Claude Opus 4.5 | 80.9% vs ~75% on SWE-bench Verified |
| Vision Understanding | Gemini 3 Pro | SOTA on MMMU Pro, Video MMMU |
| Agentic Tasks | Claude Opus 4.5 | 62.3% vs 43.8% (next best, Claude Sonnet 4.5) on Scaled Tool Use |
| Document Processing | Gemini 3 Pro | 80.5% on CharXiv Reasoning |
| Computer Use | Claude Opus 4.5 | Best OSWorld performance |
| Knowledge/Hallucination | Gemini 3 Pro | 13 vs 10 on AA-Omniscience Index |
| Cost Efficiency | Gemini 3 Pro | $2-4 vs $5 per million input tokens |
| Context Window | Tie | 200,000 tokens each at standard pricing |
| Safety/Alignment | Claude Opus 4.5 | 5% single-attempt prompt injection success rate |

Part 1: Gemini 3 Pro Vision – The Multimodal Champion

Architecture and Release

Released: December 5, 2025

Developer: Google DeepMind

Positioning: “The frontier of vision AI” and “best model in the world for multimodal capabilities”

Gemini 3 Pro represents what Google calls “a generational leap from simple recognition to true visual and spatial reasoning.” The model was specifically architected to handle the most complex multimodal tasks across document, spatial, screen, and video understanding.

Core Capabilities Breakdown

1. Document Understanding Excellence

Gemini 3 Pro sets new standards in processing real-world documents that are messy, unstructured, and filled with interleaved images, illegible handwritten text, nested tables, and complex mathematical notation.

Key Features:

- Derendering capability: Reverse-engineers visual documents into structured code (HTML, LaTeX, Markdown)

- 18th-century handwritten text: Successfully converted an Albany Merchant's Handbook into complex tables

- Mathematical precision: Transforms raw images with mathematical annotations into precise LaTeX code

- Chart reconstruction: Recreated Florence Nightingale's original Polar Area Diagram into interactive charts

Benchmark Performance:

- CharXiv Reasoning: 80.5% (notably outperforms human baseline)

- Document Q&A: State-of-the-art on multi-page complex reports

Real-World Example: When analyzing the 62-page U.S. Census Bureau report, Gemini 3 Pro:

- Located and cross-referenced Gini Index data across multiple tables

- Identified causal relationships (ARPA policy lapses, stimulus payment endings)

- Performed numerical comparisons across time periods

- Synthesized findings into coherent conclusions

This demonstrates true multi-step reasoning across tables, charts, and narrative text—not just information extraction.

2. Spatial Understanding Breakthrough

Gemini 3 Pro delivers the strongest spatial reasoning capability to date, enabling it to make sense of the physical world.

Capabilities:

- Pointing precision: Outputs pixel-precise coordinates for object location

- Pose estimation: Sequences 2D points for complex human pose tracking

- Open vocabulary references: Identifies objects and intent using natural language

- Trajectory tracking: Reflects movements over time
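To make "pixel-precise coordinates" concrete, here is a minimal sketch of how a client might map a returned point onto an image. It assumes points arrive as `[y, x]` pairs normalized to a 0-1000 grid, which is the convention Google's Gemini spatial-understanding documentation describes; check the current API docs before relying on it. The function name and the sample data are hypothetical.

```python
def to_pixels(point_yx, width, height, scale=1000):
    """Convert a normalized [y, x] point to (x, y) pixel coordinates."""
    y_norm, x_norm = point_yx
    x_px = round(x_norm / scale * width)
    y_px = round(y_norm / scale * height)
    return x_px, y_px


# Hypothetical model output for a 1920x1080 image (assumed format).
points = [{"label": "screw", "point": [500, 250]}]

for p in points:
    print(p["label"], to_pixels(p["point"], width=1920, height=1080))
```

Note the axis order: the assumed format lists `y` first, so swapping the two values is the most likely integration bug.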

Applications:

- Robotics: “Given this messy table, come up with a plan on how to sort the trash” with spatially grounded execution

- AR/XR devices: “Point to the screw according to the user manual” with precise visual anchoring

- Quality assurance: Automated inspection with spatial verification

3. Screen Understanding for Agent Automation

Gemini 3 Pro excels at understanding desktop and mobile OS screens, making it ideal for computer use agents that automate repetitive tasks.

Use Cases:

- QA testing automation

- User onboarding flows

- UX analytics and journey mapping

- Automated UI interaction

The model perceives UI elements and clicks with high precision, enabling robust automation even on complex interfaces.

4. Video Understanding Revolution

Gemini 3 Pro represents a massive leap in video comprehension—processing the most complex data format with temporal, spatial, and contextual density.

Advanced Features:

- High frame rate processing: Optimized for >1 FPS (up to 10 FPS) to capture rapid actions

- Video reasoning with “thinking” mode: Traces cause-and-effect relationships over time, understanding why events happen, not just what

- Long-form extraction: Converts video knowledge into functioning apps or structured code

Example: Golf swing analysis at 10 FPS—catching every swing and weight shift for deep biomechanical insights.
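The effect of frame rate is easy to quantify. The sketch below is not tied to any API: it only lists the timestamps a sampler would pick at a given rate, and the helper name is hypothetical. It shows why a swing lasting about two seconds is nearly invisible at 1 FPS but well covered at 10 FPS.

```python
def sample_timestamps(duration_s, fps):
    """Return the timestamps (in seconds) sampled from a clip at a fixed rate."""
    frame_count = int(duration_s * fps)
    return [i / fps for i in range(frame_count)]


# A two-second clip: 2 frames at 1 FPS versus 20 frames at 10 FPS.
print(len(sample_timestamps(2, 1)))
print(len(sample_timestamps(2, 10)))
```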

5. Real-World Application Domains

Education:

- Visual reasoning puzzles (Math Kangaroo competitions)

- Complex chemistry and physics diagrams

- Middle school through post-secondary curriculum support

- Powers Nano Banana Pro's generative capabilities for homework assistance

Medical/Biomedical:

- MedXpertQA-MM: State-of-the-art on expert-level medical reasoning

- VQA-RAD: Leading performance on radiology imagery Q&A

- MicroVQA: Top scores on microscopy-based biological research

Law and Finance:

- Dense report analysis with charts and tables

- Legal document reasoning

- Financial compliance document processing

Gemini 3 Pro Vision Benchmarks

| Benchmark | Gemini 3 Pro Score | الفئة |


|---|---|---|

| MMMU Pro | SOTA | Complex visual reasoning |

| Video MMMU | SOTA | Video understanding |

| CharXiv Reasoning | 80.5% | Document reasoning |

| MedXpertQA-MM | SOTA | Medical imaging |

| VQA-RAD | SOTA | Radiology Q&A |

| MicroVQA | SOTA | Microscopy research |

| Artificial Analysis Intelligence Index | 73 | Overall intelligence |

| CritPt | 9% | Frontier physics (highest) |

| AA-Omniscience Index | 13 | Knowledge/hallucination |

Media Resolution Control Innovation

Gemini 3 Pro introduces granular control over visual processing:

- High resolution: Maximizes fidelity for dense OCR and complex documents

- Low resolution: Optimizes cost/latency for simple recognition

- Native aspect ratio: Preserves original image proportions for quality improvements

Part 2: Claude Opus 4.5 – The Agentic Powerhouse

Architecture and Release

Released: November 24, 2025

Developer: Anthropic

Positioning: “Best model in the world for coding, agents, and computer use”

Claude Opus 4.5 represents Anthropic's strategic focus on high-stakes cognitive labor, particularly coding, long-horizon agentic workflows, and office productivity—rather than pursuing an “omni-model” approach.

Core Capabilities Breakdown

1. Coding Supremacy

Claude Opus 4.5 achieves state-of-the-art performance across software engineering benchmarks, surpassing both Gemini 3 Pro and GPT-5.1.

Benchmark Dominance:

- SWE-bench Verified: 80.9% (highest score globally)

- LiveCodeBench: +16 percentage points over Sonnet 4.5

- Terminal-Bench Hard: +11 percentage points improvement

- WebDev LMarena: 1493 Elo (top position as of Nov 26, 2025)

Real-World Testing: Anthropic tested Opus 4.5 on their internal performance engineering take-home exam (2-hour time limit). Result: Scored higher than any human candidate ever.

Developer Testimonials:

- GitHub's Mario Rodriguez: “Surpasses internal coding benchmarks while cutting token usage in half”

- Cursor users: “Notable improvement over prior Claude models with improved pricing and intelligence”

- 50-75% reduction in tool calling errors and build/lint errors

Autonomous Development: Over one weekend, Opus 4.5 working through Claude Code produced:

- 20 commits

- 39 files changed

- 2,022 additions

- 1,173 deletions

- Multiple large-scale refactorings

2. Agentic Workflow Leadership

Claude Opus 4.5 was specifically optimized for multi-turn, tool-using workflows—the future of AI automation.

Agentic Performance:

- Scaled Tool Use: 62.3% (vs 43.8% for the next best, Claude Sonnet 4.5)

- τ²-Bench Telecom: +12 percentage points over Sonnet 4.5

- MCP Atlas: Leading performance on Model Context Protocol

- OSWorld: Best computer use performance

Self-Improving Agents: Opus 4.5 demonstrated breakthrough capability in autonomous refinement:

- Achieved peak performance in 4 iterations

- Other models couldn't match that quality after 10 iterations

- Learns from experience across technical tasks

- Stores insights and applies them later

Multi-Agent Orchestration: The model excels at managing teams of subagents, coordinating complex distributed workflows.

3. Computer Use: Industry Leader

Anthropic claims Opus 4.5 is “the best model in the world for computer use”—interacting with software interfaces like a human.

Capabilities:

- Button clicking with precision

- Form filling automation

- Website navigation

- Desktop task automation

- Enhanced zoom tool for screen inspection

Context Management:

- Automatic summarization of earlier context for long-running agents

- Context compaction to prevent hitting limits mid-task

- Thinking blocks from previous turns preserved in model context
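The idea behind context compaction can be sketched in a few lines. This is an illustration of the general technique, not Anthropic's implementation: the word-count tokenizer, the `summarize` placeholder, and the `compact` helper are all hypothetical stand-ins.

```python
def count_tokens(text):
    # Crude stand-in: real tokenizers count differently.
    return len(text.split())


def summarize(messages):
    # Placeholder: a real agent would ask the model to write this summary.
    return f"[summary of {len(messages)} earlier messages]"


def compact(history, budget, keep_recent=2):
    """Fold older messages into one summary once the history exceeds the budget."""
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(older)] + recent


history = [
    "open the repo",
    "run the tests now",
    "three tests failed",
    "fix the parser bug",
]
print(compact(history, budget=8))
```

Keeping the most recent turns verbatim matters because they usually hold the state the agent needs for its next step.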

4. Office Productivity & Deep Research

Everyday Tasks: “Meaningfully better” at:

- Creating spreadsheets

- Building presentations

- Drafting documents

- Conducting deep research

Research Capability: ~15% performance increase on deep research evaluations

Example Test: Generate a report on “Old English words that survive today but are not commonly used, and how these kinds of words have changed over time.”

- Completion time: 7 minutes

- Quality: Impressive writing, organization, depth of research

5. Advanced Developer Features

Effort Parameter (Beta): Revolutionary control over computational allocation:

- Low: About 76% fewer tokens at comparable performance

- Medium: Balanced approach

- High: Maximum performance

This enables developers to balance performance against latency and cost for specific use cases.
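A rough way to reason about this trade-off is to convert a token reduction into an effective price. The sketch below assumes the 76% figure quoted above applies to output tokens and uses the $25 per million output price cited later in this article; the helper name is hypothetical, and real savings depend on the workload.

```python
def effective_output_price(price_per_million, token_reduction):
    """Price per million 'baseline' output tokens after a fractional token reduction."""
    if not 0 <= token_reduction < 1:
        raise ValueError("token_reduction must be in [0, 1)")
    return price_per_million * (1 - token_reduction)


# Effective price at low effort, rounded to cents.
print(round(effective_output_price(25, 0.76), 2))
```

Under these assumptions the effective output price falls to about $6 per million baseline tokens, which is why the effort setting can matter more than the list price.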

Programmatic Tool Calling: Execute tools directly in Python for more efficient, deterministic workflows.

Tool Search: Dynamically discover tools from large libraries without consuming context window space.

Hybrid Reasoning: Choose between instant responses or extended thinking based on task requirements.

Claude Opus 4.5 Benchmarks

| Benchmark | Claude Opus 4.5 Score | Category |
|---|---|---|
| SWE-bench Verified | 80.9% | Coding (highest) |
| LiveCodeBench | +16 p.p. over Sonnet 4.5 | Coding |
| Terminal-Bench Hard | +11 p.p. over Sonnet 4.5 | Tool use |
| Scaled Tool Use | 62.3% | Agentic (highest) |
| τ²-Bench Telecom | +12 p.p. over Sonnet 4.5 | Tool workflows |
| MCP Atlas | SOTA | Model Context Protocol |
| OSWorld | SOTA | Computer use |
| CORE-Bench | 95% | Scientific reproducibility |
| Artificial Analysis Intelligence Index | 70 | Overall intelligence |
| AA-Omniscience Index | 10 | Knowledge/hallucination |
| Artificial Analysis Agentic Index | Top rank | Agentic capabilities |
| CritPt | 5% | Frontier physics |
| Prompt Injection Resistance | 5% single-attempt success | Safety |

Part 3: Direct Head-to-Head Comparison

Intelligence & Reasoning

Winner: Gemini 3 Pro (+3 points)

- Gemini 3 Pro: 73 on Artificial Analysis Intelligence Index

- Claude Opus 4.5: 70 on the same index

Both models represent frontier intelligence, but Gemini edges ahead in general reasoning benchmarks. However, the gap is narrow—GPT-5.1 (high) ties Claude at 70, while Grok 4 scores 65.

Context: The 3-point difference matters less than domain-specific strengths. Gemini excels at knowledge-intensive benchmarks requiring breadth of training data and reasoning over facts (GPQA Diamond, MMMLU), while Claude dominates execution-focused tasks.

Coding & Software Engineering

Winner: Claude Opus 4.5 (decisive)

| Metric | Claude Opus 4.5 | Gemini 3 Pro | Advantage |
|---|---|---|---|
| SWE-bench Verified | 80.9% | ~75% | Claude +5.9 p.p. |
| LiveCodeBench | Top tier | Strong | Claude leads |
| Real-world coding | 20+ commits/weekend | N/A | Claude proven |
| Token efficiency | 50% reduction | Unknown | Claude advantage |

Claude's dominance in coding isn't marginal—it represents the strongest software engineering model available globally as of December 2025.

Vision & Multimodal Understanding

Winner: Gemini 3 Pro (decisive)

| Capability | Gemini 3 Pro | Claude Opus 4.5 | Verdict |
|---|---|---|---|
| Document OCR | SOTA, derendering | Good | Gemini clear winner |
| Spatial understanding | Pixel-precise pointing | Limited | Gemini clear winner |
| Video reasoning | 10 FPS, causal logic | N/A | Gemini only player |
| Image analysis | MMMU Pro SOTA | Vision capable | Gemini specialized |
| Chart/table extraction | Outstanding | Good | Gemini leads |

Claude Opus 4.5 is described as “Anthropic's best vision model,” but this is relative to previous Claude models. Gemini 3 Pro was purpose-built for vision excellence and achieves qualitatively superior results.

Agentic Workflows & Tool Use

Winner: Claude Opus 4.5 (dominant)

| Benchmark | Claude Opus 4.5 | Next Best | Gap |
|---|---|---|---|
| Scaled Tool Use | 62.3% | 43.8% (Claude Sonnet 4.5) | +18.5 p.p. |
| τ²-Bench Telecom | Top | Lower | Significant |
| MCP Atlas | SOTA | Lower | Clear lead |
| Self-improvement | 4 iterations | 10+ iterations | At least 2.5x fewer iterations |

The agentic advantage is Claude's defining characteristic. No model comes close to its tool-using, multi-step workflow capabilities.

Computer Use & UI Automation

Winner: Claude Opus 4.5 (clear)

- OSWorld: Anthropic claims “best model in the world for computer use”

- Screen understanding: Both strong, but Claude more reliable

- Automation robustness: Claude designed specifically for this

- Long-running tasks: Claude's context management superior

Gemini 3 Pro has screen understanding capabilities but isn't positioned as a computer use specialist.

Knowledge & Hallucination

Winner: Gemini 3 Pro (+3 points)

- AA-Omniscience Index:

  - Gemini 3 Pro: 13

  - Claude Opus 4.5: 10

- Hallucination Rate:

  - Claude Opus 4.5: 58% (4th-lowest)

  - Gemini 3 Pro: Higher rate

Interpretation: Gemini appears to have broader embedded knowledge, while Claude hallucinates less often when it does answer. This may reflect a larger training corpus on Google's side and Anthropic's emphasis on truthfulness, though the benchmark itself does not establish the cause.

Safety & Alignment

Winner: Claude Opus 4.5 (substantial)

Prompt Injection Resistance:

- Claude Opus 4.5: 5% single-attempt success rate

- 10 attempts: ~33% success rate

- Industry comparison: “Harder to trick than any other frontier model”
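It helps to see how a single-attempt rate compounds over repeated attempts. The sketch below assumes each attempt is independent, which is a simplification; the function name is hypothetical. Under that assumption a 5% single-attempt rate would reach roughly 40% after 10 attempts, so the reported figure of about 33% suggests that repeated attacks are correlated rather than independent.

```python
def cumulative_success(p_single, attempts):
    """Probability that at least one of several independent attempts succeeds."""
    return 1 - (1 - p_single) ** attempts


# Compare the independence model with the ~33% figure reported above.
print(round(cumulative_success(0.05, 10), 3))
```

Either way, the practical lesson is the same: a low single-attempt rate does not protect a system that lets an attacker retry freely.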

Alignment Testing: Anthropic states Opus 4.5 is “the most robustly aligned model we have released to date and, we suspect, the best-aligned frontier model by any developer.”

Concerning Behavior Scores: Lowest among frontier models

Context: While no model is immune to adversarial attacks, Claude Opus 4.5 represents the current safety state-of-the-art. Gemini 3 Pro's safety characteristics weren't emphasized in release materials.

Pricing & Cost Efficiency

Winner: Gemini 3 Pro (significant savings)

| Model | Input Cost (per 1M tokens) | Output Cost (per 1M tokens) | Context Window |
|---|---|---|---|
| Gemini 3 Pro | $2 | $12 | 200K |
| Gemini 3 Pro (>200K) | $4 | $18 | Up to 2M |
| Claude Opus 4.5 | $5 | $25 | 200K |
| Claude Sonnet 4.5 | $3 | $15 | 200K |
| GPT-5.1 family | $1.25 | $10 | 128K |

Analysis:

- Gemini 3 Pro: $2 input (60% cheaper than Claude Opus 4.5)

- Gemini 3 Pro: $12 output (52% cheaper than Claude Opus 4.5)

However: Claude Opus 4.5 represents a 67% price reduction from the previous Opus generation ($15/$75), making frontier performance more accessible.

Token Efficiency Consideration: Claude's “effort parameter” enables 76% token savings at low settings, potentially closing the cost gap in practice.
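The percentages in this analysis follow directly from the list prices. Here is a minimal sketch of the arithmetic, using only the per-million-token prices quoted in this article; the helper name is hypothetical.

```python
def percent_cheaper(cheaper_price, pricier_price):
    """How much cheaper one price is than another, as a percentage of the pricier one."""
    return (pricier_price - cheaper_price) / pricier_price * 100


print(round(percent_cheaper(2, 5)))    # Gemini vs Opus 4.5, input
print(round(percent_cheaper(12, 25)))  # Gemini vs Opus 4.5, output
print(round(percent_cheaper(5, 15)))   # Opus 4.5 vs previous Opus, input
```

The same function reproduces the 60%, 52%, and 67% figures, which confirms they are all measured against the more expensive price.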

Context & Output Limits

Tie:

- Both models: 200,000 token context window

- Claude Opus 4.5: 64,000 token output limit

- Gemini 3 Pro: Extended context available for larger tasks

Both provide industry-leading context handling for complex documents and codebases.

Part 4: Use Case Recommendations

Choose Gemini 3 Pro Vision If You Need:

✅ Document-Heavy Workflows

- Legal contract analysis

- Financial report processing

- Medical record interpretation

- Research paper comprehension

✅ Visual Understanding Tasks

- Product cataloging from images

- Medical imaging analysis

- Educational content with diagrams

- Video content analysis

✅ Spatial Reasoning Applications

- Robotics planning

- AR/XR development

- Quality assurance with visual inspection

- Architectural analysis

✅ Cost-Sensitive Operations

- High-volume image processing

- Startup/SMB budgets

- Prototyping and experimentation

✅ Multimodal Consumer Applications

- Educational apps with rich media

- Content moderation across image/video

- Accessibility tools for visual content

Example Industries: Healthcare (imaging), Legal (document review), Education (visual learning), Media (content analysis)

Choose Claude Opus 4.5 If You Need:

✅ Software Development

- Code generation and refactoring

- Legacy system migration

- Complex debugging

- Autonomous coding agents

✅ Agentic Automation

- Office task automation

- Multi-tool workflows

- Self-improving AI systems

- Long-running autonomous processes

✅ Computer Use & UI Automation

- QA testing automation

- RPA (Robotic Process Automation)

- Data entry automation

- Web scraping and interaction

✅ High-Stakes Enterprise Workflows

- Mission-critical code deployment

- Security-sensitive operations

- Prompt injection resistance requirements

- Reliable multi-step reasoning

✅ Research & Analysis

- Deep research tasks

- Scientific reproducibility (95% CORE-Bench)

- Complex reasoning chains

- Document creation/presentation

Example Industries: Software development, Financial services (compliance), Enterprise IT (automation), Research institutions

Part 5: Technical Specifications Comparison

Model Architecture

| Specification | Gemini 3 Pro | Claude Opus 4.5 |
|---|---|---|
| Architecture | Multimodal transformer | Proprietary (likely transformer-based) |
| Training Focus | Vision + general reasoning | Coding + agentic workflows |
| Context Window | 200,000 tokens (expandable) | 200,000 tokens |
| Output Limit | Not specified | 64,000 tokens |
| Knowledge Cutoff | Not specified | March 2025 |
| Input Modalities | Text, images, video, audio, code | Text, images, PDFs, code |
| Frame Rate (Video) | Up to 10 FPS | N/A |

Developer Experience

| Feature | Gemini 3 Pro | Claude Opus 4.5 |
|---|---|---|
| API Access | Google AI Studio, Vertex AI | Claude API, AWS Bedrock, Azure, GCP |
| Playground | Google AI Studio | claude.ai, Anthropic Console |
| SDK Support | Python, Node.js, Go | Python, TypeScript, Java, Go |
| Streaming | Yes | Yes |
| Function Calling | Yes | Yes (enhanced programmatic) |
| Batch Processing | Yes | Yes (50% savings) |
| Prompt Caching | Yes | Yes (90% savings) |

Platform Integration

Gemini 3 Pro:

- Native Google Workspace integration

- Vertex AI for enterprise

- Google AI Studio for developers

- Android/iOS Gemini app

Claude Opus 4.5:

- GitHub Copilot (all paid tiers)

- Microsoft Foundry (Azure)

- Amazon Bedrock

- Google Cloud Vertex AI

- Cursor IDE integration

- Claude Code for autonomous development

Part 6: Benchmark Deep Dive

The Artificial Analysis Perspective

According to independent testing by Artificial Analysis (which tracks 250+ models):

Intelligence Ranking (December 2025):

- Gemini 3 Pro: 73 points

- Claude Opus 4.5 (Thinking): 70 points (tied with GPT-5.1 high)

- Kimi K2 Thinking: 67 points

- Grok 4: 65 points

- Claude Sonnet 4.5 (Thinking): 63 points

Biggest Uplifts for Opus 4.5 (vs. Sonnet 4.5):

- LiveCodeBench: +16 percentage points

- Terminal-Bench Hard: +11 p.p.

- τ²-Bench Telecom: +12 p.p.

- AA-LCR (Long Context Reasoning): +8 p.p.

- Humanity's Last Exam: +11 p.p.

Where Gemini 3 Pro Leads:

- GPQA Diamond (graduate-level science)

- MMMLU (multilingual knowledge)

- Vision benchmarks (MMMU Pro, Video MMMU)

- Document reasoning (CharXiv)

Where Claude Opus 4.5 Leads:

- SWE-bench Verified (coding)

- All agentic benchmarks listed here (Terminal-Bench Hard, τ²-Bench Telecom, MCP Atlas)

- OSWorld (computer use)

- CORE-Bench (scientific reproducibility)

Coding Benchmarks in Detail

| Benchmark | Claude Opus 4.5 | Gemini 3 Pro | GPT-5.1-Codex-Max | Description |
|---|---|---|---|---|
| SWE-bench Verified | 80.9% | ~75% | ~78% | Real-world GitHub issues |
| LiveCodeBench | Top | Strong | Strong | Live coding competition |
| HumanEval | Not disclosed | Not disclosed | Not disclosed | Classic code generation |
| WebDev LMarena | 1493 Elo | Lower | Lower | Full-stack development |

Verdict: Claude Opus 4.5 is the undisputed coding champion across multiple benchmarks.

Vision Benchmarks in Detail

| Benchmark | Gemini 3 Pro | Claude Opus 4.5 | Description |
|---|---|---|---|
| MMMU Pro | SOTA | Good | Graduate-level multimodal |
| Video MMMU | SOTA | N/A | Video understanding |
| CharXiv Reasoning | 80.5% | Not tested | Document + chart reasoning |
| MedXpertQA-MM | SOTA | N/A | Medical expert exams |
| VQA-RAD | SOTA | N/A | Radiology Q&A |

Verdict: Gemini 3 Pro dominates vision-centric benchmarks where Claude wasn't designed to compete.

Agentic Benchmarks in Detail

| Benchmark | Claude Opus 4.5 | Gemini 3 Pro | GPT-5.1 | Description |
|---|---|---|---|---|
| Terminal-Bench Hard | Top | Lower | Lower | Command-line automation |
| τ²-Bench Telecom | Top | Lower | Lower | Multi-step tool workflows |
| MCP Atlas | SOTA | Not tested | Good | Model Context Protocol |
| OSWorld | SOTA | Good | Lower | Desktop automation |
| CORE-Bench | 95% | Not tested | Lower | Scientific reproducibility |

Verdict: Claude Opus 4.5 leads every agentic benchmark listed here. The one gap quantified in this comparison is 18.5 percentage points on Scaled Tool Use.

Part 7: Real-World Testing Insights

Coding Performance (Claude Opus 4.5)

Developer: Simon Willison (sqlite-utils project)

- Timeframe: One weekend

- Results: Alpha release with several large-scale refactorings

- Stats: 20 commits, 39 files, 2,022 additions, 1,173 deletions

- Assessment: “Excellent new model… clearly a meaningful step forward”

GitHub Integration:

- Mario Rodriguez (GitHub CPO): “Surpasses internal coding benchmarks while cutting token usage in half”

- “Especially well-suited for tasks like code migration and code refactoring”

Document Processing (Gemini 3 Pro)

Test: U.S. Census Bureau 62-Page Report

- Task: Complex multi-step analysis of Gini Index across tables and figures

- Performance:

  - Located data across multiple non-contiguous sections

  - Identified causal relationships in policy text

  - Performed accurate numerical comparisons

  - Synthesized coherent conclusions

Result: Exceeds human baseline on CharXiv Reasoning (80.5%)

Agentic Workflows (Claude Opus 4.5)

Self-Improvement Test:

- Opus 4.5: Peak performance in 4 iterations

- Other models: Couldn't match quality after 10 iterations

- Implication: At least 2.5x fewer refinement iterations to reach peak quality

CORE-Bench (Scientific Reproducibility):

- Initial score (CORE-Agent scaffold): 42%

- With Claude Code (better scaffold): 78%

- After manual review fixes: 95%

- Key insight: Model-scaffold coupling matters enormously

Vision Tasks (Gemini 3 Pro)

Handwriting to Table Conversion:

- Successfully converted 18th-century Albany Merchant's Handbook into complex structured tables

- Handled illegible cursive handwriting

- Maintained relational data integrity

Mathematical Reconstruction:

- Transformed raw images with mathematical annotations into precise LaTeX code

- Preserved complex equation structures

- Generated compilable, accurate output

Interactive Chart Recreation:

- Rebuilt Florence Nightingale's original Polar Area Diagram

- Made it interactive with toggles

- Preserved historical data accuracy

Part 8: Cost Analysis & ROI

Pricing Comparison Matrix

| Use Case | Gemini 3 Pro Cost | Claude Opus 4.5 Cost | Savings with Gemini |
|---|---|---|---|
| 1M input tokens (analysis) | $2 | $5 | 60% |
| 1M output tokens (generation) | $12 | $25 | 52% |
| 100K context + 10K output | $0.32 | $0.75 | 57% |
| 1M cached input tokens (90% savings) | $0.20 | $0.50 | 60% |
| 1M input tokens, batch processing (50% savings) | $1 | $2.50 | 60% |

Total Cost of Ownership Considerations

Gemini 3 Pro Advantages:

- Lower base pricing

- No extra charges for vision features (built-in)

- Google Cloud credits often available

- Volume discounts at enterprise scale

Claude Opus 4.5 Advantages:

- Effort parameter: 76% token reduction at low settings

- Fewer retries needed (higher first-attempt success rate in coding)

- Token efficiency in coding (50% reduction vs. previous Opus)

- Prompt caching: 90% savings on repeated context

Real-World Cost Scenarios

Scenario 1: Document Processing Service (1B input + 1B output tokens/month)

- Gemini 3 Pro: $2,000 input + $12,000 output = $14,000/month

- Claude Opus 4.5: $5,000 input + $25,000 output = $30,000/month

- Savings with Gemini: $16,000/month (53%)

Scenario 2: Coding Agent (500M input + 500M output tokens/month)

- Gemini 3 Pro: $1,000 input + $6,000 output = $7,000/month

- Claude Opus 4.5: $2,500 input + $12,500 output = $15,000/month

- Claude Opus 4.5 with the effort parameter at low (76% fewer output tokens): $2,500 + $3,000 = $5,500/month

- Winner: Claude Opus 4.5 with optimization (21% savings versus Gemini 3 Pro)

Scenario 3: Vision-Heavy Application (2B input + 2B output tokens/month)

- Gemini 3 Pro: $4,000 input + $24,000 output = $28,000/month

- Claude Opus 4.5: Vision not primary strength; would need Sonnet 4.5

- Claude Sonnet 4.5: $6,000 input + $30,000 output = $36,000/month

- Winner: Gemini 3 Pro (22% savings + better vision capability)

Key Insight: For pure document/vision workflows, Gemini 3 Pro offers better ROI. For coding/agentic tasks, Claude's efficiency features can offset higher base pricing.
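The scenario totals can be reproduced with a small cost function. This sketch uses the per-million-token list prices from the pricing table and assumes, as the dollar figures imply, that each scenario bills its stated volume once as input and once as output. The function and dictionary names are hypothetical.

```python
# Dollars per million tokens (input, output), from the pricing table in this article.
PRICES = {
    "Gemini 3 Pro": (2, 12),
    "Claude Opus 4.5": (5, 25),
    "Claude Sonnet 4.5": (3, 15),
}


def monthly_cost(model, input_millions, output_millions):
    """Monthly cost in dollars for volumes given in millions of tokens."""
    input_price, output_price = PRICES[model]
    return input_millions * input_price + output_millions * output_price


# Scenario 1: 1B input + 1B output tokens.
print(monthly_cost("Gemini 3 Pro", 1000, 1000))     # 14000
print(monthly_cost("Claude Opus 4.5", 1000, 1000))  # 30000

# Scenario 2 at low effort: 500M output tokens cut by 76% leaves 120M.
print(monthly_cost("Claude Opus 4.5", 500, 120))    # 5500
```

Changing the input-to-output ratio shifts the totals noticeably, so it is worth rerunning the numbers with a measured ratio from your own workload.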

Part 9: Limitations & Weaknesses

Gemini 3 Pro Limitations

❌ Not Specialized for Coding

- While capable, doesn't match Claude Opus 4.5's software engineering performance

- SWE-bench Verified score trails by roughly 6 percentage points (~75% vs 80.9%)

- Less effective at autonomous coding workflows

❌ Agentic Capabilities Less Mature

- Tool-use benchmarks significantly below Claude

- Multi-step agent workflows not as robust

- Less reliable for long-running autonomous tasks

❌ Computer Use Secondary

- Screen understanding present but not flagship feature

- Desktop automation less reliable than Claude

- Fewer developer tools for agent scaffolding

❌ Safety Characteristics Unclear

- Prompt injection resistance not prominently featured

- Alignment testing less transparent

- Red teaming results not published

❌ Token Efficiency Controls Limited

- No equivalent to Claude's “effort parameter”

- Less granular control over compute allocation

- May use more tokens for same task

Claude Opus 4.5 Limitations

❌ Vision Capabilities Second-Tier

- Can't match Gemini 3 Pro's multimodal performance

- Video understanding not present

- Spatial reasoning less sophisticated

- Document derendering less accurate

❌ Knowledge Breadth Narrower

- AA-Omniscience score 10 vs. Gemini's 13

- Smaller training corpus

- Less embedded factual knowledge

- May need more web searches for general questions

❌ Higher Base Pricing

- $5 input vs. Gemini's $2 (2.5x the price)

- $25 output vs. Gemini's $12 (about 2.1x the price)

- Only mitigated by efficiency features

❌ Physics/Math Reasoning Gap

- CritPt score 5% vs. Gemini's 9%

- Frontier physics problems more challenging

- Graduate-level science (GPQA) trails Gemini

❌ Consumer Integration Limited

- No native Android/iOS app with Opus 4.5

- Requires a paid subscription (Pro starts at about $20/month; Max tiers cost more)

- Less accessible for casual users

Part 10: Future Outlook & Development Trajectory

Gemini 3 Pro's Likely Evolution

Strengths to Amplify:

- Further vision capability improvements (already SOTA)

- Expanded video understanding (frame rates, length)

- Enhanced spatial reasoning for robotics

- Better document derendering accuracy

Gaps to Address:

- Agentic workflow capabilities

- Coding benchmark performance

- Tool-use sophistication

- Computer use reliability

Strategic Direction: Google is likely to maintain multimodal leadership while gradually improving agentic capabilities. Expect tighter integration with Google Workspace, Android, and Cloud Platform.

Claude Opus 4.5's Likely Evolution

Strengths to Amplify:

- Extended thinking mode improvements

- Even better tool use and agent capabilities

- Longer context windows (>200K)

- More sophisticated self-improvement

Gaps to Address:

- Vision capabilities (though not core focus)

- Knowledge breadth

- Consumer accessibility

- Pricing competitiveness

Strategic Direction: Anthropic will likely double down on agentic AI and coding, potentially releasing specialized sub-models. Expect continued safety leadership and enterprise focus.

Market Positioning Predictions

Gemini 3 Pro:

- Becomes default for vision-centric applications

- Dominates medical imaging, education, media sectors

- Maintains cost leadership

- Expands into robotics and AR/XR

Claude Opus 4.5:

- Solidifies position as premier coding model

- Becomes standard for AI agents in enterprise

- Leads in safety-critical applications

- Powers next generation of autonomous software development

Convergence vs. Specialization: Rather than converging to identical capabilities, expect continued specialization—Gemini for multimodal, Claude for agentic execution. This benefits the ecosystem by offering clear differentiation.

Part 11: Final Verdict & Recommendations

The Nuanced Answer

Neither model is universally “better”—they excel in different domains by design. Your choice depends entirely on your primary use case.

Decision Framework

Choose Gemini 3 Pro Vision if:

- ✅ Visual content processing is core to your application

- ✅ You need document understanding at scale

- ✅ Cost efficiency matters for high-volume operations

- ✅ Medical, legal, or educational domains

- ✅ Video analysis capabilities required

Choose Claude Opus 4.5 if:

- ✅ Software development is your primary use case

- ✅ Building autonomous AI agents

- ✅ Computer use automation needed

- ✅ Safety and alignment are critical

- ✅ Reliable multi-step execution matters most

Hybrid Strategy

Many enterprises will use both models:

- Gemini 3 Pro for document intake, visual processing, knowledge retrieval

- Claude Opus 4.5 for code generation, agent orchestration, execution

This plays to each model's strengths and creates a more robust AI architecture.
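In code, a hybrid setup can start as a simple routing table. The sketch below mirrors the split described above; the task names and model labels are hypothetical placeholders for illustration, not official API model IDs.

```python
# Hypothetical labels for illustration, not official API model IDs.
GEMINI = "gemini-3-pro"
CLAUDE = "claude-opus-4.5"

ROUTES = {
    "document_intake": GEMINI,
    "visual_processing": GEMINI,
    "knowledge_retrieval": GEMINI,
    "code_generation": CLAUDE,
    "agent_orchestration": CLAUDE,
    "execution": CLAUDE,
}


def pick_model(task_type):
    """Return the model label for a task type, failing loudly on unknown types."""
    try:
        return ROUTES[task_type]
    except KeyError:
        raise ValueError(f"no route defined for task type: {task_type!r}") from None


print(pick_model("document_intake"))
print(pick_model("code_generation"))
```

Failing on unknown task types, rather than silently defaulting to one model, keeps routing decisions explicit as new workloads are added.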

The Bottom Line

Best Overall Multimodal Model: Gemini 3 Pro

- Superior vision, spatial reasoning, document processing

- Broader knowledge base

- More cost-effective for general use

Best Coding & Agentic Model: Claude Opus 4.5

- Unmatched software engineering performance

- Leading tool use and computer automation

- Best safety and alignment characteristics

Best Value: Gemini 3 Pro (for most use cases)

Best Reliability: Claude Opus 4.5 (for mission-critical coding/agents)

Final Recommendation Score

| Category | Gemini 3 Pro | Claude Opus 4.5 |
|---|---|---|
| Vision & Multimodal | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ |
| Coding | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Agentic Workflows | ⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Document Processing | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| Cost Efficiency | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ |
| Safety & Alignment | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Knowledge Breadth | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| Computer Use | ⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
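One way to apply these ratings to a specific project is a weighted average over the categories that matter to you. The sketch below copies the star counts from the table above; the weights are hypothetical examples, and the result is only as meaningful as the ratings themselves.

```python
# Star ratings from the table above: (Gemini 3 Pro, Claude Opus 4.5).
RATINGS = {
    "Vision & Multimodal": (5, 3),
    "Coding": (4, 5),
    "Agentic Workflows": (3, 5),
    "Document Processing": (5, 4),
    "Cost Efficiency": (5, 3),
    "Safety & Alignment": (4, 5),
    "Knowledge Breadth": (5, 4),
    "Computer Use": (3, 5),
}


def weighted_scores(weights):
    """Weighted average star rating per model for the given category weights."""
    total = sum(weights.values())
    gemini = sum(RATINGS[c][0] * w for c, w in weights.items()) / total
    claude = sum(RATINGS[c][1] * w for c, w in weights.items()) / total
    return {"Gemini 3 Pro": gemini, "Claude Opus 4.5": claude}


# Hypothetical weights for a coding-focused team.
coding_team = {"Coding": 3, "Agentic Workflows": 2, "Cost Efficiency": 1}
print(weighted_scores(coding_team))
```

A vision-focused team would weight Vision & Multimodal and Document Processing instead, which tips the result toward Gemini 3 Pro.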

Conclusion: A New Era of Specialized AI Excellence

December 2025 marks an inflection point: rather than racing toward identical “do-everything” models, leading AI companies are pursuing specialized excellence. Gemini 3 Pro and Claude Opus 4.5 represent two masterfully executed strategies—multimodal understanding versus agentic execution.

For developers and enterprises, this specialization is advantageous. Clear differentiation enables better tool selection, and both models push their respective frontiers to unprecedented levels.

The real winner? The AI development community, which now has access to two world-class models optimized for different, complementary tasks.

Start building today:

- Gemini 3 Pro: Google AI Studio | Vertex AI

- Claude Opus 4.5: Claude Console | AWS Bedrock | Azure Foundry

The frontier of AI has never been more exciting—or more specialized.