Embodied AI achieved remarkable breakthroughs in 2025. Companies like Tesla, Meta, and 1X introduced humanoid robots capable of natural walking, object manipulation, and real-world job performance. Drones now react faster than human operators in complex environments.

Real-world adoption surged as Duolingo Max and Notion AI transformed education and productivity, while industries saw AI agents automate claims processing, healthcare administration, and IT support.

Nations and organizations accelerated progress:

- Vietnam, Korea, and Malaysia pushed AI alliances and talent development.
- Nigeria and Ghana invested in AI skills and inclusivity.

These advances reshape daily life and redefine industries worldwide.

Key Takeaways

- Humanoid robots now perform real-world tasks with human-like movement and superhuman reflexes, improving industries like healthcare and logistics.
- Advanced world models help AI understand and predict environments, enabling faster learning and better decision-making in robots.
- Multimodal AI and agent loops allow robots to process multiple types of information and self-improve, making them more reliable and adaptable.
- Embodied AI boosts efficiency in healthcare, manufacturing, and smart environments, saving costs and enhancing safety and productivity.
- The future of embodied AI includes smarter, more agile robots, human augmentation, and ethical development, but challenges like safety and fairness remain.

Embodied AI Breakthroughs

Humanoid Robots

Humanoids have moved from laboratory prototypes to active roles in real-world environments. Companies such as Tesla, 1X, and Figure have deployed humanoid robots in manufacturing, logistics, and service industries. These robots now perform tasks like sorting packages, assembling components, and assisting with inventory management. Their ability to walk naturally, manipulate objects, and interact with people has improved dramatically.

Humanoids now demonstrate superhuman reflexes in dynamic settings. Drones equipped with advanced robotics systems outperform human operators in obstacle avoidance and rapid response scenarios.

|

Application Area |

Metric Description |

Quantitative Result |

|---|---|---|

|

Surgical instrument detection |

Area Under Precision-Recall Curve (AUPR) |

Up to 0.972 |

|

Surgical instrument detection |

Processing speed (Frames Per Second, FPS) |

38.77–87.44 FPS |

|

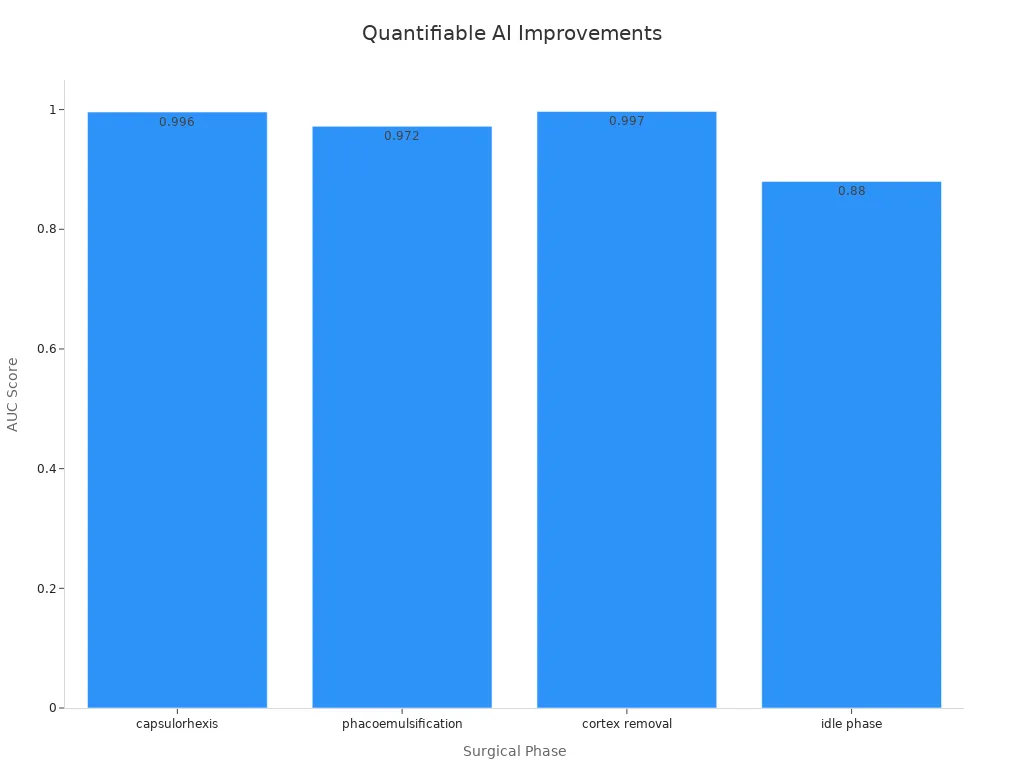

Surgical phase recognition |

Area Under ROC Curve (AUC) for capsulorhexis phase |

0.996 |

|

Surgical phase recognition |

AUC for phacoemulsification phase |

0.972 |

|

Surgical phase recognition |

AUC for cortex removal phase |

0.997 |

|

Surgical phase recognition |

AUC for idle phase recognition |

0.880 |

|

Autonomous robotic navigation |

Positional accuracy |

Sub-millimeter accuracy |

|

Autonomous disease screening |

Sensitivity in detecting referable posterior pathologies |

95% |

|

Autonomous disease screening |

Specificity in detecting referable posterior pathologies |

76% |

|

Robotic surgical task autonomy |

Adaptation to surgeon preferences via RL and imitation learning |

Demonstrated personalized procedural execution |

These metrics show how embodied AI and robotics have reached new levels of precision, speed, and adaptability. Humanoids now support surgeons, automate warehouse operations, and assist in healthcare settings.
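To make the screening figures in the table concrete, here is a minimal sketch of how sensitivity and specificity are computed from raw counts. The counts are hypothetical, chosen only so the ratios match the 95% and 76% reported above; they are not taken from the underlying study.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of truly diseased cases that the screener correctly flags."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Share of truly healthy cases that the screener correctly clears."""
    return true_neg / (true_neg + false_pos)


# Hypothetical counts (not from the cited study), picked so the ratios
# match the 95% sensitivity and 76% specificity in the table.
diseased_flagged, diseased_missed = 95, 5
healthy_cleared, healthy_flagged = 76, 24

print(f"sensitivity = {sensitivity(diseased_flagged, diseased_missed):.2f}")
print(f"specificity = {specificity(healthy_cleared, healthy_flagged):.2f}")
```

Read this way, a 76% specificity means roughly one in four healthy cases would still be flagged for referral, which is worth keeping in mind next to the headline sensitivity number.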

World Models

World models form the cognitive backbone of embodied AI. These models allow robots and AI agents to understand, predict, and interact with their environments. Recent advancements have transformed embodied intelligence systems by enabling machines to simulate and reason about the world in ways that resemble human cognition.

Meta AI's Joint Embedding Predictive Architecture (JEPA) stands out as a major leap. JEPA reduces GPU power usage by a factor of two to ten compared to traditional AI methods. It operates in an abstract representation space, which means it can capture the meaning behind actions and adapt quickly to new tasks with less data. The Video JEPA (V-JEPA) model further improves training and sample efficiency by 1.5 to 6 times, predicting missing parts of videos in abstract space and ignoring unpredictable noise. These advances allow embodied AI to learn faster, adapt to new scenarios, and support causal reasoning.
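The idea of predicting in an abstract representation space can be illustrated with a toy sketch. This is not Meta's implementation: the `encode` and `predict` functions below are hypothetical stand-ins (real systems use learned neural networks), and the only point is that the loss compares embeddings rather than raw input values.

```python
from typing import List

Vector = List[float]


def encode(patch: Vector) -> Vector:
    """Toy encoder (assumption): summarize a patch by its mean and range."""
    return [sum(patch) / len(patch), max(patch) - min(patch)]


def predict(context_embedding: Vector, weights: List[Vector]) -> Vector:
    """Toy linear predictor from a context embedding to a target embedding."""
    return [sum(w * x for w, x in zip(row, context_embedding)) for row in weights]


def latent_loss(predicted: Vector, target: Vector) -> float:
    """Mean squared error measured in embedding space, not input space."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


context_patch = [0.1, 0.2, 0.3, 0.4]  # visible part of the input
target_patch = [0.2, 0.3, 0.4, 0.5]   # masked part the predictor never sees
identity = [[1.0, 0.0], [0.0, 1.0]]   # untrained predictor weights

loss = latent_loss(predict(encode(context_patch), identity), encode(target_patch))
print(f"latent loss = {loss:.4f}")
```

Because the encoder keeps only a summary of each patch, fine-grained detail that cannot be predicted never enters the loss, which is one way to read the "ignoring unpredictable noise" claim above.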

The integration of world models with robotics and physical systems expands their application. Robots now use these models to navigate complex environments, manipulate objects, and interact with humans more naturally.

World models have become essential for research and deployment, supporting everything from autonomous vehicles to assistive humanoids in hospitals.

Multimodal & Agent Loops

Multimodal AI and agent loops have accelerated the deployment and effectiveness of embodied AI. These systems process information from multiple sources, such as vision, sound, and touch, enabling robots to make better decisions in real time. Agent loops use feedback to self-improve, allowing robots and AI agents to adapt to changing environments and user needs.
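A minimal sketch of one pass through such a loop might look like the following. The `Observation` fields, the weighting scheme, and the update rule are all illustrative assumptions rather than any particular robot's design: the agent fuses three modality readings, acts on the fused estimate, then uses the observed outcome to adjust how much it trusts each sensor.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Observation:
    """Per-modality estimate (0 to 1) that the path ahead is blocked."""
    vision: float
    sound: float
    touch: float


def fuse(obs: Observation, weights: Dict[str, float]) -> float:
    """Late fusion: weighted average of the per-modality estimates."""
    total = sum(weights.values())
    return sum(weights[name] * getattr(obs, name) for name in weights) / total


def update_weights(weights: Dict[str, float], obs: Observation,
                   blocked: bool, rate: float = 0.5) -> Dict[str, float]:
    """Feedback step: reward modalities whose estimate matched the outcome."""
    truth = 1.0 if blocked else 0.0
    updated = {}
    for name, weight in weights.items():
        error = abs(getattr(obs, name) - truth)
        # Errors under 0.5 raise the weight, errors over 0.5 lower it.
        updated[name] = max(0.05, weight + rate * (0.5 - error))
    return updated


weights = {"vision": 1.0, "sound": 1.0, "touch": 1.0}
obs = Observation(vision=0.9, sound=0.2, touch=0.8)

belief = fuse(obs, weights)                           # act on the fused estimate
weights = update_weights(weights, obs, blocked=True)  # learn from the outcome
print(belief, weights)
```

After this single step the sound channel, which misjudged the obstacle, carries less weight than vision and touch on the next pass.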

Recent advancements focus on simplifying deployment and speeding up the testing process. Generative feedback loops allow databases and agents to self-adapt, supporting full CRUD (Create, Read, Update, Delete) operations. This capability enhances agent responsiveness and reliability. Faster real-time processing and decision-making have become standard, making embodied AI suitable for mainstream use.

The establishment of robust evaluation metrics ensures that performance remains consistent from early prototypes to production environments.

Multimodal capabilities and agent loops now drive progress in robotics, healthcare, and smart environments. These improvements help embodied AI systems learn from experience, interact with people, and solve complex problems across industries.

Industry Applications

Healthcare & Assistance

Healthcare organizations now use embodied AI to improve patient care and support clinical staff. Robots help with rehabilitation, monitor vital signs, and assist with daily tasks for elderly patients. A recent survey found that 23% of people have used AI-enabled health services, and 55% feel familiar with these devices. Researchers used statistical models to show that people are more likely to adopt AI in healthcare when they see clear benefits. Clinical decision support systems powered by embodied AI reduce workload for emergency physicians and increase satisfaction. Chatbots and virtual agents help children and adults manage mental health and answer health questions.

| Study Objective | Methodology | Sample Size | Key Statistical Evidence |
|---|---|---|---|
| Improve usability of Clinical Decision Support System (CDSS) | Experimental simulation and observation | 32 clinicians | Emergency physicians reported lower workload and higher satisfaction with human factors–based CDSS vs traditional CDSS |
| Develop risk prediction tool for medication-related harm | Mathematical/numerical analysis | 1280 elderly patients | Risk prediction tool achieved C-statistic of 0.69 using 8 variables |
| Assess usability and acceptability of AI symptom checker | Survey measuring ease of use | 523 patients | Majority found system easy to use; 425 patients reported no change in care-seeking behavior |
| Evaluate embodied conversational agent's impact on perception | Interview study with Acosta and Ward Scale | 20 patients | Older male agent perceived as more authoritative (P=0.03); no added value seen in health app |
| Evaluate chatbot for adolescent mental well-being | Experimental, participatory design, survey | 20 children | 16 found chatbot useful; 19 found it easy to use |
| Usability of smartphone camera for oral health self-diagnosis | Interview study using NASA-TLX | 500 volunteers | Experts agreed on acceptable results; app increased oral health knowledge |
| Feasibility of mobile sensor system for pulmonary obstruction severity | Mathematical/numerical data | 91 patients, 40 healthy controls | Patients rated smartphone assessment tool comfortable (mean 4.63/5, σ=0.73) |
| Evaluate deep learning eye-screening system | Observation and interviews | 13 clinicians, 50 patients | Challenges included increased workload, low image quality, and internet speed issues |
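The C-statistic reported for the risk prediction tool is the probability that a randomly chosen patient who experienced harm received a higher risk score than a randomly chosen patient who did not. The sketch below computes it by direct pairwise comparison; the scores and outcomes are made up for illustration and are unrelated to the study's data.

```python
from typing import Sequence


def c_statistic(scores: Sequence[float], outcomes: Sequence[int]) -> float:
    """Concordance: share of (harmed, unharmed) pairs ranked correctly.

    Ties in score count as half a concordant pair.
    """
    harmed = [s for s, y in zip(scores, outcomes) if y == 1]
    unharmed = [s for s, y in zip(scores, outcomes) if y == 0]
    if not harmed or not unharmed:
        raise ValueError("need at least one case in each outcome group")
    concordant = 0.0
    for s_pos in harmed:
        for s_neg in unharmed:
            if s_pos > s_neg:
                concordant += 1.0
            elif s_pos == s_neg:
                concordant += 0.5
    return concordant / (len(harmed) * len(unharmed))


# Hypothetical risk scores and outcomes (1 = harm occurred, 0 = no harm).
risk_scores = [0.9, 0.4, 0.6, 0.3, 0.5]
harm_observed = [1, 1, 0, 0, 0]

print(f"C-statistic = {c_statistic(risk_scores, harm_observed):.2f}")
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, so a figure near 0.69 indicates a tool that discriminates better than chance but still misorders a sizeable share of patient pairs.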

Manufacturing & Logistics

Manufacturers and logistics companies rely on embodied AI to boost efficiency and cut costs. Robots sort packages, load trucks, and manage inventory with high accuracy. Companies like UPS and Amazon report major savings and productivity gains. For example, UPS uses an AI system that saves $300-$400 million each year and reduces fuel use by 10 million gallons. Warehouse automation lowers labor costs by up to 40% and improves safety by up to 90%. DHL’s sorting robots increase capacity by 40%, while Amazon’s Sequoia system speeds up inventory processing by 75%.

| Benefit Area | Specific Metrics and Examples |
|---|---|
| Cost Savings | UPS ORION system saves $300-$400 million annually; early AI adopters reduce logistics costs by 15%; warehouse operational costs cut by up to 50% |
| Fuel Consumption Reduction | UPS saves 10 million gallons annually; 10-15% fuel savings reported; Maersk achieves up to 15% fuel cost savings |
| Labor Cost Reduction | Warehouse labor costs reduced by up to 40% through automation |
| Inventory Optimization | Inventory levels reduced by 35%; holding costs cut by 10-30% |
| Service Level Improvements | Service levels increased by 65% among early AI adopters |
| Throughput/Productivity Gains | DHL sorting robots increase capacity by 40%; picking robots boost productivity by 30-180%; Amazon's Sequoia system speeds inventory processing by 75% |
| Error Reduction | Demand forecasting errors reduced by 20-50%; manual data entry errors cut by 60% |
| Safety Improvements | Predictive maintenance reduces equipment failures by 35%; warehouse automation improves safety by up to 90% |

Smart Environments

Smart environments use embodied AI to create safer, more efficient spaces. Google’s smart grid optimization cut energy use by 15%. IBM’s water monitoring improved water quality by 20%. Robots help with elderly care, disaster response, and space exploration. They also support education and home tasks. Boston Dynamics’ robots use computer vision to move over rough ground. Tesla’s Optimus robot helps in factories and homes. Soft robots from Carnegie Mellon University learn to fold laundry and prepare food. Social robots engage children and support people with autism through gestures and adaptive behavior.

- Elderly care robots assist with lifting, fetching, and emotional support.
- Disaster response robots find survivors and assess risk zones.
- Manufacturing robots sort packages and adapt to changing environments.
- Healthcare robots help with mobility exercises and monitor health.
- Space robots work on the Moon and Mars.
- Large language models help robots understand complex instructions.
- Boston Dynamics’ robots perform complex tasks in rough terrain.
- Tesla’s Optimus robot supports factory and home tasks.
- Soft robots learn dexterous tasks like folding laundry.
- Social robots engage children and support autism therapy.

Research & Prototypes

AI Index Highlights

Recent research shows rapid progress in autonomous AI agents. These agents now combine large language models with software tools to complete tasks in complex environments. The length of tasks they handle doubles about every seven months, an exponential pattern reminiscent of Moore’s Law. Benchmarks like RE-Bench reveal that AI agents outperform humans in short, well-defined tasks. They solve problems faster and achieve higher scores. In longer and more complex challenges, human experts still lead. This trend highlights the shift from passive language models to embodied AI agents that interact with the real world. The field of robot research and development benefits from these advances, as embodied AI systems become more capable and adaptable.

Researchers see a clear path from language-based models to embodied agents that can work in hospitals, factories, and homes.
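The seven-month doubling figure is easier to reason about as a formula. The sketch below projects task length forward under the assumption that the trend simply continues unchanged, which is an extrapolation, not a forecast from the source; the 60-minute starting point is hypothetical.

```python
def projected_task_length(current_minutes: float, months_ahead: float,
                          doubling_months: float = 7.0) -> float:
    """Exponential growth: length doubles once per `doubling_months`."""
    return current_minutes * 2 ** (months_ahead / doubling_months)


# Hypothetical starting point: an agent that handles 60-minute tasks today.
for months in (0, 7, 14, 21):
    minutes = projected_task_length(60, months)
    print(f"+{months:>2} months: {minutes:.0f} minutes")
```

Three doublings, or 21 months, turn a one-hour task into an eight-hour one, which shows how quickly the gap on longer tasks could narrow if the pace holds.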

Open Projects

Open projects play a key role in advancing AI research. Many projects focus on auditing and evaluating the fairness, accuracy, and transparency of AI systems. For example, one project tests large language models in high-stakes decisions, such as predicting recidivism. Another project creates tools for auditing medical AI systems. These efforts use both qualitative and quantitative methods to measure performance and reliability.

| Project Name | Description | Type of Evidence Provided |
|---|---|---|
| FATE | Evaluates LLMs in decision-making, compares human and AI predictions | Statistical and qualitative analyses |
| Medical AI Auditing | Develops ethics-based auditing tools | Conceptual framework, prototype tool |
| Motion Capture Audits | Assesses hardware and data collection in AI systems | Empirical assessment |
| Responsible AI Maturity | Audits organizations on AI ethics and practices | Empirical strategies, assessment tools |
| Audit4SG | Builds customizable AI auditing tools for social good | Ontology, tool prototype, framework |

OpenAI’s guide to A/B testing shows how startups use live data to compare AI models. This approach uncovers challenges like cost and statistical errors, helping teams improve their prototypes.
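One common way to guard against the statistical errors mentioned above is a two-proportion z-test on the success rates of the two models. The sketch below is a generic textbook version, not taken from the guide itself, and the traffic numbers are hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z(success_a: int, n_a: int,
                     success_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in success rates."""
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of a standard normal, via erfc.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: model A resolves 120 of 1000 requests,
# model B resolves 150 of 1000.
z, p = two_proportion_z(120, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With samples this size, a three-point difference sits right at the edge of the usual 0.05 threshold, which illustrates why small live tests can mislead and why larger samples drive up cost.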

Customization

Customization stands at the center of embodied AI research. Developers and users measure adaptability using several metrics. Performance-related measurements track task success rates and completion times. Standardized functional tests, such as the 10 Meter Walk Test, appear often in medical applications. Subjective usability attributes, like comfort and intuitiveness, are gathered through interviews and questionnaires.

| Metric Type | Examples / Details | Usage / Notes |
|---|---|---|
| Performance-Related | Task success/failure, time for task, sensorimotor integration | Most popular; used in 70% of studies |
| Functional Measures | 10 Meter Walk Test, Box and Block Test | Common in medical AI research |
| Usability Attributes | Comfort, ergonomics, adaptability | Collected via interviews, surveys |
| Questionnaires | SUS, NASA-TLX, custom scales | 73% use custom questionnaires |

These metrics help researchers and developers understand how users modify and adapt embodied AI prototypes. They ensure that AI systems meet real-world needs and support ongoing improvements.
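Of the questionnaires listed in the table, the System Usability Scale (SUS) has a fixed scoring rule that is easy to get wrong by hand. The sketch below applies the standard scoring: ten items rated 1 to 5, odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5. The sample responses are hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score a 10-item SUS questionnaire on the 0 to 100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
        if item_number % 2 == 1:
            total += rating - 1   # positively worded items
        else:
            total += 5 - rating   # negatively worded items
    return total * 2.5


# Hypothetical participant who is broadly positive about an exoskeleton.
print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```

Because the even-numbered items are negatively worded, a participant who answers 3 to every question lands at exactly 50, not at a neutral zero.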

Societal Impact

Safety & Trust

Safety and trust remain top concerns as embodied AI enters homes, workplaces, and public spaces. Many people worry about job loss, especially in roles that involve repetitive tasks. Economic risks include job displacement and growing inequality, as only some groups can access advanced AI systems. Over-reliance on embodied AI may weaken human connections, leading to social isolation. In healthcare, patients sometimes feel uneasy when AI agents replace human providers. Cultural attitudes also shape trust. Some communities accept robots more easily, while others hesitate. Weak legal frameworks and unclear rules make it hard to hold AI systems accountable. These factors combine to create uncertainty about public safety and trust in embodied AI.

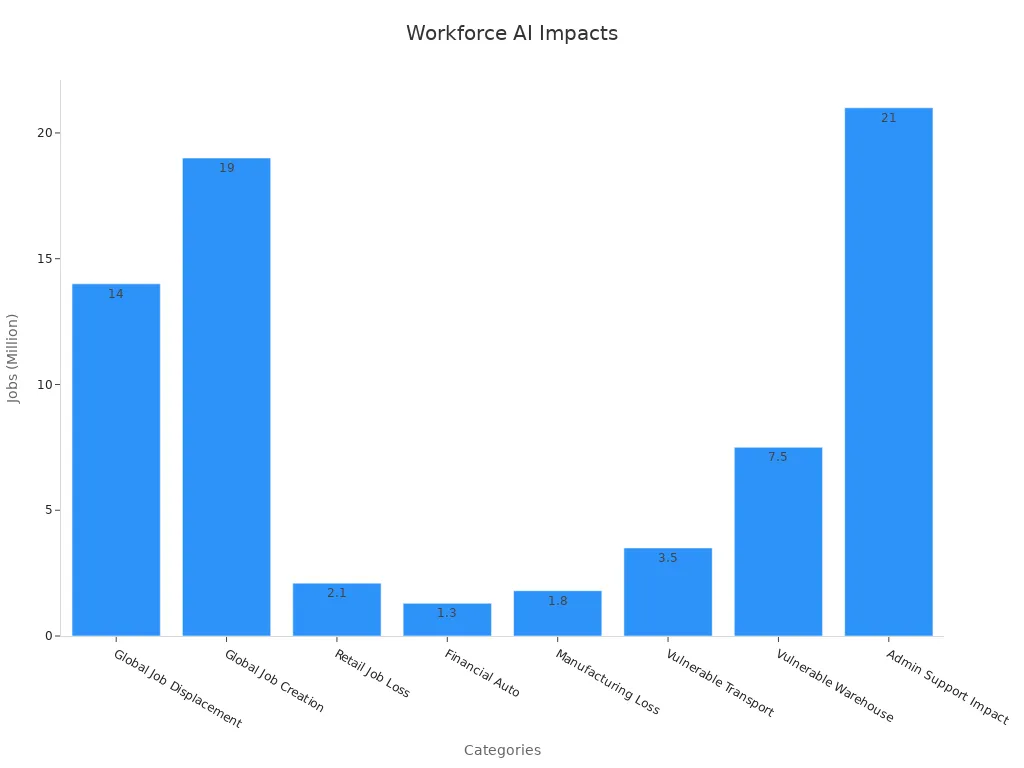

Workforce Change

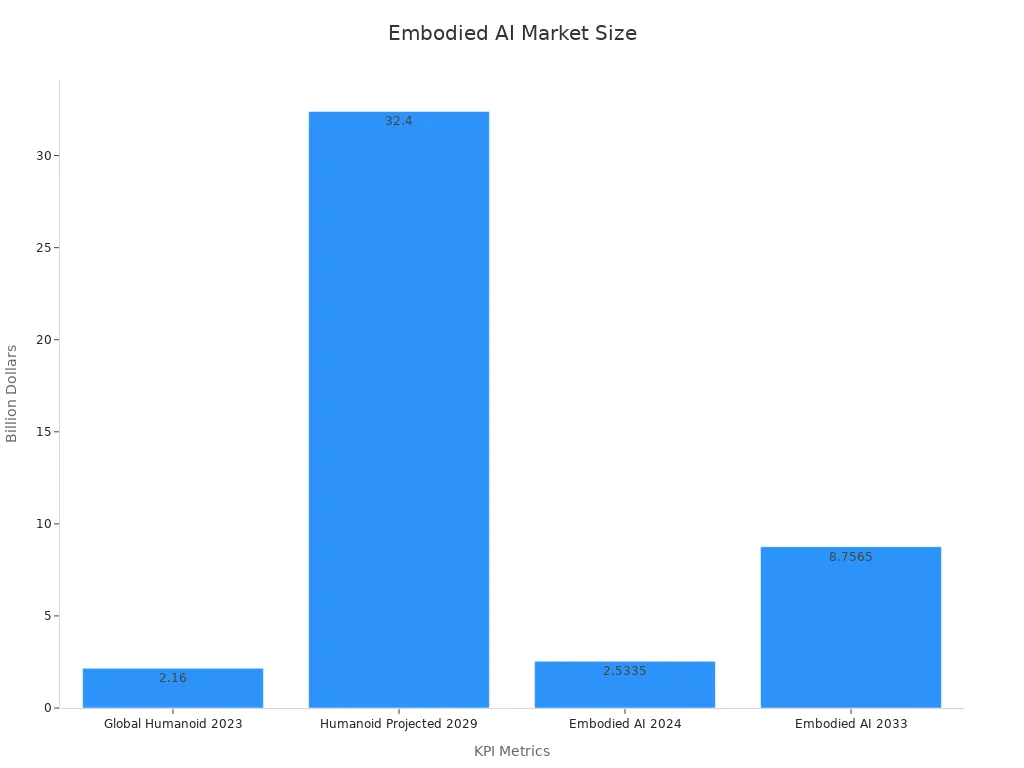

The workforce faces rapid change as embodied ai spreads across industries. Businesses use ai to boost efficiency and productivity. By 2025, ai will remove many jobs in data entry, assembly, and customer service. At the same time, new roles will appear in ai development, data science, and robotics. Workers need to learn new skills to keep up. Some groups, like older adults and those without college degrees, face higher risks of job loss and slower re-employment. The chart below shows how different sectors experience job changes due to ai:

Companies report that AI simplifies daily tasks and helps with decision-making. However, up to 30% of the global workforce may need to switch careers by 2030. This shift brings both challenges and opportunities.

Ethics & Privacy

Ethics and privacy shape how society adopts embodied AI. People want to know who controls their data and how robots use personal information. In mental health and social care, some worry that AI agents may not show enough empathy or respect privacy. Laws and regulations differ by country. Some nations set strict rules for AI use, while others move more slowly. Global cooperation grows as governments and organizations work to create fair and safe standards. Human-robot interaction raises new questions about consent, transparency, and accountability. Society must balance innovation with respect for individual rights.

Future of Embodied AI

Trends Ahead

The future of embodied AI promises rapid growth and new possibilities. Experts expect robots to gain general knowledge and perform multi-step tasks by connecting with large language models. This integration will allow robots to ask questions and learn new skills quickly. The industry also moves toward horizontal robotics platforms, similar to smartphone ecosystems. These platforms could standardize hardware and software, making innovation faster and more affordable.

Robots will become a normal part of daily life. People will see specialized robots in supermarkets, hospitals, and homes. These machines will blend into the background, much like smartphones today. Breakthroughs in robot hands and legged robots will bring human-level dexterity and agility. Swarm robotics will enable groups of robots to work together on complex tasks. Human augmentation will advance, with AI-powered exoskeletons and brain-computer interfaces offering seamless support and remote telepresence. Ethical frameworks and open datasets continue to guide responsible development. For example:

- Robots with general knowledge will use large language models to perform new tasks.
- Robotics platforms will standardize tools and speed up progress.
- Specialized robots will appear in everyday places, becoming as common as smartphones.
- Dexterous robot hands and agile legged robots will match or surpass human abilities.
- Swarm robotics will handle complex group tasks.
- Human augmentation, such as exoskeletons and brain-computer interfaces, will improve assistance and telepresence.
- Open datasets and ethical partnerships will shape safe and fair growth.
- Sanctuary AI’s Phoenix robot completed 110 tasks in one week, showing real progress.
- Brain-computer interfaces for telepresence robots may arrive within a decade.

Challenges

Despite these advances, embodied AI faces many challenges. Recent studies highlight technical, ethical, and practical obstacles. The table below summarizes key issues and their impact:

| Challenge Category | Description and Quantification Examples |
|---|---|
| Modality Misalignment in LVLMs | Incorrect image-text matching, errors in classification and object detection. |
| Temporal Reasoning | Difficulty linking event sequences, such as actions in videos. |
| Adversarial Multimodal Noise | Small changes in input cause misclassification, reducing reliability. |
| Bias and Fairness | Training data biases lead to unfair outcomes in human-robot interaction. |
| Robustness to Distribution Shifts | Performance drops in new or changing environments, affecting safety. |
| Ambiguity and Uncertainty | Overconfident outputs despite unclear inputs, risking unsafe actions. |
| Lack of Explainability | Black-box systems make error diagnosis and trust difficult, especially in safety-critical uses. |
| Scalability and Computational Constraints | High computational needs limit real-time use and large-scale deployment. |
| Security Vulnerabilities | Attacks on input data can cause recognition and decision failures. |
| Transfer from Simulation to Real World | Policies trained in simulation often fail in real environments. |
| Multi-Agent Collaboration | Communication and coordination among different robots remain complex. |
| Low-Level Control Limitations | Language models plan well but struggle with precise navigation and manipulation. |

Researchers continue to address these barriers. They develop new methods to improve reliability, fairness, and safety. The field of embodied AI will need ongoing collaboration and innovation to overcome these hurdles.
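As one illustration of how the "Ambiguity and Uncertainty" row might be handled in practice, the sketch below has a robot defer to a human when its action scores are too evenly spread. The action names, the entropy threshold, and the `ask_for_help` fallback are assumptions made for this example; entropy gating is a simple heuristic and does not by itself fix a model whose scores are miscalibrated.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities (shifted for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def normalized_entropy(probs: Sequence[float]) -> float:
    """Entropy scaled to [0, 1]: 0 is fully certain, 1 is uniform."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return entropy / math.log(len(probs))


def choose_action(logits: Sequence[float], actions: Sequence[str],
                  max_entropy: float = 0.5) -> str:
    """Pick the top action, or defer when the distribution is too flat."""
    probs = softmax(logits)
    if normalized_entropy(probs) > max_entropy:
        return "ask_for_help"
    return actions[probs.index(max(probs))]


actions = ["grasp", "push", "wait"]
print(choose_action([5.0, 0.0, 0.0], actions))  # one action clearly preferred
print(choose_action([1.0, 1.0, 1.0], actions))  # no clear preference
```

The threshold trades autonomy against caution: lowering `max_entropy` makes the robot ask for help more often, which may be the safer default in safety-critical settings.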

The breakthroughs of 2025 have reshaped technology and society.

- Economic and labor transformation signals new policies and job roles.
- Social and cultural shifts emerge as education and daily life adapt.
- Governance, medical innovation, and security evolve with new frameworks.

| Category | Key Metrics & Impact |
|---|---|
| Financial Performance | Revenue growth, margin improvements, ROI increases (Nubank, Adobe) |
| Operational Efficiency | Cycle time reduction (30-60%), productivity gains (+15-25%), defect reduction (30-50%) |
| Innovation & Adaptability | Faster time-to-market (30-50%), increased experimentation (+10,000%), adaptive response (+40-60%) |
| Customer Impact | NPS improvement (+15-30), churn reduction (20-40%), market share growth (1.5-2.5% annually) |
| Organizational Capability | Productivity (+20-35%), faster learning (2-3x), improved decision outcomes (+15-30%) |

The rapid growth of embodied AI markets and new commercial products highlights the need for everyone to stay informed. As industries change, people face both opportunities and challenges. The future promises innovation, improved well-being, and new ways to connect.