Use ChatGPT AI detector tools with care, because misleading accuracy claims can cause real harm.

- Companies have claimed 98% accuracy for GPT detectors, but tests revealed only 53% accuracy.
- Some AI detection tools now report over 99% accuracy, yet false positives still occur, and ChatGPT-generated text can still be misclassified.

Always combine your own judgment with what ChatGPT, GPT, or any detector reports.

Key Takeaways

- Use multiple AI detection tools and always review their results yourself to avoid mistakes like false positives or negatives.
- Combine AI detector results with your own judgment and human review to catch errors and understand the context better.
- Prepare and edit your text before checking it with AI detectors to reduce the chance of being wrongly flagged.

Common Mistakes with ChatGPT AI Detector

Over-Relying on ChatGPT Detectors

You might think that a ChatGPT AI detector gives you a clear answer every time. In reality, depending only on ChatGPT detectors can lead to many mistakes. These tools often miss the context of a draft or misinterpret the intent behind the writing. For example, if you paste a draft from ChatGPT without editing it, the detector may flag it as AI-generated content, even if you later add your own ideas.

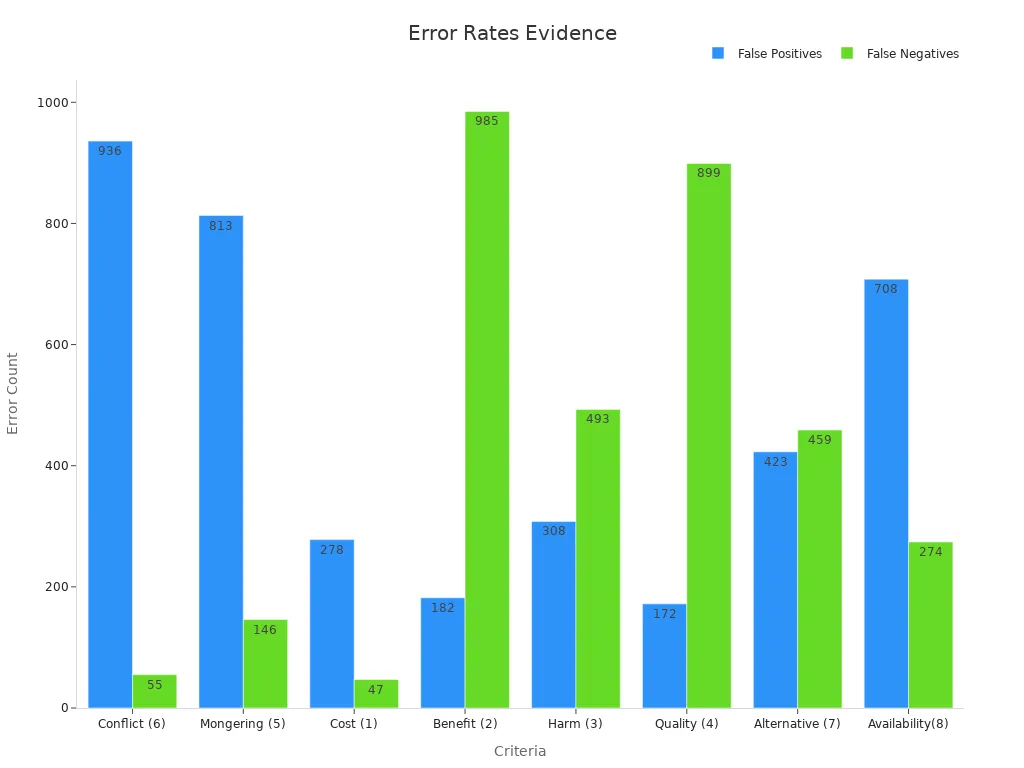

The numbers show why you should not trust a single GPT detection tool. The table below shows how often ChatGPT detection tools make errors, broken down by criterion: false positives (flagging human-written text as AI) and false negatives (missing actual AI-generated content).

| Criterion | False Positives (FP) | False Negatives (FN) | Notes on Errors and Impact on Accuracy |
|---|---|---|---|
| Conflict (6) | 936 | 55 | High FP indicates many incorrect conflict disclosures missed, leading to misclassification |
| Mongering (5) | 813 | 146 | Large FP and FN show frequent misjudgments in sensationalism detection |
| Cost (1) | 278 | 47 | Errors include misinterpretation of costs, affecting accuracy |
| Benefit (2) | 182 | 985 | High FN suggests failure to recognize valid benefits cited |
| Harm (3) | 308 | 493 | Misclassification of harms and side effects noted |
| Quality (4) | 172 | 899 | Overlooking study limitations and evidence quality |
| Alternative (7) | 423 | 459 | Errors in recognizing alternative strategies |
| Availability (8) | 708 | 274 | Misunderstanding availability of treatments |

This table makes it clear: ChatGPT detectors can make hundreds of mistakes in a single batch of drafts. To avoid detection errors, use more than one tool and always check the results yourself.
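To make the scale of these errors concrete, the counts in the table can be tallied with a few lines of Python. This is only an arithmetic sketch: the numbers are copied from the table, while the names `ERROR_COUNTS` and `summarize` are illustrative and not part of any detector.

```python
# Counts copied from the table above: (criterion, false positives, false negatives).
ERROR_COUNTS = [
    ("Conflict", 936, 55),
    ("Mongering", 813, 146),
    ("Cost", 278, 47),
    ("Benefit", 182, 985),
    ("Harm", 308, 493),
    ("Quality", 172, 899),
    ("Alternative", 423, 459),
    ("Availability", 708, 274),
]


def summarize(rows):
    """Return total FP, total FN, and the dominant error type per criterion."""
    total_fp = sum(fp for _, fp, _ in rows)
    total_fn = sum(fn for _, _, fn in rows)
    dominant = {name: ("FP" if fp > fn else "FN") for name, fp, fn in rows}
    return total_fp, total_fn, dominant


total_fp, total_fn, dominant = summarize(ERROR_COUNTS)
print(f"Total false positives: {total_fp}")  # 3820
print(f"Total false negatives: {total_fn}")  # 3358
for name, kind in dominant.items():
    print(f"{name}: mostly {kind}")
```

The totals show thousands of errors in each direction, and the dominant error type changes from one criterion to the next, which is one reason a single score from a single tool is hard to interpret.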

Misreading Detection Results

You may see a red or yellow flag from a GPT detection tool and think it means your draft is low quality or that you are cheating. This is a common misunderstanding. The color codes and scores only show the likelihood that the text is AI-generated, not whether the writing is good or bad.

Many students and teachers misinterpret these results. For example:

- Non-native English speakers often get flagged by ChatGPT AI detector tools because their drafts use simple words and grammar, which look similar to AI-generated content.
- Surveys show that 20% of students have been wrongly accused of using ChatGPT or other GPT tools.
- Black students are twice as likely as white or Latino students to be flagged for AI-generated content, showing a bias in some ChatGPT detectors.
- Some students try to avoid ChatGPT detection by adding typos or running their drafts through several GPT detection tools before submitting.

To avoid ChatGPT detection mistakes, always read the results carefully. Do not panic if you see a warning. Instead, review your draft and look for ways to add your own voice and ideas.

Ignoring Human Review

Never skip human review when using ChatGPT AI detector tools. AI can spot patterns, but it cannot always understand the meaning or purpose behind a draft. Human reviewers can catch errors, bias, or context that GPT detection tools miss.

Here are some real-world examples that show why human review matters:

- In healthcare, AI tools sometimes misjudge risk for Black patients, leading to unfair treatment.
- In finance, the 2010 Flash Crash happened because algorithms made decisions without human checks.
- In legal systems, AI tools have wrongly flagged people based on biased data.

A study found that when AI reviews on its own, 27% of candidates would be selected by the AI but rejected by humans. Adding human review reduces these errors and helps ensure fair results.

Skipping Text Preparation

If you copy and paste a draft from ChatGPT or another GPT tool without editing, you increase the risk of being flagged by a ChatGPT AI detector. Many GPT detection tools look for repetitive phrases, lack of personal voice, or unnatural sentence structure. Always review your draft before submitting. Add your own ideas, check for errors, and make sure your writing sounds like you.

Tip: Read your draft out loud. If it sounds robotic or too formal, try rewriting some sentences in your own words. This lowers the chance of being flagged and makes your work more authentic.

Failing to prepare your text can also lead to false positives. Even if your draft is human-written, heavy use of AI-powered tools like Grammarly can trigger a detector. Always check your draft for these issues before you submit.
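One of the checks mentioned above, looking for repetitive phrases before you submit, can be sketched in Python. This is a rough self-editing aid, not how any named detector works; the function name `repeated_phrases` and its parameters `n` and `min_count` are assumptions chosen for illustration.

```python
import re
from collections import Counter


def repeated_phrases(text, n=3, min_count=2):
    """Return word n-grams that occur at least min_count times in text.

    Note: n-grams are counted across sentence boundaries, which is a
    simplification that keeps the sketch short.
    """
    words = re.findall(r"[a-z']+", text.lower())
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return {phrase: c for phrase, c in counts.items() if c >= min_count}


# Hypothetical draft with an overused opener.
draft = (
    "It is important to note that the results vary. "
    "It is important to note that tools disagree."
)
for phrase, count in repeated_phrases(draft).items():
    print(f"{count}x  {phrase}")
```

Phrases that show up in the output are candidates for rewriting in your own words. A clean result does not mean a detector will pass the draft.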

Best Practices for Detecting AI-Generated Content

Choose Reliable ChatGPT Detectors

Start with the right tools when you want to spot AI writing. Not all ChatGPT detection tools work the same way. Some focus on accuracy, while others offer more features or support different languages. Look for AI content detectors that show clear results and have a strong track record.

Here is a table comparing some popular GPT detection tools and their reliability:

| Tool | Claimed Accuracy | False Positive Rate | Key Reliability Criteria and Features |
|---|---|---|---|
| GPTZero | Up to 99% (96% for mixed texts) | N/A | Uses perplexity and burstiness; Chrome/Docs extensions; plagiarism detection; popular with educators |
| Copyleaks | Over 99% | N/A | Combines AI logic with plagiarism scanning; supports 30+ languages |
| Originality.ai | Up to 99% | Under 1% to 3% | Multiple detection modes; multilingual; plagiarism and fact-checking; low false positives |
| Pangram | Most reliable; outperforms experts | N/A | Detects multiple LLMs; resists humanizer tech; supports 20 languages; third-party verified |
| ZeroGPT | 98–99% | N/A | DeepAnalyse™ algorithm; analyzes structure, perplexity, burstiness; summarizer and paraphraser included |
| Smodin | 91% AI detection; 99% human | N/A | Supports 100+ languages; rewriting, summarizing, plagiarism checking; less robust on paraphrased text |
| QuillBot | 78–80% (varies with edits) | N/A | Good on full AI text; less reliable on edited content |

Choose a GPT detection tool that matches your needs. Pangram, for example, receives praise from users and experts for its high accuracy and minimal false positives, and many users say it even outperforms trained human experts. When you select a tool, check whether it can detect outputs from different GPT models, supports multiple languages, and resists tricks like humanizer technology. Reliable tools help you catch cheaters and maintain fairness.

Cross-Check and Verify

Never trust just one GPT detection result. Each tool uses its own methods, so results can vary. To improve detection accuracy, cross-check your draft with several ChatGPT detection tools. This approach helps you avoid mistakes like false positives or negatives.

Tip: Use at least two or three GPT detection tools for every draft. Compare their results and look for patterns.

Researchers find that combining evidence from different sources, such as text, tables, and images, leads to better fact-checking. You can apply this idea by checking your draft with multiple tools and reviewing the context. This step-by-step process helps you tell whether something was written by an AI writing tool or a human. Experts call this the “Swiss cheese” approach: layering different checks to cover the gaps in each tool.
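The cross-checking idea can be sketched in Python as a small aggregation step. Everything here is an assumption for illustration: the detector names are placeholders, the scores are assumed to be AI-likelihood values between 0.0 and 1.0 that you copied from each tool by hand, and the thresholds `flag_threshold` and `max_spread` are arbitrary rather than values published by any tool.

```python
from statistics import mean


def cross_check(scores, flag_threshold=0.5, max_spread=0.3):
    """Combine AI-likelihood scores (0.0 to 1.0) from several detectors.

    scores maps a detector name to its score. The thresholds are
    illustrative assumptions, not values from any real tool.
    """
    if len(scores) < 2:
        raise ValueError("cross-checking needs at least two detector scores")
    values = list(scores.values())
    spread = max(values) - min(values)
    flagged = [name for name, score in scores.items() if score >= flag_threshold]
    if spread > max_spread:
        verdict = "detectors disagree: human review needed"
    elif len(flagged) == len(values):
        verdict = "all detectors flag the text: human review before any action"
    elif not flagged:
        verdict = "no detector flags the text"
    else:
        verdict = "mixed result: human review needed"
    return {
        "mean": round(mean(values), 2),
        "spread": round(spread, 2),
        "flagged": flagged,
        "verdict": verdict,
    }


# Hypothetical scores entered by hand from three different tools.
result = cross_check({"detector_a": 0.91, "detector_b": 0.35, "detector_c": 0.88})
print(result["verdict"])
```

The design choice worth noting is that disagreement between tools is treated as a signal in its own right. A wide spread routes the draft to a human instead of averaging the conflict away.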

You should also review the technical metrics that measure how well GPT detection tools work. Here are some key metrics:

| Metric Category | Model Type | Key Metrics | What It Measures |
|---|---|---|---|
| Technical Metrics (Direct) | Classification Models | Precision, Recall, F1 Score, False Positive Rate | Measures accuracy of predicted labels against actual labels, balancing false positives and false negatives |
| Technical Metrics (Direct) | Regression Models | RMSE, Squared Error, MAE | Measures deviation between predicted and actual continuous values, emphasizing error magnitude |
| Technical Metrics (Direct) | Any AI Model | Accuracy, Model Loss, AUC-ROC, Confusion Matrix | Overall model performance and prediction confidence |

You should look for tools that share these metrics. This transparency helps you understand how reliable the results are and supports authenticity checks.
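For the classification metrics in the table, the standard formulas can be written out in a short Python sketch. The function name `classification_metrics` and the evaluation counts are hypothetical, and "positive" is assumed to mean "flagged as AI-generated".

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute standard metrics from confusion-matrix counts.

    tp: AI text correctly flagged      fp: human text wrongly flagged
    tn: human text correctly passed    fn: AI text that was missed
    """
    def ratio(numerator, denominator):
        # Guard against division by zero when a class is empty.
        return numerator / denominator if denominator else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
        "false_positive_rate": ratio(fp, fp + tn),
    }


# Hypothetical evaluation: 100 AI-written and 100 human-written drafts.
metrics = classification_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this made-up example accuracy is 92.5%, yet 5 of every 100 human-written drafts are still wrongly flagged. This is why the false positive rate deserves attention alongside a headline accuracy figure.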

Combine AI and Human Judgment

You cannot rely on GPT detection tools alone. AI can flag suspicious drafts, but only you can judge the real writing style and context. When you combine AI results with your own review, you increase your chances of catching AI-generated content and avoiding mistakes.

A recent study shows that when people and AI work together, detection reliability improves. The correlation between AI and human assessments rises, which means you get more accurate results. Read flagged drafts carefully. Look for telltale signs like repetitive phrasing, lack of personal voice, or unnatural sentence structure. These clues help you spot AI writing that a tool might miss.

Note: Always trust your instincts. If a draft feels off, review it again. AI can help, but your judgment matters most.

You should also update your skills as AI detection techniques evolve. New GPT models and AI writing tool updates can change how AI-generated content looks. Stay informed about the latest detection tools and best practices.

Detect AI Writing Ethically

You must use GPT detection tools responsibly. Ethical guidelines stress the need for transparency, fairness, and respect for privacy. When you check a draft, explain your process and avoid making unfair accusations. Always protect the privacy of writers and respect intellectual property.

Responsible detection means you do not use GPT detection to punish or embarrass someone. Instead, you use it to support learning and maintain trust. Many organizations now use ethical frameworks that focus on human dignity and personal integrity. Follow these values when you detect AI writing.

Remember: Ethical detection builds trust. Always combine accuracy with fairness and accountability.

You should also keep your detection tools updated. New GPT models appear often, so you need the latest tools to keep up. Regular updates help you maintain high detection accuracy and catch new types of AI-generated content.

You achieve the best results when you balance AI detection tools with your own judgment. AI speeds up your work, but your insight ensures quality and fairness. Studies show a 40% improvement in quality and a 23% rise in satisfaction when you combine both.

| Benefit | Statistic |
|---|---|
| Quality Improvement | 40% higher |
| Satisfaction Increase | 23% increase |

Stay ethical, update your tools, and always review results carefully.

FAQ

How accurate are ChatGPT AI detectors?

Many detectors claim accuracy of 90% or higher, but real-world results can be lower, and false positives and negatives still happen. Always double-check results for important decisions.

Can AI detectors identify edited or paraphrased AI text?

You may find that detectors struggle with heavily edited or paraphrased text. Human review helps spot subtle AI writing patterns that tools might miss.

What should you do if a detector flags your human-written work?

Stay calm. Review your draft for repetitive phrases or unnatural language. Add personal details or examples. Ask a teacher or expert for a second opinion.