What is GPT-5.3 “Garlic”?

GPT-5.3, internally codenamed “Garlic,” is the rumored next-generation iteration of OpenAI’s flagship language model series, positioned as a high-density, ultra-efficient successor to the GPT-5 and GPT-5.2 line. Following an internal “Code Red” at OpenAI, reportedly triggered by the rapid advancement of Google’s Gemini 3 and Anthropic’s Claude 4.5, “Garlic” is said to represent a fundamental shift from “bigger is better” to “smarter and denser.”

- Release Timeline: Rumors point to a broad rollout in early 2026, following the limited enterprise launch of GPT-5.2 in late 2025.
- Key Technical Edge: It reportedly uses Enhanced Pre-Training Efficiency (EPTE) and high-density training to pack “GPT-6 level” reasoning into a smaller, faster architecture.
- Performance Benchmarks: Internal leaks suggest it outperforms competitors in complex coding, multi-step logical reasoning, and long-context retention.
- Major Features: Rumored features include a 400,000-token context window, native agentic reasoning tokens, and a drastically reduced hallucination rate.
- The “Garlic” Vision: The codename reflects a model that is “small but powerful,” designed to be a highly concentrated “flavor” of intelligence that can run more cost-effectively than previous massive models.

The 2026 AI Arms Race: Why “Garlic” Matters Now

The artificial intelligence landscape in early 2026 is significantly more crowded than it was just a year ago. With Google’s Gemini 3 dominating multimodal benchmarks and Anthropic’s Claude 4.5 becoming the darling of enterprise software development, OpenAI found itself in a defensive position. Project “Garlic” is the strategic counter-move.

Unlike previous updates that focused primarily on increasing parameter counts, GPT-5.3 is built on the philosophy of computational efficiency. The goal is no longer just to build a bigger brain, but to build a more “wrinkled” one—increasing the density of connections and the quality of the data used during the pre-training phase.

Decoding the Codename: From “Strawberry” to “Garlic”

OpenAI has a history of using food-based codenames for its internal milestones. We saw “Strawberry” (which became the o1 reasoning series) and “Lemon.” The choice of “Garlic” is reportedly a nod to the model's architecture.

- Concentrated Power: Just as a small clove of garlic can define the flavor of an entire dish, this model is designed to provide massive intelligence without requiring the massive compute overhead of earlier models.
- Efficiency First: It signals a departure from the “Shallotpeat” project, which was rumored to be a larger, more cumbersome model that faced scaling challenges.
- Versatility: Garlic is intended to be the foundation for everything from mobile-integrated assistants to enterprise-grade autonomous agents.

High-Density Training: The Secret Sauce

The most significant technical breakthrough associated with GPT-5.3 is High-Density Training. In the past, LLMs were trained on trillions of tokens from the open web, much of which was “noisy” or low-quality.

- Curated Data Pipelines: Garlic was reportedly trained on a much smaller but far more carefully curated dataset consisting of verified scientific papers, high-quality code repositories, and synthetic data generated by previous reasoning models.
- Parameter Efficiency: By focusing on data quality over quantity, OpenAI has reportedly achieved reasoning scores that exceed GPT-5 while potentially reducing inference cost.
- Auto-Routing Reasoning: The model features an internal “auto-router” that estimates the complexity of a prompt. For simple tasks, it uses a lightning-fast “reflex” mode; for complex problems, it automatically engages deeper reasoning tokens.
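OpenAI has not published how Garlic’s auto-router works. As a purely hypothetical illustration of the idea, the sketch below scores a prompt with a few crude heuristics (length, reasoning cue words, embedded code, inline arithmetic) and picks a “reflex” or “reasoning” path. The names `complexity_score` and `route_prompt`, the cue list, and the threshold are all invented for this example; a production router would more plausibly be a learned classifier.

```python
import re
from dataclasses import dataclass

# Hypothetical cue words suggesting multi-step reasoning (illustrative only).
REASONING_CUES = ("prove", "derive", "step by step", "debug", "optimize", "why")


@dataclass
class RouteDecision:
    mode: str     # "reflex" or "reasoning"
    score: float  # crude complexity score that drove the decision


def complexity_score(prompt: str) -> float:
    """Crude, hypothetical complexity heuristic; not OpenAI's method."""
    text = prompt.lower()
    score = min(len(text.split()) / 200, 1.0)  # longer prompts score higher, capped at 1.0
    score += sum(cue in text for cue in REASONING_CUES) * 0.5  # substring match per cue
    if "```" in prompt:  # prompt embeds a code block
        score += 0.5
    if re.search(r"\d+\s*[-+*/^]\s*\d+", text):  # inline arithmetic like "12 * 7"
        score += 0.25
    return score


def route_prompt(prompt: str, threshold: float = 0.5) -> RouteDecision:
    """Send low-scoring prompts to a fast path and high-scoring ones to a reasoning path."""
    score = complexity_score(prompt)
    mode = "reasoning" if score >= threshold else "reflex"
    return RouteDecision(mode=mode, score=score)


if __name__ == "__main__":
    print(route_prompt("What is the capital of France?").mode)
    print(route_prompt("Debug this function and explain why it fails step by step.").mode)
```

The first prompt stays on the fast path; the second trips three cue words and is routed to the reasoning path.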

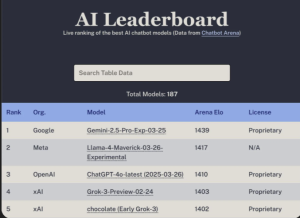

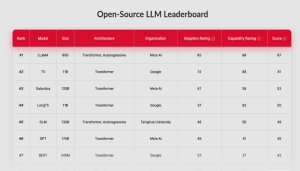

Benchmarking the Giant: Garlic vs. The Competition

Leaked internal evaluations suggest that GPT-5.3 is reclaiming the lead in the “Big Three” AI war.

| Metric | GPT-5.3 (Garlic) | Google Gemini 3 | Claude 4.5 |
|---|---|---|---|
| Knowledge work (GDPval) | 70.9% | 53.3% | 59.6% |
| Coding (HumanEval+) | 94.2% | 89.1% | 91.5% |
| Context Window | 400K tokens | 1M tokens | 200K tokens |
| Inference Speed | Ultra-Fast | Moderate | Fast |

While Google still holds the crown for raw context-window length, GPT-5.3 reportedly focuses on contextual accuracy: the ability to find a specific “needle in a haystack” within its 400K-token window with 99.9% reliability.
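No methodology has been published for the 99.9% figure. For readers who want to run this kind of test themselves, a needle-in-a-haystack evaluation generally works like the sketch below: hide one fact at several relative depths inside filler text, ask the model to retrieve it, and count how often the answer contains it. `ask_model` is a hypothetical stand-in for whatever model call you use; here it is a trivial string-search stub so the harness runs offline.

```python
from typing import Callable


def build_haystack(needle: str, filler: str, n_sentences: int, depth: float) -> str:
    """Insert `needle` at a relative depth (0.0 = start, 1.0 = end) among repeated filler."""
    sentences = [filler] * n_sentences
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def needle_recall(
    ask_model: Callable[[str, str], str],
    secret: str,
    depths: list[float],
    n_sentences: int = 1000,
) -> float:
    """Fraction of depths at which the model's answer contains the secret."""
    needle = f"The secret passphrase is {secret}."
    question = "What is the secret passphrase?"
    hits = 0
    for depth in depths:
        context = build_haystack(needle, "The sky was a uniform grey that day.", n_sentences, depth)
        hits += secret in ask_model(context, question)
    return hits / len(depths)


def stub_model(context: str, question: str) -> str:
    """Hypothetical offline stand-in for a real model: finds the passphrase by string search."""
    marker = "The secret passphrase is "
    start = context.find(marker)
    if start == -1:
        return "I don't know."
    return context[start + len(marker):].split(".")[0]


if __name__ == "__main__":
    depths = [i / 10 for i in range(11)]
    print(needle_recall(stub_model, "amber-falcon-42", depths))  # prints 1.0 for the stub
```

Swapping `stub_model` for a real model call turns this into an actual retrieval test; scoring by substring containment is a deliberately lenient choice.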

Key Features of GPT-5.3

OpenAI has integrated several “agent-first” features into the Garlic architecture to move beyond simple chat interactions.

- Massive Output Capacity: Rumors suggest a 128,000-token output limit, allowing the model to generate entire software applications or 100-page technical manuals in a single pass.
- Native Tool-Calling: Unlike previous versions that required external “wrappers,” Garlic reportedly has a native understanding of APIs and software environments, making it far more reliable at executing multi-step tasks.
- Self-Verification Logic: Before delivering a response, the model performs a “hidden” reasoning step to check its own logic for contradictions, which should cut down on trivial slips such as miscounting the letter “r” in a word, the kind of error popularized by the “strawberry” test.
- Knowledge Cutoff: The training data reportedly includes information up to August 31, 2025, making it the most current model in the GPT-5 family.
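How Garlic’s “native” tool calling works has not been documented. In today’s function-calling APIs generally, the model emits a structured request (a tool name plus JSON arguments), and the application executes it and sends back the result. The sketch below shows the application side of that loop with a hypothetical JSON shape (`{"name": ..., "arguments": {...}}`) and made-up tools; it does not use any real OpenAI SDK call.

```python
import json
from typing import Any, Callable

# Registry of tools the application exposes to the model (hypothetical examples).
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def word_count(text: str) -> int:
    return len(text.split())


def dispatch(tool_call_json: str) -> str:
    """Execute one model-issued tool call and return a JSON result message.

    Assumes the hypothetical shape {"name": str, "arguments": {...}}.
    Errors are returned as data rather than raised, so the model can retry.
    """
    try:
        call = json.loads(tool_call_json)
        fn = TOOLS[call["name"]]  # KeyError for unknown tools or a missing "name"
        result = fn(**call.get("arguments", {}))  # TypeError for bad arguments
        return json.dumps({"ok": True, "result": result})
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return json.dumps({"ok": False, "error": f"{type(exc).__name__}: {exc}"})


if __name__ == "__main__":
    print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
    print(dispatch('{"name": "delete_everything", "arguments": {}}'))
```

Returning errors as structured data, instead of crashing, is what lets a multi-step agent recover from a malformed or unknown call.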

The Impact on Developers and Enterprises

For the tech industry, GPT-5.3 represents a shift toward autonomous coding. With its improved reasoning and massive context, developers can feed the model an entire codebase for refactoring.

- Infrastructure Optimization: Because the model is denser and more efficient, API costs are expected to drop for cached inputs, making it more viable for startups to build complex products.
- CI/CD Integration: The model is reportedly designed to sit inside deployment pipelines, automatically reviewing code, suggesting security patches, and writing documentation as the code is committed.
- Agentic Workflows: Rather than just answering questions, Garlic is reportedly built to act as a “Project Manager” that can delegate sub-tasks to smaller models (like o4-mini) and synthesize the results.
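The “Project Manager” role described above is a common orchestrator-worker pattern. The sketch below shows its skeleton using only the standard library: a planner splits a task into sub-tasks, workers run concurrently, and a synthesizer merges the results. `plan`, `small_model`, and `synthesize` are hypothetical placeholders; in a real system each would be a model call, for example the planner on a large model and the workers on a smaller one.

```python
from concurrent.futures import ThreadPoolExecutor


def plan(task: str) -> list[str]:
    """Hypothetical planner: a real system would ask the large model to decompose the task."""
    return [f"{step}: {task}" for step in ("write tests", "implement", "document")]


def small_model(subtask: str) -> str:
    """Hypothetical worker: a real system would call a cheaper model here."""
    return f"done[{subtask}]"


def synthesize(task: str, results: list[str]) -> str:
    """Hypothetical synthesis step: a real system would ask the large model to merge results."""
    return f"{task}\n" + "\n".join(f"- {r}" for r in results)


def orchestrate(task: str, max_workers: int = 4) -> str:
    subtasks = plan(task)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, so they line up with the subtasks.
        results = list(pool.map(small_model, subtasks))
    return synthesize(task, results)


if __name__ == "__main__":
    print(orchestrate("add rate limiting to the API"))
```

Threads suit this pattern because real worker calls would spend most of their time waiting on network I/O.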

Community Reactions and Skepticism

Despite the “Code Red” hype, the Reddit community (specifically r/OpenAI and r/singularity) remains divided.

- The Hype: Many believe Garlic is the precursor to “true” agentic AI: software that can actually do work rather than just talk about it.
- The Skepticism: Some users point out that we have entered a phase of “diminishing returns,” where the jump from 5.2 to 5.3 might be imperceptible to the average user even if the benchmarks look impressive.
- The “Fraud” Debate: As always, some critics argue that these incremental updates are a marketing strategy to keep OpenAI in the news while the company struggles to achieve the true “GPT-6” breakthrough.

How to Prepare for the GPT-5.3 Rollout

If you are a business owner or developer, you don't need to wait for the official release to start preparing.

- Structure Your Data: Since Garlic reportedly thrives on high-density, structured input, begin organizing your internal documentation and codebases into formats suited to Retrieval-Augmented Generation (RAG).
- Audit Your Current API Usage: If you are currently using GPT-4o or GPT-5.2, look for areas where latency is hurting your user experience. Garlic is reportedly designed to address the “slowness” of deep reasoning.
- Experiment with Agentic Frameworks: Start building small “agent” prototypes using LangChain or OpenAI’s Responses API (OpenAI has announced that the older Assistants API will be deprecated). If the rumored shift toward agentic tokens materializes in 5.3, these prototypes could become much more capable with little extra work.
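“RAG-friendly” usually means splitting documents into overlapping, self-describing chunks that a retriever can index. Here is a minimal, hypothetical sketch using only the standard library: it cuts text into word-based windows with overlap and attaches the source and position as metadata. The `Chunk` type, `chunk_document` name, and default sizes are illustrative assumptions, not recommendations for any particular model, and word counts are only a rough proxy for tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    source: str  # document path or ID
    index: int   # position of the chunk within the document
    text: str


def chunk_document(source: str, text: str, size: int = 200, overlap: int = 40) -> list[Chunk]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once a window reaches the end; later starts would fall inside the final overlap.
    starts = range(0, max(len(words) - overlap, 1), step)
    return [Chunk(source, i, " ".join(words[s:s + size])) for i, s in enumerate(starts)]


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_document("docs/readme.md", sample, size=4, overlap=1):
        print(chunk.index, chunk.text)
```

The overlap keeps a sentence that straddles a boundary retrievable from either neighboring chunk, at the cost of some duplicated storage.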

Conclusion: Is Garlic the Final Step Toward AGI?

While GPT-5.3 “Garlic” may not be Artificial General Intelligence (AGI) itself, it could represent one of the most significant steps toward autonomous functional intelligence so far. If it resolves the “Code Red” crisis by delivering a model that is both faster and smarter, OpenAI will have shown that the future of AI isn't just about scale; it's about the quality of thought.

Whether it’s called GPT-5.3, 5.5, or simply “Garlic,” this model is set to redefine what we expect from a digital assistant in 2026. It's no longer just a chatbot; it's a teammate.