How Three Generations of Claude Models Reveal the Rapid Acceleration of AI Development

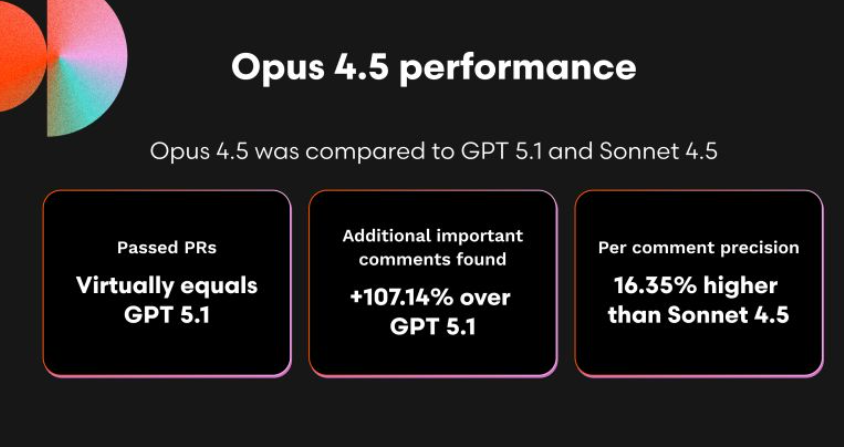

When Anthropic released Claude Opus 4.5 on November 24, 2025, it completed a remarkable trilogy of model releases spanning just two months. Claude Sonnet 4.5 arrived in late September, followed by Claude Haiku 4.5 in October, and finally Opus 4.5 in November. This rapid-fire release schedule represents more than aggressive product iteration—it reveals fundamental shifts in how AI capabilities evolve, how companies compete, and what these powerful models can actually accomplish.

But here's what makes this story particularly fascinating: for months, Anthropic had been in an awkward position where its mid-tier Sonnet 4.5 model often outperformed the older, more expensive Opus 4.1. This created a pricing paradox where users had little incentive to pay premium rates for the flagship model. Opus 4.5 needed to not just improve—it needed to restore the natural hierarchy of Anthropic's three-tier model family while justifying its existence in an increasingly competitive market.

The result is a model that Anthropic boldly claims is “the best in the world for coding, agents, and computer use.” But how does it actually compare to its siblings? Let's dive deep into the technical details, real-world performance, pricing dynamics, and practical implications of these three distinct approaches to AI capabilities.

The Model Family: Understanding the Three Tiers

Before comparing performance, it's essential to understand Anthropic's strategic positioning for each model tier.

Claude Haiku 4.5: Speed and Efficiency

The smallest and fastest model in the family, Haiku excels at tasks requiring quick responses and lower latency. Priced at $1 per million input tokens and $5 per million output tokens, it targets use cases where speed and cost matter more than maximum capability.

Claude Sonnet 4.5: The Balanced Powerhouse

Released in September 2025, Sonnet 4.5 quickly became Anthropic's most popular model. Priced at $3 per million input tokens and $15 per million output tokens, it offered what many developers considered the optimal balance of capability, speed, and cost. For months, it delivered performance that rivaled or exceeded the previous Opus 4.1 at a fraction of the price.

Claude Opus 4.5: Frontier Intelligence

The newest addition to the family, Opus 4.5 represents Anthropic's attempt to reclaim the crown after challenges from OpenAI's GPT-5.1-Codex-Max and Google's Gemini 3 Pro. At $5 per million input tokens and $25 per million output tokens, it sits at the premium tier—but notably costs just one-third of what Opus 4.1 charged.

Head-to-Head Performance Comparison

Coding Capabilities: Where Opus 4.5 Dominates

| Benchmark | Opus 4.5 | Sonnet 4.5 | Opus 4.1 | Significance |
|---|---|---|---|---|
| SWE-bench Verified | 80.9% | 77.2% | 74.5% | Real-world software engineering tasks |
| Terminal-bench 2.0 | 59.3% | 50.0% | 46.5% | Command-line coding proficiency |
| MCP Atlas (Scaled Tool Use) | 62.3% | 43.8% | 40.9% | Complex multi-tool workflows |
| OSWorld (Computer Use) | 66.3% | 61.4% | 44.4% | Desktop automation capabilities |

Opus 4.5 achieved 80.9% on SWE-bench Verified, outperforming Sonnet 4.5's 77.2% and Opus 4.1's 74.5%. This benchmark measures the ability to solve real-world software engineering problems—the exact tasks professional developers face daily.

The progression is striking: each model represents a meaningful step forward, with Opus 4.5 using dramatically fewer tokens than its predecessors to reach similar or better outcomes. This efficiency gain compounds at scale, making Opus 4.5 not just more capable but more cost-effective per successful task completion.

Agentic Workflows: The Hierarchy Restored

One of Opus 4.5's most significant advantages emerges in long-horizon, autonomous tasks—scenarios where AI agents work independently over extended periods.

| Capability | Opus 4.5 | Sonnet 4.5 | Opus 4.1 | Key Difference |
|---|---|---|---|---|
| Agentic Tool Use (τ2-bench-lite) | 88.9% | 86.2% | 86.8% | Retail scenario handling |
| Complex Tool Use (τ2-bench) | 98.2% | 98.0% | 71.5% | Telecom multi-step tasks |
| Token Efficiency | High | Medium | Low | Fewer tokens for same quality |
| Iteration Speed | 4 iterations | 6-8 iterations | 10+ iterations | Time to peak performance |

Anthropic emphasizes that Opus 4.5 reaches peak performance in just four iterations when refining its approach to complex tasks, while competing models require up to ten attempts to achieve similar quality.

This efficiency matters enormously for practical deployment. Fewer iterations mean faster results, lower costs, and more predictable performance.

Reasoning and Understanding: The Competitive Landscape

| Benchmark | Opus 4.5 | Sonnet 4.5 | Opus 4.1 | What It Measures |
|---|---|---|---|---|
| GPQA Diamond | 87.0% | 83.4% | 81.0% | Graduate-level reasoning |
| MMMU | 80.7% | 77.8% | 77.1% | Multimodal understanding |
| MMMLU | 90.8% | 89.1% | 89.5% | Multilingual Q&A |
| ARC-AGI-2 | 37.6% | 13.6% | — | Novel problem-solving |

The ARC-AGI-2 results reveal something particularly interesting about model capabilities. The benchmark tests novel problem-solving abilities that can't be memorized from training data, and here Opus 4.5 achieves 37.6% while Sonnet 4.5 scores just 13.6%. That is a gap of roughly 2.8x (37.6 / 13.6), which suggests Opus 4.5 possesses qualitatively different reasoning capabilities, not merely incremental improvements.

The Pricing Revolution: Making Frontier AI Accessible

Perhaps the most significant story in this model comparison isn't performance—it's economics.

Cost Comparison Across Generations

| Model | Input (per 1M tokens) | Output (per 1M tokens) | Cost vs. Opus 4.1 |
|---|---|---|---|
| Opus 4.1 | $15 | $75 | Baseline |
| Opus 4.5 | $5 | $25 | 67% reduction |
| Sonnet 4.5 | $3 | $15 | 80% reduction |
| Haiku 4.5 | $1 | $5 | 93% reduction |

The pricing is a big relief: $5 per million input tokens and $25 per million output tokens. This is a lot cheaper than the previous Opus at $15/$75.

This dramatic price reduction transforms Opus 4.5's value proposition. At $15/$75, Opus 4.1 was reserved for only the most critical tasks. At $5/$25, Opus 4.5 becomes viable for regular development work, code reviews, and complex problem-solving that previously would have defaulted to cheaper models.
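To make the per-request difference concrete, here is a minimal sketch that turns the table's per-million-token prices into a cost for a single request. The dictionary keys are informal labels for this article rather than official API model identifiers, and the 20,000-input / 4,000-output workload is a made-up example.

```python
# Per-million-token prices in USD (input, output), copied from the table above.
# The keys are informal labels, not official API model identifiers.
PRICES = {
    "opus-4.1": (15.0, 75.0),
    "opus-4.5": (5.0, 25.0),
    "sonnet-4.5": (3.0, 15.0),
    "haiku-4.5": (1.0, 5.0),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the headline cost in USD of one request, before any discounts."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical workload: 20,000 input tokens and 4,000 output tokens.
for model in PRICES:
    print(f"{model}: ${request_cost(model, 20_000, 4_000):.2f}")
```

For this hypothetical request the sketch gives $0.60 on Opus 4.1 against $0.20 on Opus 4.5, which is the one-third ratio the table implies.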

Additional Cost Savings

The headline pricing doesn't tell the full story. Anthropic offers additional mechanisms to reduce costs:

- Prompt Caching: Up to 90% cost savings for repeated queries using similar context

- Batch Processing: 50% cost reduction for non-time-sensitive workloads

- Token Efficiency: Opus 4.5 uses up to 65% fewer tokens than competing models while achieving higher pass rates on held-out tests

These compounding efficiencies mean real-world costs can be dramatically lower than headline pricing suggests.
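A rough way to see how these mechanisms stack is sketched below. It is a simplification under stated assumptions: the cached share of the input is billed at 10% of the normal input price (the "up to 90%" best case), any extra charge for writing to the cache is ignored, and the batch discount is assumed to apply on top of caching. The function and parameter names are illustrative only.

```python
def effective_cost(
    input_tokens: int,
    output_tokens: int,
    in_price: float,             # USD per million input tokens
    out_price: float,            # USD per million output tokens
    cached_fraction: float = 0.0,  # share of input served from cache (assumed)
    cache_discount: float = 0.90,  # best-case saving on cached input
    batch: bool = False,
    batch_discount: float = 0.50,
) -> float:
    """Estimate request cost in USD after caching and batch discounts."""
    cached = input_tokens * cached_fraction
    fresh = input_tokens - cached
    # Cached tokens are billed at the discounted rate, fresh tokens at full rate.
    billable_input = fresh + cached * (1 - cache_discount)
    total = (billable_input * in_price + output_tokens * out_price) / 1_000_000
    if batch:
        # Assumption: the batch discount stacks with the caching discount.
        total *= 1 - batch_discount
    return total


# Hypothetical Opus 4.5 request: 100k input tokens, 10k output tokens.
base = effective_cost(100_000, 10_000, 5.0, 25.0)
cached = effective_cost(100_000, 10_000, 5.0, 25.0, cached_fraction=0.8)
both = effective_cost(100_000, 10_000, 5.0, 25.0, cached_fraction=0.8, batch=True)
print(f"headline ${base:.3f}, cached ${cached:.3f}, cached+batch ${both:.3f}")
```

Under these assumptions the hypothetical request drops from $0.75 to about $0.39 with caching and about $0.195 with batching as well. Token efficiency would reduce `output_tokens` itself, which is why it compounds with the other two.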

Real-World Testing: What Users Actually Experience

Benchmarks provide objective comparisons, but they don't always predict real-world performance. Several fascinating experiments reveal how these models actually behave in practice.

Simon Willison's sqlite-utils Refactoring

Prominent technologist Simon Willison had early access to Opus 4.5 and put it through extensive real-world testing. Using Claude Code with Opus 4.5 resulted in a new alpha release of sqlite-utils that included several large-scale refactorings. Opus 4.5 was responsible for most of the work across 20 commits, 39 files changed, 2,022 additions and 1,173 deletions in a two-day period.

But here's where things get interesting. Willison's preview access expired mid-project, forcing him to switch back to Sonnet 4.5. The result? He kept on working at the same pace he'd been achieving with the new model.

This experience reveals something crucial about evaluating AI models: as Willison put it, production coding like this is a less effective way of evaluating the strengths of a new model than he had expected. For well-defined refactoring tasks with clear specifications, Sonnet 4.5's capabilities proved sufficient.

The Anthropic Hiring Test: Superhuman Performance

Perhaps the most striking demonstration of Opus 4.5's capabilities came from Anthropic's internal testing. Anthropic gave Opus 4.5 the same test it gives prospective performance engineering candidates who want to work at the company. This test, which focuses solely on technical ability, has a two-hour time limit, and Opus 4.5 scored higher than any of Anthropic's job candidates ever did.

Using parallel test-time compute—a technique that aggregates multiple attempts and selects the best result—Opus 4.5 exceeded human performance within the time constraint. Without time limits, it matched the best human candidate ever tested.

This raises profound questions about AI's role in software engineering. If frontier models can exceed human performance on technical assessments, what does that mean for the profession? Anthropic acknowledges the test doesn't measure collaboration, communication, or the intuition that develops over years of experience—but the technical capability threshold has clearly been crossed.
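The parallel test-time compute idea mentioned above, running several attempts and keeping the best, can be sketched as a generic best-of-n loop. This is only an illustration of the general technique: Anthropic's actual aggregation and scoring method is not described here, and `toy_attempt` and the identity scorer are stand-ins for a model call and a real grader.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, TypeVar

T = TypeVar("T")


def best_of_n(attempt: Callable[[int], T], score: Callable[[T], float], n: int) -> T:
    """Run n independent attempts in parallel and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    with ThreadPoolExecutor(max_workers=n) as pool:
        # Each attempt receives its index so it can vary its seed or prompt.
        candidates = list(pool.map(attempt, range(n)))
    return max(candidates, key=score)


def toy_attempt(seed: int) -> float:
    """Stand-in for one model attempt: returns a pseudo-random 'solution quality'."""
    return random.Random(seed).random()


best = best_of_n(toy_attempt, score=lambda quality: quality, n=8)
print(best)
```

The quality of the result depends heavily on the scorer: best-of-n only helps when there is a reliable way to tell a good attempt from a bad one, such as a test suite.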

Partner Feedback: Consistent Excellence

Early access partners provided remarkably consistent feedback about Opus 4.5:

GitHub reported that Opus 4.5 delivers high-quality code and excels at powering heavy-duty agentic workflows, with early testing showing it surpasses internal coding benchmarks while cutting token usage in half.

Cursor found the model especially well-suited for complex tasks like code migration and refactoring—scenarios where understanding system architecture matters as much as writing correct syntax.

Warp observed that Opus 4.5 delivered an impressive refactor spanning two codebases and three coordinated agents, being very thorough in helping develop a robust plan, handling the details and fixing tests.

The pattern across partner testimonials: Opus 4.5 shines specifically in scenarios that defeated or slowed down Sonnet 4.5.

Context and Capabilities: The Technical Details

Context Windows and Knowledge Cutoffs

| Model | Context Window | Output Limit | Knowledge Cutoff |
|---|---|---|---|
| Opus 4.5 | 200,000 tokens | 64,000 tokens | March 2025 |
| Sonnet 4.5 | 200,000 tokens | 64,000 tokens | January 2025 |
| Haiku 4.5 | 200,000 tokens | 64,000 tokens | February 2025 |

The core characteristics of Opus 4.5 are a 200,000 token context (same as Sonnet), 64,000 token output limit (also the same as Sonnet), and a March 2025 reliable knowledge cutoff (Sonnet 4.5 is January, Haiku 4.5 is February).

Interestingly, all three models share identical context window specifications. The differentiation comes from capability, not capacity—what the models can accomplish within that context, not how much they can hold.

The New Effort Parameter

Opus 4.5 has a new effort parameter which defaults to high but can be set to medium or low for faster responses. This allows developers to trade thoroughness for speed depending on the task at hand—use high effort for critical production code, medium for prototyping, and low for quick exploratory queries.

Partner feedback on this feature has been enthusiastic. In the words of one early tester: “The effort parameter is brilliant. Claude Opus 4.5 feels dynamic rather than overthinking, and at lower effort delivers the same quality needed while being dramatically more efficient.”
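One way an application might apply the effort guidance is a small lookup that maps the kind of work to an effort level. The sketch below deliberately stops short of the API call, because the exact request field for the effort setting is not given in this article. The task categories and the `choose_effort` helper are hypothetical names for illustration.

```python
# Mapping from task kind to effort level, following the guidance in the text:
# high for critical production code, medium for prototyping, low for quick queries.
EFFORT_BY_TASK = {
    "production": "high",
    "prototype": "medium",
    "exploratory": "low",
}


def choose_effort(task_kind: str) -> str:
    """Return an effort level for a task kind, falling back to the default 'high'."""
    return EFFORT_BY_TASK.get(task_kind, "high")


print(choose_effort("prototype"))    # medium
print(choose_effort("unknown-kind")) # high
```

Falling back to `high` mirrors the model's stated default, so an unrecognized task kind never silently gets a less thorough answer.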

Enhanced Computer Use

Opus 4.5 introduces improved computer control capabilities, including a zoom tool which can be provided to Opus 4.5 to allow it to request a zoomed in region of the screen to inspect. This enables more precise interaction with user interfaces, particularly useful for accessibility testing, UI automation, and detailed visual inspection tasks.

When to Choose Each Model: A Practical Guide

The three models serve distinct purposes, and understanding when to use each maximizes both performance and cost-effectiveness.

Choose Claude Opus 4.5 When You Need:

Complex, Multi-System Problems

Testers noted that Claude Opus 4.5 handles ambiguity and reasons about tradeoffs without hand-holding. They told Anthropic that, when pointed at a complex, multi-system bug, Opus 4.5 figures out the fix.

For problems spanning multiple codebases, requiring deep reasoning about system architecture, or involving intricate dependencies, Opus 4.5's superior reasoning capabilities justify the premium cost.

Autonomous Agent Workflows

Opus 4.5 excels at long-horizon, autonomous tasks, especially those that require sustained reasoning and multi-step execution. If you're building agents that work independently for extended periods—researching, planning, implementing, and testing—Opus 4.5's ability to maintain context and reason through complex workflows makes it the clear choice.

Critical Production Code

When code quality absolutely matters—production systems, security-critical components, or high-stakes refactoring—Opus 4.5's higher accuracy and more reliable execution reduce risk.

Document and Presentation Creation

Anthropic stresses that the model can now produce documents, spreadsheets and presentations with consistency, professional polish, and domain awareness. For enterprise knowledge workers creating client deliverables or strategic documents, Opus 4.5's step-change improvement justifies its use.

Choose Claude Sonnet 4.5 When You Need:

Everyday Development Tasks

For routine coding, documentation updates, code reviews, and other common development activities, Sonnet 4.5 delivers excellent results at significantly lower cost. Willison's experience demonstrates that well-defined refactoring projects work perfectly well with Sonnet.

Balanced Performance and Cost

At $3/$15 per million tokens, Sonnet provides 40% savings compared to Opus 4.5 while still delivering strong performance across most benchmarks. For projects with budget constraints or high-volume applications, this balance is optimal.

Rapid Iteration

Sonnet's slightly lower latency makes it ideal for interactive development where you're rapidly trying different approaches and need quick feedback.

Choose Claude Haiku 4.5 When You Need:

Speed-Critical Applications

For user-facing applications where latency matters more than maximum capability, Haiku's faster response times improve user experience.

High-Volume Processing

At $1/$5 per million tokens, Haiku makes economic sense for batch processing large volumes of data where individual task complexity is low.

Simple, Well-Defined Tasks

For straightforward queries, basic code generation, or simple transformations, Haiku's lower capability level is sufficient while delivering dramatic cost savings.
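The guide above can be condensed into a small routing function. This is a sketch under assumptions: the `Task` flags and the order of precedence (Opus criteria first, then Haiku, with Sonnet as the default) are one interpretation of the guidance, and the returned strings are informal tier labels rather than API model identifiers.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of a unit of work to route to a model tier."""
    multi_system: bool = False         # spans several codebases or systems
    long_running_agent: bool = False   # autonomous, long-horizon workflow
    production_critical: bool = False  # high-stakes or security-critical code
    latency_sensitive: bool = False    # user-facing, speed matters most
    high_volume: bool = False          # large batches of low-complexity items
    simple: bool = False               # straightforward, well-defined task


def pick_tier(task: Task) -> str:
    """Pick a tier label following the article's guidance (precedence assumed)."""
    if task.multi_system or task.long_running_agent or task.production_critical:
        return "opus-4.5"
    if task.latency_sensitive or task.high_volume or task.simple:
        return "haiku-4.5"
    # Everyday development work defaults to the balanced tier.
    return "sonnet-4.5"


print(pick_tier(Task(multi_system=True)))            # opus-4.5
print(pick_tier(Task(high_volume=True, simple=True)))  # haiku-4.5
print(pick_tier(Task()))                             # sonnet-4.5
```

In practice the flags would come from your own task metadata, and the precedence is worth revisiting once you know which tasks actually fail on the cheaper tiers.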

The Security Question: Prompt Injection Robustness

One critical dimension of comparison involves security, specifically resistance to prompt injection attacks—malicious instructions hidden in data sources that attempt to hijack the model's behavior.

With Opus 4.5, Anthropic made substantial progress in robustness against prompt injection attacks, which smuggle in deceptive instructions to fool the model into harmful behavior. Opus 4.5 is harder to trick with prompt injection than any other frontier model in the industry.

However, Willison raises an important caveat: single attempts at prompt injection still succeed 1 time in 20, and if an attacker can try ten different attacks, that success rate goes up to 1 in 3.

This represents improvement over previous models, but the fundamental challenge remains. As Willison notes, designing applications under the assumption that motivated attackers will find ways to trick models remains essential, regardless of which Claude model you choose.
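To see why repeated attempts matter so much, it helps to work through the arithmetic. The sketch below assumes each attempt succeeds independently with the same probability, which is a simplifying assumption and not something the cited figures claim.

```python
def success_within(k: int, p_single: float) -> float:
    """Chance that at least one of k independent attempts succeeds."""
    return 1 - (1 - p_single) ** k


p = 1 / 20  # single-attempt success rate cited above
print(f"{success_within(1, p):.3f}")   # 0.050
print(f"{success_within(10, p):.3f}")  # 0.401
```

Under independence, ten attempts would succeed about 40% of the time, somewhat above the cited figure of roughly 1 in 3. One plausible reading is that attacks which fail tend to fail together, so extra attempts help an attacker slightly less than the naive formula predicts. Either way the success rate climbs quickly with persistence, which is why the design advice above still applies.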

The Evaluation Challenge: Why Benchmarks Don't Tell the Whole Story

Willison's experience highlights a broader challenge in AI model evaluation. With hindsight, he found production coding like this to be a less effective way of evaluating the strengths of a new model than he had expected.

Traditional benchmarks measure performance on standardized tests, but they don't always predict which tasks will benefit from frontier capabilities. Willison suggests a different approach: concrete examples of tasks a new model can solve that the previous generation of models from the same provider could not handle (“Here's an example prompt which failed on Sonnet 4.5 but succeeds on Opus 4.5”) would excite users more than single-digit percentage improvements on benchmarks.

This insight matters for practical model selection. The question isn't “which model scores higher on GPQA Diamond” but “which specific tasks in my workflow does the premium model enable that the cheaper model cannot handle?”
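That question can be answered with a very small harness over your own tasks: record pass/fail per model, then list the tasks only the premium model solves. The sketch below assumes you already have such results. The task names and outcomes are invented for illustration and are not measurements.

```python
def newly_solved(
    results: dict[str, dict[str, bool]], cheaper: str, premium: str
) -> list[str]:
    """Return tasks that fail on the cheaper model but pass on the premium one."""
    cheap, prem = results[cheaper], results[premium]
    return sorted(
        task for task, passed in prem.items()
        if passed and not cheap.get(task, False)
    )


# Hypothetical pass/fail outcomes from your own workflow, not real measurements.
results = {
    "sonnet-4.5": {
        "rename-module": True,
        "cross-repo-migration": False,
        "flaky-test-fix": False,
    },
    "opus-4.5": {
        "rename-module": True,
        "cross-repo-migration": True,
        "flaky-test-fix": False,
    },
}

print(newly_solved(results, "sonnet-4.5", "opus-4.5"))  # ['cross-repo-migration']
```

If the list comes back empty for your workload, that is a reasonable signal the cheaper model is sufficient for now.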

Platform Integration and Availability

All three models are available across Anthropic's expanding ecosystem:

Consumer Applications:

- Claude apps (Pro, Max, Team, Enterprise plans)

- Claude Code (now available in desktop apps)

- Claude for Chrome (expanding to all Max users)

- Claude for Excel (available to Max, Team, Enterprise users)

Developer Platforms:

- Claude API

- Amazon Bedrock

- Google Cloud Vertex AI

- Microsoft Foundry

- GitHub Copilot (Opus 4.5 available to paid plans)

Usage Limits: Anthropic is updating usage limits to make sure users are able to use Opus 4.5 for daily work. These limits are specific to Opus 4.5. Max users now receive significantly more Opus usage than before—as much as they previously received for Sonnet.

Infinite Conversations: A particularly welcome improvement: lengthy conversations no longer hit context limits. Claude automatically summarizes earlier context as needed, allowing chats to continue indefinitely. This benefits all three models but particularly enhances Opus 4.5's utility for extended agentic workflows.
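The general idea behind automatic summarization of earlier context can be sketched as follows. This is not a description of how Claude implements it: the whitespace token counter, the `keep_recent` cutoff, and the placeholder summarizer are all assumptions chosen to keep the example self-contained.

```python
from typing import Callable


def compact(
    messages: list[str],
    budget: int,
    keep_recent: int,
    summarize: Callable[[list[str]], str],
    count_tokens: Callable[[str], int] = lambda text: len(text.split()),
) -> list[str]:
    """Replace older messages with one summary once the history exceeds budget."""
    total = sum(count_tokens(m) for m in messages)
    if total <= budget or len(messages) <= keep_recent:
        return messages
    split = len(messages) - keep_recent
    older, recent = messages[:split], messages[split:]
    # The most recent messages are kept verbatim; everything older is condensed.
    return [summarize(older)] + recent


def toy_summarize(older: list[str]) -> str:
    """Placeholder summarizer; a real one would call a model."""
    return f"[summary of {len(older)} earlier messages]"


history = ["a b c", "d e f", "g h i", "j k l"]
print(compact(history, budget=6, keep_recent=2, summarize=toy_summarize))
```

With a budget of 6 "tokens" the first two messages collapse into a single summary line while the last two survive unchanged. The trade-off in any such scheme is that details dropped by the summary are no longer available to the model.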

The Competitive Context: Why Opus 4.5 Matters Now

Anthropic had been in an interesting position lately, where the latest version of its mid-tier Sonnet model often outperformed the older Opus 4.1 model, giving users very few reasons to use the more expensive model in their day-to-day work.

This created both a business problem and a positioning challenge. If your premium model doesn't meaningfully exceed your mid-tier offering, why would customers pay for the premium tier?

Opus 4.5 restores the natural hierarchy. As Alex Albert, Anthropic's head of developer relations, explained: “What's interesting about this release is that it is not necessarily like: ‘Oh, everybody needs to now shift to Opus,’ but it does enable this new tier of possibilities.”

The idea isn't that every user should abandon Sonnet 4.5—it's that Opus 4.5 unlocks capabilities previously unavailable at any price point. Tasks that were near-impossible for Sonnet 4.5 just a few weeks ago are now within reach.

The Broader Implications: What This Rapid Evolution Means

The progression from Opus 4.1 to Sonnet 4.5 to Opus 4.5 in just months reveals several important trends:

Acceleration is Accelerating

The time between meaningful capability improvements continues shrinking. What once took years now takes months or weeks. This pace shows no signs of slowing.

Price-Performance Ratios Keep Improving

Opus 4.5 delivers superior performance to Opus 4.1 at one-third the cost. This trend—better capabilities at lower prices—fundamentally changes who can build with frontier AI and what they can accomplish.

Differentiation Through Specialization

Unlike Google, Anthropic has never focused on image manipulation or video creation, but has stuck squarely to its strength in coding and productivity use cases. This focused strategy allows Anthropic to advance more rapidly in its chosen domains than competitors pursuing broader capabilities.

The Three-Tier Strategy Works

Having Haiku (fast/cheap), Sonnet (balanced), and Opus (powerful) models serves distinct use cases. Smart developers use different models for different tasks, optimizing both performance and cost.

Conclusion: Choosing Among the Claude Family

The comparison between Opus 4.5, Sonnet 4.5, and Opus 4.1 reveals a clear evolutionary pattern. Each model represents a meaningful step forward in capabilities, but the improvements aren't uniform across all tasks.

Key Takeaways:

- Opus 4.5 excels at complexity: When tasks involve ambiguity, multi-system reasoning, or long autonomous workflows, Opus 4.5 delivers meaningfully better results than Sonnet 4.5 or Opus 4.1.

- Sonnet 4.5 handles most everyday work: For well-defined development tasks, Sonnet provides excellent performance at lower cost. Many users won't need Opus 4.5 most of the time.

- Price-performance has dramatically improved: Opus 4.5's 67% price reduction while delivering superior performance represents a quantum leap in accessible frontier capabilities.

- Token efficiency compounds savings: Opus 4.5's ability to solve problems with fewer tokens means real-world costs are even lower than headline pricing suggests.

- The hierarchy is restored: Opus 4.5 successfully re-establishes the premium tier's value proposition, enabling tasks that defeat Sonnet 4.5 while remaining cost-competitive with alternatives.

The rapid evolution from Opus 4.1 to Sonnet 4.5 to Opus 4.5 demonstrates both the opportunities and challenges of AI development's breakneck pace. Capabilities that seemed cutting-edge months ago now feel routine. Models that once commanded premium prices face pressure from more capable, cheaper successors.

For developers and businesses, the message is clear: the three-tier Claude family provides genuine choice. Use Opus 4.5 for your hardest problems, Sonnet 4.5 for everyday development, and Haiku 4.5 for high-volume simple tasks. This strategic approach maximizes capabilities while managing costs.

As AI capabilities continue advancing, the question won't be whether to use AI in software development—it will be which specific model to choose for each specific task. The Claude family's clearly differentiated tiers make that choice more straightforward, even as the underlying capabilities grow more sophisticated with each passing month.

Claude Opus 4.5, Sonnet 4.5, and Haiku 4.5 are available now via the Claude API, on all major cloud platforms, and through Anthropic's consumer applications. Pricing starts at $1/$5 per million tokens for Haiku, $3/$15 for Sonnet, and $5/$25 for Opus.