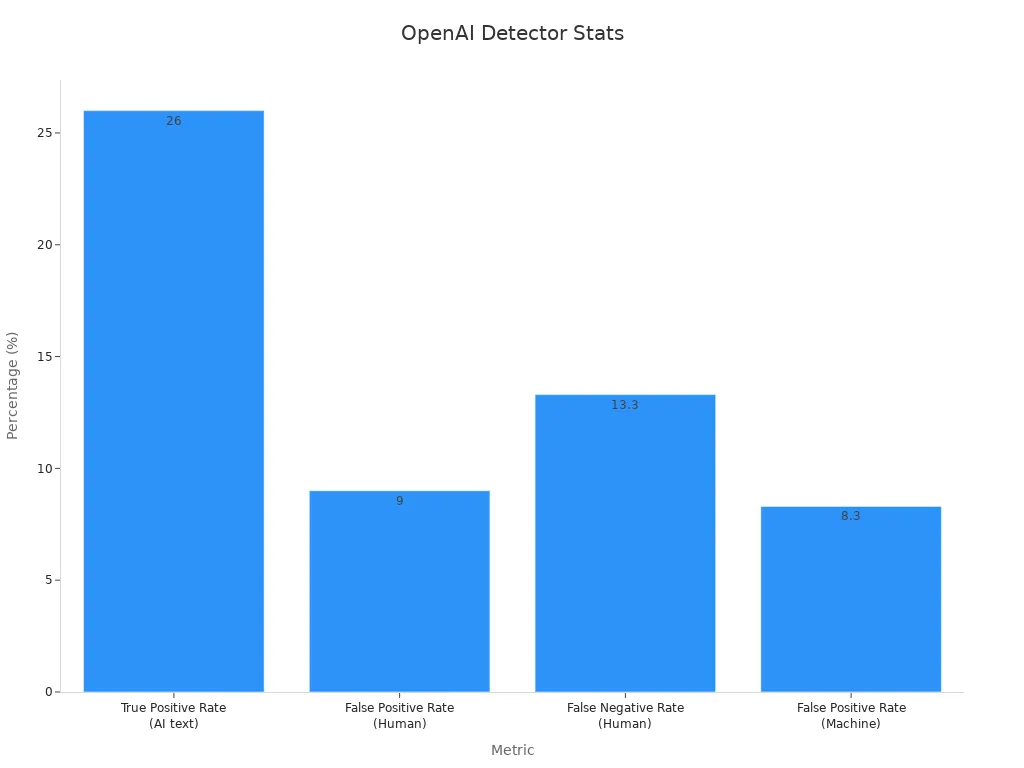

OpenAI stopped offering its AI detector because it was not accurate enough for real-world use. In OpenAI's own evaluation, the detector correctly flagged only 26% of AI-written text, and it mislabeled 9% of human writing as AI-written. OpenAI said the detector made too many wrong guesses. For school, work, and content checks, an AI detector must be very accurate. People need tools they can trust, and the OpenAI AI detector did not meet that bar.

Key Takeaways

- OpenAI’s AI detector is not very accurate. It sometimes says human writing is made by AI. It also misses a lot of real AI writing.
- Many people think the detector is not reliable. It is even worse for texts that are not in English. People also worry about privacy and not knowing how it works.
- If someone changes or edits AI writing, it is hard to catch. But new ways are making it easier to find these changes.
- Some tools like Originality.ai work better and are more accurate. They also check for plagiarism and have more features.
- AI detectors are helpful in schools and businesses. But people should also check the results to avoid mistakes and be fair.

OpenAI AI Detector Accuracy

Accuracy Results

OpenAI made several versions of its AI detector and text classifier. Each new version claims to be more accurate and better at finding AI-made writing. But in real-world use, these tools do not always work as well as claimed. In one evaluation, the OpenAI AI detector found only 26% of AI-made writing. With a detection rate that low, it missed most AI writing, and it also sometimes labeled human writing as AI-made. This made the tool hard to trust for important work.

Newer detector models do better in tests. The table below compares model versions by accuracy and error rates. The version names appear to match Originality.ai's Lite and Turbo models rather than OpenAI releases:

| Model Version | Date | Accuracy | False Positive Rate | False Negative Rate | Notes |
|---|---|---|---|---|---|
| 3.0 Turbo | Feb 2024 | | 2.8% | N/A | Trained on latest LLMs; improved accuracy and slightly reduced false positives |
| 1.0.0 Lite | July 2024 | 98% | <1% | ~2% | Suitable for lightly AI-edited content; low false positives; good for academic use |
| 3.0.1 Turbo | Oct 2024 | 99%+ | <3% | N/A | Best for zero tolerance AI content policies; robust against bypassing attempts |

Even with high test scores, the OpenAI AI detector still has problems in real-world use. The OpenAI text classifier sometimes labels human writing as AI-made by mistake. This makes people hesitant to trust AI detection tools, especially at school and work.

OpenAI also tested its text classifier on TOEFL essays and newer models. It reportedly got 99.66% right and did not label any human essays as AI-made. For GPT-4 models, it got 99.18% right. These results show that AI detectors can score very well, but real-world use does not always match test conditions.

User Reviews

People who use the OpenAI AI detector and OpenAI text classifier have mixed feelings. Many say the tools are inaccurate, especially for languages other than English. The table below summarizes what users say:

| Aspect | User Feedback Summary |
|---|---|
| Success Rate | Only 26% success rate reported, indicating low reliability for important tasks. |
| Plagiarism Detection | Feature absent, users find this a significant limitation. |
| Language Support | Not reliable for non-English texts; results inconsistent and unpredictable. |
| Pricing Transparency | Lack of public pricing causes budgeting difficulties and uncertainty about affordability and scalability. |
| Customer Service | Poor support with ignored emails and difficult refund/cancellation processes. |
| Subscription Model | Monthly credit expiration frustrates users; prepaid plans do not offer bonus credits. |
| Positive Aspects | Intensive training on diverse sources, free access, and straightforward, user-friendly setup. |

Many users worry about privacy when using OpenAI's AI detectors. They are afraid that other people might see their private data if it is used for training. Some wonder whether OpenAI shares data with other companies or uses it for ads. These worries make it hard for people to trust the OpenAI AI detection tool.

Detecting Content Generated by AI

Finding out whether something was made by AI is still hard for OpenAI's detectors and other tools. Even the best models can miss new types of AI-made writing. For example, tools like GPTSniffer try to detect AI-written code but do not always work well: on Java code it gets only 32.26% right, and on Python code 44.85%. These numbers show that AI detectors struggle to work across all kinds of writing.

- AI detection tools do not work well on new data they did not see before.
- The type of AI model or settings does not always change the results.
- Sometimes, AI detectors say human-written school papers are AI-made, especially if the writing is simple or uses AI rewording tools.

Studies show that some AI detection tools, like GPTZero, can do worse than guessing when checking medical writing. Errors like these can hurt writers who do not speak English as their first language, and they make AI detection less useful. Using both AI detection and human review can help prevent mistakes, but detectors still need training for each subject area.

OpenAI keeps working to make its AI detector and text classifier better. But how well these tools work depends on more testing and updates. People should be careful with claims about AI detection accuracy, especially for big decisions.

AI Content Detector Features

Language and Content Types

AI content detectors now work with many languages and document types. They use smart features to find AI-made writing in essays, articles, and web pages. Some tools, like TraceGPT, do well in different languages.

- Spanish: 96% accuracy
- Chinese: 92% accuracy
- Arabic: 93% accuracy
- Japanese: 95% accuracy

AI content detection tools use hybrid models. These models mix language-specific and general methods, which helps them check documents that contain more than one language. The system also looks at language details, idioms, and tone. For example, it can notice an overly formal tone or strange wording in Spanish AI writing. These features help an AI content detector work better for people around the world.

Handling Paraphrased Text

Paraphrased text is a big problem for AI content detectors. Many AI detection tools do not work well when writers use strong paraphrasing models. The table below shows how different detectors do on original and paraphrased AI writing:

| Detector / Method | Detection Accuracy on Original AI Text | Detection Accuracy on Paraphrased Text | False Positive Rate on Human Text |
|---|---|---|---|
| DetectGPT | | 4.6% | 1% |
| OpenAI's Text Classifier | Significantly reduced accuracy | Significantly reduced accuracy | 1% |
| Retrieval-based Defense Method | N/A | 80% to 97% | 1% |

Paraphrasing makes it harder for an AI content detector to work. But retrieval-based methods bring back much of the lost accuracy. These methods help AI detectors keep up with new ways of writing.
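As a rough illustration of the retrieval idea, a provider could keep a store of text its model has generated and check whether a candidate passage is close to anything in that store, so that a reworked passage can still match its source. The sketch below is a toy under stated assumptions, not the method behind the figures in the table: the class `GenerationStore`, the bag-of-words cosine similarity, and the 0.6 threshold are all hypothetical choices made for illustration.

```python
import math
import re
from collections import Counter


def _bag_of_words(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 to 1.0)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class GenerationStore:
    """Hypothetical store of texts a model has generated."""

    def __init__(self, threshold: float = 0.6):  # threshold is an assumed value
        self.threshold = threshold
        self._vectors = []

    def add(self, generated_text: str) -> None:
        """Record one generated text in the store."""
        self._vectors.append(_bag_of_words(generated_text))

    def best_match(self, candidate: str) -> float:
        """Highest similarity between the candidate and any stored text."""
        vector = _bag_of_words(candidate)
        return max((cosine_similarity(vector, v) for v in self._vectors), default=0.0)

    def looks_generated(self, candidate: str) -> bool:
        """True when the candidate is close enough to a stored generation."""
        return self.best_match(candidate) >= self.threshold


store = GenerationStore()
store.add("The detector compares each new essay against text the model wrote before.")

reordered = "Against text the model wrote before, the detector compares each new essay."
unrelated = "My grandmother's soup recipe uses carrots, leeks, and plenty of thyme."

print(store.looks_generated(reordered))  # same words in a different order
print(store.looks_generated(unrelated))  # shares no words with the stored text
```

Word counts only catch rewrites that reuse the original vocabulary. A paraphrase that swaps in synonyms would need a meaning-based comparison, such as sentence embeddings, in place of `_bag_of_words`.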

False Positives and Negatives

AI content detection tools often make mistakes called false positives and false negatives. These mistakes make it hard to trust the detector. The numbers below show how often these errors happen:

| Metric | Value | Description |
|---|---|---|
| True Positive Rate (AI text) | | Percentage of AI-written text correctly identified as AI-generated |
| False Positive Rate (Human) | 9% | Percentage of human writing incorrectly labeled as AI-generated |
| False Negative Rate (Human) | 13.3% | Percentage of human-written essays mistakenly identified as AI-generated |
| False Positive Rate (Machine) | 8.3% | Percentage of machine-generated essays mistakenly identified as human-written |

These numbers show that AI detectors can get both AI and human writing wrong. People should be careful when reading AI content detection results. No AI detection tool is perfect, so humans still need to check important work.
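To see why these error rates matter in practice, they can be combined with an assumed share of AI-written text to estimate how often a flag is actually correct. The sketch below is illustrative only. The function name `flag_precision` is hypothetical, and pairing the 26% true positive rate and 9% false positive rate with a 10% AI share (the UK figure quoted later in this article) is an assumption, since those numbers come from different sources.

```python
def flag_precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Estimate the share of flagged texts that are really AI-written.

    tpr      -- true positive rate: fraction of AI text the detector flags
    fpr      -- false positive rate: fraction of human text the detector flags
    ai_share -- assumed fraction of all submitted texts that are AI-written
    """
    for name, value in (("tpr", tpr), ("fpr", fpr), ("ai_share", ai_share)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    flagged_ai = tpr * ai_share              # AI texts that get flagged
    flagged_human = fpr * (1.0 - ai_share)   # human texts flagged by mistake
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("the detector never flags anything at these rates")
    return flagged_ai / total_flagged


# Rates quoted in this article, combined with an assumed 10% AI share.
precision = flag_precision(tpr=0.26, fpr=0.09, ai_share=0.10)
print(f"Share of flags that are correct: {precision:.1%}")
```

Under these assumptions roughly one flag in four points at real AI writing, and the rest land on human authors. This is why a false positive rate that looks small can still cause many wrongful flags when most submissions are human-written.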

OpenAI AI Detector Limitations

Common Issues

The OpenAI AI detector has many problems that make it less useful.

- OpenAI stopped offering the detector in July 2023 because it did not work well and sometimes caused harm.
- The detector often said human writing was AI-made, which led to mistakes.
- OpenAI said the tool was not very good and told people to be careful with its results.
- There are no easy ways to tell AI writing from human writing, so it is hard to detect.
- The OpenAI AI detector uses black-box algorithms, so people cannot see how it decides.
- Studies show the detector makes lots of errors and can be unfair, especially for people who do not speak English as their first language or have disabilities.
- New AI models and tricks make it even harder to spot AI writing.

Even the people who make smart AI models find it hard to build good detectors. These problems show that AI detector accuracy is still a big worry.

Transparency

Transparency is a big problem for the OpenAI AI detector. OpenAI did not share much about how the detector works. Users could not tell how it reached its decisions or why it gave a particular answer. Without clear information, people found it hard to trust the tool.

If there is no transparency, people and groups might not understand the results.

The CLeAR Documentation Framework says good transparency needs clear and full information. OpenAI shut the detector down, which suggests the company knew about these problems.

Scalability

Scalability is another problem for the OpenAI AI detector. As more people use AI, it gets harder to check all the writing. The OpenAI text classifier and other tools have trouble with new kinds of AI writing. Turnitin’s AI detector, for example, made more mistakes than expected when checking millions of essays.

- Mistakes like false positives can cause big problems in schools and jobs.
- Experts say some detectors are not better than guessing in some cases.
- Because of how AI writing works, mistakes like false positives cannot be stopped completely.

OpenAI’s AI detector cannot always work well for big groups, so it is not a good fit for large companies.

OpenAI AI Detector Alternatives

Comparison with Other AI Content Detectors

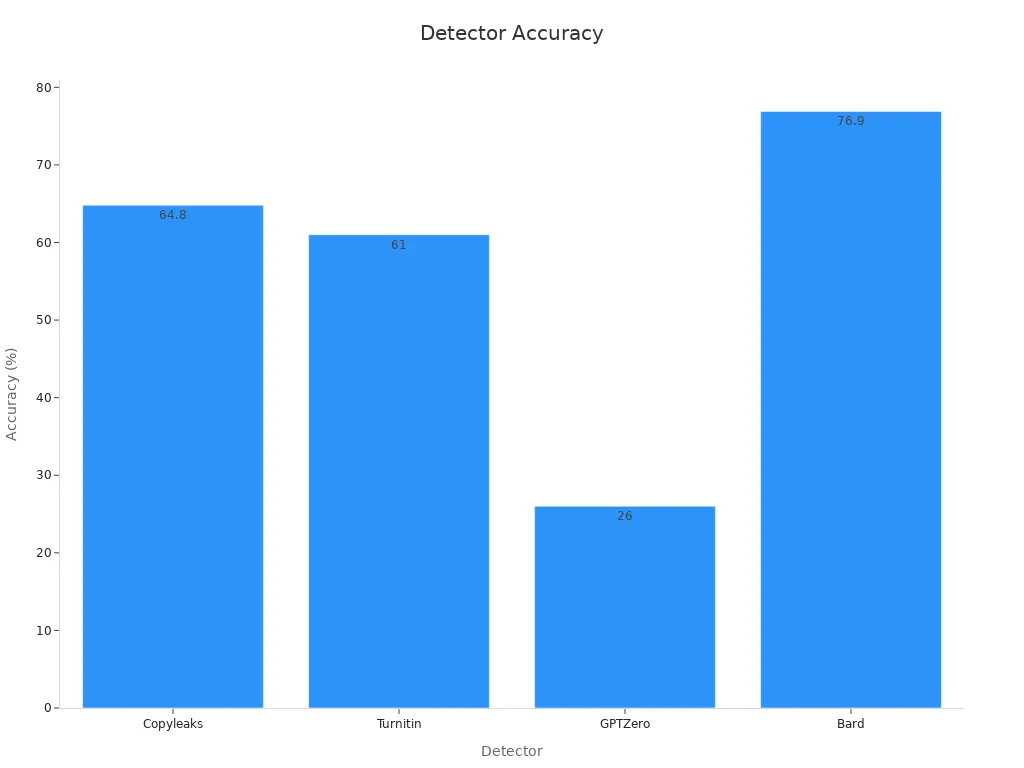

Many people want other options because the OpenAI AI detector is not accurate enough for 2025. When people compare detectors, Originality.ai does much better. It correctly identifies AI-made text 79% of the time, while the OpenAI AI detector gets it right only 26% of the time. Originality.ai can also check for plagiarism, which OpenAI's tool cannot. The table below shows how these detectors differ:

| Detector | AI Detection Accuracy | False Positive Rate | Additional Features |
|---|---|---|---|
| OpenAI Text Classifier | 26% | 9% | No plagiarism detection, five-level likelihood scale |
| Originality.ai | 79% | Not specified | Plagiarism detection, percentage-based AI content scores |

Other AI content detectors, like Copyleaks and Turnitin, also do better than OpenAI's tool. Copyleaks has a 64.8% accuracy score, and Turnitin gets 61%. But some detectors do not work as well if writers try to hide AI writing.

Strengths and Weaknesses

Alternatives to the OpenAI AI detector have some good points. Many, like Originality.ai, are more accurate and work better with edited text. They often have extra tools, like plagiarism checks. But all detectors have problems. Some, like Turnitin, do not work as well if people change the words to hide AI use. Every AI content detector can still make mistakes.

No detector is perfect. People should always check results again, especially for important things.
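One way to put this advice into practice is to treat detector scores as a signal for triage rather than a verdict. The sketch below is a hypothetical policy, not any vendor's workflow: the function `triage`, the 0-to-1 score scale, and both thresholds are assumptions for illustration. It clears a text automatically only when every detector gives a low score, and it sends everything else to a person.

```python
from typing import Sequence


def triage(scores: Sequence[float], low: float = 0.2, high: float = 0.8) -> str:
    """Turn several detector scores (0 = human-like, 1 = AI-like) into a next step.

    Both thresholds are assumed values and would need tuning on real data.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    if any(not 0.0 <= s <= 1.0 for s in scores):
        raise ValueError("scores must be between 0 and 1")
    if all(s <= low for s in scores):
        return "no action"
    if all(s >= high for s in scores):
        return "priority human review"
    # Detectors disagree or are unsure: this is still a human decision.
    return "human review"


print(triage([0.05, 0.10]))        # every detector says the text looks human
print(triage([0.95, 0.90, 0.85]))  # every detector says the text looks AI-made
print(triage([0.10, 0.90]))        # the detectors disagree
```

Note that no branch returns a penalty. Even unanimous high scores only raise the priority of the human check.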

Best Use Cases

Alternatives to the OpenAI AI detector are best when accuracy is very important. Schools and colleges use tools like Originality.ai and Copyleaks to check student work. Businesses use them to review reports and ads. Writers use them to make sure their work is original. OpenAI’s tool can serve as a first pass, but most people pick other tools for final checks.

Practical Use and Concerns

Academic Integrity

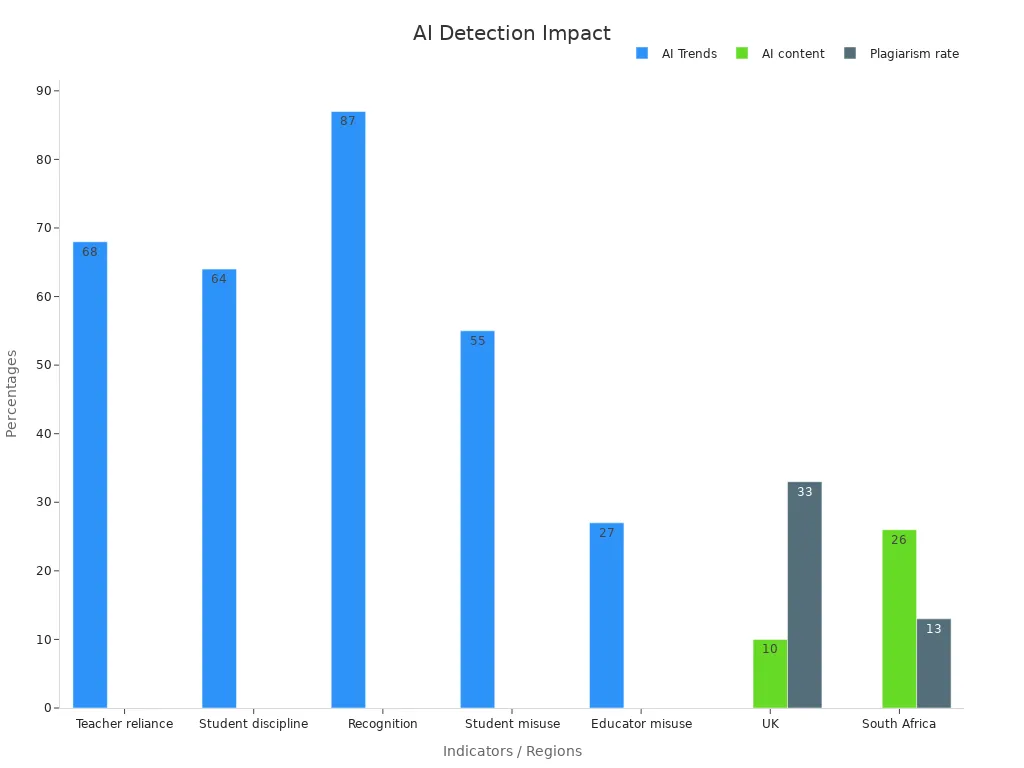

Schools and colleges use AI detection tools to help keep students honest. More teachers use these tools now: usage rose by 30 percentage points, to 68%. More students are also being disciplined for using AI to cheat, with the rate rising from 48% to 64%. Most students and teachers, about 87%, think AI detection is important for fairness. But 55% of students and 27% of teachers say they have misused AI for schoolwork. In the UK, 10% of school work is AI-made and 33% is copied. In South Africa, 26% is AI-made and 13% is copied. These figures show that schools need good AI tools to check for cheating and copied work.

| Indicator | Statistic / Trend | Source |
|---|---|---|
| Teacher reliance on AI detection tools | 68% usage with a 30 percentage point increase | K-12 Dive |
| Student discipline rates for AI-related plagiarism | Increased from 48% to 64% | GovTech |
| Recognition of AI detection importance | 87% of students and educators agree on importance | Forbes |
| Student admission of AI misuse | 55% of students admit misuse | G2 |
| Educator admission of AI misuse | 27% of educators admit misuse | G2 |
| Regional AI-generated content and plagiarism rates | UK: 10% AI content, 33% plagiarism; South Africa: 26% AI content, 13% plagiarism | Multiple |

Business Applications

Many businesses use AI to make content, save time, and support decisions. Tech companies build new AI tools and services. Banks use AI to spot risks and stop fraud. Hospitals use AI to help patients and do research. Stores use AI to target ads and manage supplies. Factories use AI to fix machines before they break. Schools and government groups use AI to build new things and help people.

- Optimization: AI finds problems and makes work better.
- Productivity enhancement: AI does boring jobs faster.
- Training and coaching: AI helps people learn and gives tips right away.
- Decision support: AI looks at data and helps workers choose what to do.

These uses show that AI works well for business. But companies still need good tools to check for copied work and AI-made content.

Trust and Reliability

People still worry about trusting AI detection tools. Tests show that false positive rates can be as low as 0.8% or as high as 7.6%, and true positive rates range from 19.8% up to 98.4%. Even the best tool, Originality.ai Lite, has a 1% false positive rate and a 2% false negative rate. Hard tests show that AI detectors have trouble with tricky writing, which makes them less useful. The Federal Trade Commission says not to believe claims of perfect accuracy. People should know that no AI detector is always right or works in every case.

OpenAI’s AI detector is not accurate enough for 2025. It does not work well when the results really matter. The tool gives different results on different tests, and F1-scores and KL divergence values change a lot from one dataset to the next. Many detectors, like OpenAI’s, do not pass quality checks and have trouble working the same way every time. OpenAI did not release its watermarking tool because of concerns that it might be unfair or cause problems. People should use better AI content detectors and should not trust just one detector for big choices.

- F1-scores go from 0.562 to 0.96, so accuracy changes a lot.
- Bad datasets make it hard to believe the results.
- Every dataset tested fails at least one quality check.
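For readers unfamiliar with the metric, the F1-score is the harmonic mean of precision and recall, so it drops sharply when a detector misses most AI text even if few of its flags are wrong. The sketch below shows the calculation from raw counts. The function name `f1_score` and the example counts are illustrative assumptions, chosen to mirror a detector that catches 26% of AI text and wrongly flags 9% of human text on 1,000 documents of each kind.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """F1 = 2 * precision * recall / (precision + recall).

    Computed in the equivalent count form 2*TP / (2*TP + FP + FN),
    which needs only a single zero check.
    """
    denominator = 2 * true_pos + false_pos + false_neg
    if denominator == 0:
        return 0.0
    return 2 * true_pos / denominator


# Assumed sample: 1,000 AI texts and 1,000 human texts.
true_pos = 260    # AI texts correctly flagged (26% of 1,000)
false_neg = 740   # AI texts the detector missed
false_pos = 90    # human texts wrongly flagged (9% of 1,000)

print(f"F1 = {f1_score(true_pos, false_pos, false_neg):.3f}")
```

With these assumed counts the score comes out near 0.385, below even the low end of the range quoted above. Because the result depends on the mix of texts in the sample, the same detector can post very different F1-scores on different datasets.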

FAQ

How accurate was OpenAI’s AI detector?

OpenAI’s AI detector got AI text right only 26% of the time. It often called human writing AI-made by mistake. Many people thought the tool was not good for important jobs.

Why did OpenAI discontinue its AI detector?

OpenAI stopped using the AI detector because it made too many mistakes. The company said the tool was not reliable enough for real life.

Can AI detectors identify paraphrased or edited AI content?

Most AI detectors have trouble with paraphrased or changed AI writing. Strong paraphrasing tools can trick the detectors. People should not use just one detector to make big choices.

Are there better alternatives to OpenAI’s AI detector?

Yes. Tools like Originality.ai and Copyleaks are more accurate and have extra features like plagiarism checks. Many schools and companies like these tools better for important checks.

Is it safe to trust AI detectors for academic or business use?

AI detectors can help, but they are not perfect. Always use both AI detection and human review for important work. Only using AI tools can cause mistakes.