Artificial intelligence continues to reshape industries and societies, but its rapid evolution demands careful oversight. In 2025, AI safety has become indispensable as organizations rely more and more on intelligent systems. Nearly 72% of businesses have integrated AI into their operations, and the global AI market is growing at an annual rate of 37%. These advances create opportunities but also expose vulnerabilities. With 93% of companies anticipating daily AI-driven attacks, you must prioritize safety measures to navigate this complex landscape responsibly. Addressing these challenges supports ethical use and fosters trust in emerging technologies.

Key Takeaways

- AI safety helps ensure that AI works reliably and fairly. It reduces harm and increases the benefits AI can deliver.
- In 2025, about 72% of companies use AI. Safety measures are needed to prevent errors and cyberattacks.
- People need to trust AI before they will accept it. Safe AI can help in areas like healthcare and education.
- Developers, ethicists, and the public must work together. This teamwork helps align AI with human values and solve safety issues.
- Governments and tech firms should create regulations and ethical frameworks. These make AI transparent and accountable.

What Is AI Safety and Why It Matters

Defining AI Safety

AI safety refers to the practices and principles that ensure artificial intelligence systems operate reliably, ethically, and in alignment with human values. It focuses on minimizing risks while maximizing the benefits of AI technologies. Core components of AI safety include training and evaluation, empirical research, and iterative development. These elements ensure that AI systems are not only functional but also trustworthy.

| Core Components of AI Safety | Description |
|---|---|
| AI Training and Evaluation | Primary source of ground truth for AI systems. |
| Empirical Research | Focuses on real-world data to inform safety measures. |
| Iterative Development | Emphasizes the need for adaptable research plans. |
| Frontier Models | Necessary for understanding safety in advanced AI systems. |
| Safety Methods | Techniques like Constitutional AI require large models for effectiveness. |

Additionally, AI safety involves tools like world models, which describe how an AI system affects the world, and verifiers, which check whether a system meets its safety specifications. These frameworks provide a foundation for building AI systems that can be trusted to act responsibly in diverse scenarios.
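To make the idea of a verifier more concrete, the following Python sketch treats a safety specification as a list of named checks that every model output must pass. The `Rule` class, the `verify` function, and the example rules are hypothetical illustrations, not part of any particular framework; verifiers in safety research can aim for much stronger guarantees than simple runtime checks like these.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """One named requirement from a (hypothetical) safety specification."""
    name: str
    check: Callable[[str], bool]  # returns True when the output satisfies the rule


def verify(output: str, spec: list[Rule]) -> list[str]:
    """Return the names of every rule the output violates; an empty list means it passes."""
    return [rule.name for rule in spec if not rule.check(output)]


# Illustrative rules only; a real specification would be domain-specific.
SPEC = [
    Rule("non_empty", lambda text: bool(text.strip())),
    Rule("max_500_chars", lambda text: len(text) <= 500),
    Rule("no_ssn_like_number",
         lambda text: re.search(r"\b\d{3}-\d{2}-\d{4}\b", text) is None),
]

print(verify("Your appointment is confirmed.", SPEC))  # []
print(verify("SSN on file: 123-45-6789", SPEC))        # ['no_ssn_like_number']
```

Returning the list of violated rules, rather than a single pass/fail flag, makes it easier to log why an output was blocked.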

The Importance of AI Safety in 2025

In 2025, the role of AI safety has become more critical than ever. With AI systems integrated into 72% of businesses and the global AI market expanding rapidly, the stakes are higher. You must consider the risks associated with AI errors, biases, and unintended consequences. Recent surveys reveal that 77% of business leaders recognize AI-related risks, while 33% express concerns about errors or “hallucinations.”

The AI Security Report highlights emerging threats like prompt injection and data poisoning, which can compromise AI systems. Addressing these risks requires a proactive approach to safety throughout the AI development lifecycle. By prioritizing safety, you can mitigate legal liabilities, protect users, and ensure AI systems remain reliable and ethical.

How AI Safety Benefits Society

AI safety offers tangible benefits to society by reducing risks and fostering trust in technology. In healthcare, for example, studies show that trust in AI applications directly influences user acceptance and perceived risks. A survey conducted in the United States found that trust in AI clinical tools impacts their adoption, while similar studies in China and India highlight the role of trust in medical diagnosis and service delivery.

| Study | Location | Methodology | Focus | Findings |
|---|---|---|---|---|
| Esmaeilzadeh et al., 2021 | United States | Quantitative: survey study | Healthcare | Trust in AI clinical applications influences user acceptance and perceived risks. |
| Fan et al., 2018 | China | Quantitative: survey study | Hospital | Beliefs about AI's capabilities affect its adoption in medical diagnosis. |
| Liu & Tao, 2022 | China | Quantitative: survey study | Healthcare service delivery | Trust impacts public acceptance of smart healthcare services. |
| Prakash & Das, 2021 | India | Mixed methods | Radiology | Trust and technology acceptance influence willingness to use AI tools. |
| Yakar et al., 2021 | Netherlands | Quantitative: survey study | Radiology, dermatology, robotic surgery | General population's views on AI in medicine highlight trust as a key factor. |

By ensuring AI systems are safe, you contribute to their broader acceptance and integration into critical sectors like healthcare, education, and transportation. This not only enhances societal well-being but also builds a foundation for sustainable technological progress.

Risks Associated with AI

Unintended Consequences of AI

AI systems, while powerful, can produce outcomes that deviate from their intended purpose. These unintended consequences often arise from flawed algorithms, biased training data, or unforeseen interactions with real-world environments. For instance, Zillow's home-buying algorithm, designed to predict housing prices, led to a $304 million inventory write-down. The failure forced significant workforce reductions and highlighted the risks of over-reliance on AI for critical business decisions. Similarly, a widely used healthcare algorithm, which relied on past healthcare spending as a proxy for medical need, failed to identify many Black patients for high-risk care management, worsening disparities in healthcare access. Another notable example is Microsoft's Tay chatbot, which began posting offensive tweets within hours of its launch after users deliberately fed it offensive content.

| Case Study | Description |
|---|---|
| Zillow Home-Buying Algorithm | Zillow's algorithm led to a $304 million inventory write-down due to inaccurate home price predictions, resulting in significant workforce cuts. |
| Healthcare Algorithm Bias | A healthcare algorithm failed to flag Black patients for high-risk care management, leading to disparities in healthcare access. |
| Microsoft Tay Chatbot | Microsoft's AI chatbot began posting racist and offensive tweets within hours of its launch after users deliberately fed it offensive content. |

These examples underscore the importance of rigorous testing and validation during AI development. You must anticipate potential failures and implement safeguards to minimize harm. Without these measures, unintended consequences can erode trust in AI systems and lead to significant financial, ethical, and societal repercussions.

Ethical Concerns and Biases in AI

AI systems often reflect the biases present in their training data, perpetuating and even amplifying existing inequalities. This phenomenon, often summarized as “bias in, bias out,” poses significant ethical challenges. For example, Njoto (2020) shows how AI algorithms can favor well-represented groups, reducing their effectiveness for underrepresented populations. Similarly, Mayson (2018) explains how AI systems can project historical inequalities forward and amplify them, while Raso et al. (2018) indicate that biased training data can perpetuate human prejudices.

| Study | Findings |
|---|---|
| Njoto (2020) | Highlights how AI algorithms can favor well-represented groups, leading to reduced effectiveness for underrepresented groups. |
| 36KE (2020) | Discusses the “bias in, bias out” phenomenon, where existing social biases are reflected in AI datasets. |
| Mayson (2018) | Explains how historical inequalities can be projected and amplified by AI systems. |
| Raso et al. (2018) | Indicates that AI systems can perpetuate human biases due to their training on biased data. |
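A simple first step toward catching biased training data before it shapes a model's outputs is to measure how each group is represented. This sketch assumes each record carries a group label under a hypothetical `group` key and flags any group below an illustrative 10% share; the appropriate threshold, and which groups matter, depend on the application.

```python
from collections import Counter


def representation_report(records, key="group", min_share=0.10):
    """Return each group's share of the dataset and a sorted list of groups below min_share."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    shares = {group: count / total for group, count in counts.items()}
    underrepresented = sorted(g for g, share in shares.items() if share < min_share)
    return shares, underrepresented


# Toy dataset (hypothetical): 90 records from group "A", 8 from "B", 2 from "C".
data = [{"group": "A"}] * 90 + [{"group": "B"}] * 8 + [{"group": "C"}] * 2
shares, flagged = representation_report(data)
print(shares)   # {'A': 0.9, 'B': 0.08, 'C': 0.02}
print(flagged)  # ['B', 'C']
```

A low share does not prove the resulting model will be biased, but it marks where per-group evaluation of the model's outputs is most needed.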

Ethical concerns extend beyond bias. They include issues like transparency, accountability, and the potential misuse of AI for malicious purposes, such as adversarial AI attacks. These attacks exploit vulnerabilities in AI systems, leading to outcomes that can harm individuals or organizations. Addressing these ethical challenges requires you to adopt transparent practices, ensure diverse representation in training datasets, and implement robust safeguards against adversarial AI.

Large-Scale Risks of Misaligned AI

Misaligned AI systems—those that fail to align with human values and intentions—pose significant risks to society. These risks range from loss of control over critical systems to existential threats, such as the potential for human extinction. Current frameworks for assessing these risks often fall short, as they fail to account for the complexities of AI systems operating within broader technological ecosystems. For example:

- Misaligned AI could disrupt societal structures by making decisions that conflict with human priorities.
- The alignment problem highlights the need for AI systems to reflect human values and the intentions of their designers in order to avoid harmful outcomes.
- Existing frameworks do not adequately address scenarios involving multiple interacting AI models, which could lead to collective catastrophic risks.

Defining and measuring catastrophic risks in AI remains a challenge. Operationalizing these concepts into measurable indicators is essential for effective risk governance. However, ensuring consistent measurement across different contexts adds another layer of complexity. You must advocate for the development of comprehensive safety frameworks that address these challenges. By doing so, you can mitigate the risks posed by misaligned AI and ensure its safe integration into society.
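One common, simplified way to turn risks into measurable indicators is a likelihood-by-severity risk matrix. The sketch below assumes 1-to-5 ordinal scales and an escalation threshold of 15; the scales, the threshold, and the example risks are all hypothetical, and catastrophic or systemic risks are often poorly captured by a single score like this.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    severity: int    # hypothetical scale: 1 (negligible) to 5 (catastrophic)

    def __post_init__(self):
        for value in (self.likelihood, self.severity):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and severity must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def triage(risks: list[Risk], escalate_at: int = 15) -> list[tuple[str, int, bool]]:
    """Rank risks by score (highest first) and flag those at or above the threshold."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, r.score >= escalate_at) for r in ranked]


register = [
    Risk("biased loan decisions", likelihood=3, severity=4),
    Risk("prompt injection leaks user data", likelihood=4, severity=4),
    Risk("typo in chatbot reply", likelihood=5, severity=1),
]
for row in triage(register):
    print(row)
# ('prompt injection leaks user data', 16, True)
# ('biased loan decisions', 12, False)
# ('typo in chatbot reply', 5, False)
```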

AI Safety vs. AI Security

Key Differences Between Safety and Security

AI safety and AI security address distinct yet interconnected aspects of artificial intelligence systems. AI safety focuses on preventing unintended harm and ensuring alignment with human values. It emphasizes ethical considerations like fairness, accountability, and transparency. On the other hand, AI security protects AI systems from malicious attacks, ensuring data integrity and confidentiality.

| Aspect | AI Safety | AI Security |
|---|---|---|
| Scope and Objectives | Prevents unintended harm and aligns with human values. | Protects systems from cyber threats and ensures data integrity. |
| Risk Mitigation | Addresses risks from AI complexity and decision-making capabilities. | Mitigates risks from adversarial attacks and vulnerabilities. |
| Ethical Considerations | Focuses on fairness and accountability. | Involves privacy and responsible use. |
| Techniques and Methodologies | Uses explainable AI and algorithmic auditing. | Utilizes encryption and adversarial training. |
| Stakeholder Involvement | Requires collaboration with ethicists and communities. | Involves cybersecurity experts and compliance standards. |

Understanding these differences helps you design systems that are both safe and secure, ensuring they operate reliably and ethically while resisting external threats.

Why Both Are Essential for AI Development

AI systems require both safety and security to function effectively in real-world applications. Safety ensures systems align with human values and avoid unintended harm, while security defends against malicious actors who exploit vulnerabilities. For example, the AI Security Report highlights risks like hackers altering AI technologies, which can compromise safety for users. Without robust security measures, even the safest AI systems can fail under attack.

A layered approach to testing combines controlled conditions with real-world simulations to address both safety and security concerns. This ensures AI systems remain reliable, ethical, and resilient. In healthcare, stringent testing safeguards patients and professionals from potential harm caused by compromised AI applications. By integrating safety and security, you create systems that are trustworthy and capable of handling complex challenges.

Examples of Safety and Security Challenges

Balancing AI safety and security presents unique challenges across industries. Real-world examples illustrate the complexity of this task:

- Facial recognition technology in surveillance raises privacy concerns and risks abuse without strict regulations.
- Automated emergency dispatch systems improve response times but require safeguards to protect sensitive personal data.
- Predictive policing systems face ethical challenges, including systemic bias and privacy violations.
- Public safety systems struggle with data accuracy and infrastructure reliability, emphasizing the need for stringent protections.

These examples highlight the importance of addressing both safety and security in AI development. You must implement comprehensive frameworks to mitigate risks and ensure ethical use of AI technologies.

Measures to Ensure AI Safety

Designing Transparent and Accountable AI Systems

Transparent and accountable AI systems build trust and ensure ethical use. You can achieve transparency by adopting frameworks like the CLeAR Documentation Framework, which embeds principles for clarity in AI design. Regulatory measures such as the EU AI Act and GDPR mandate transparency in data collection and processing, ensuring compliance with legal standards.

| Framework/Regulation | Description |
|---|---|
| CLeAR Documentation Framework | Principles for building transparency into AI systems. |
| EU AI Act | Mandates transparency disclosures based on use case. |
| General Data Protection Regulation | Requires transparency in data collection and processing. |
| Blueprint for an AI Bill of Rights | Outlines principles for AI use, including transparency. |
| OECD AI Principles & Hiroshima AI Process | Global efforts for responsible AI development and transparency reporting. |

Accountability complements transparency by enabling monitoring and audit trails. Explainable outputs enhance trust, while assurance mechanisms such as robust verification systems help confirm that AI behaves according to its intended objectives. Together, these measures help prevent unintended consequences and reward hacking, in which a system exploits loopholes in its objective instead of achieving the intended goal, safeguarding both users and organizations.
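As one illustration of an audit trail, the sketch below keeps an append-only log in which each entry stores the SHA-256 hash of the previous entry, so altering an earlier record breaks the chain. The `AuditLog` class and the model and field names are hypothetical. A chain like this detects tampering but does not stop someone with full write access from rebuilding it, so real deployments typically also keep hashes or copies of the log somewhere the system operator cannot modify.

```python
import hashlib
import json
import time

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log; each entry records the hash of the entry before it."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # sort_keys gives a stable serialization, so the same body always hashes the same way
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, model_id: str, inputs: dict, output: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": time.time(),
            "model_id": model_id,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify_chain(self) -> bool:
        """Recompute every hash; return False if any entry was altered or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("credit-model-v1", {"applicant_id": 17}, "approved")
log.record("credit-model-v1", {"applicant_id": 18}, "declined")
print(log.verify_chain())  # True
log.entries[0]["output"] = "declined"  # simulate tampering with an earlier record
print(log.verify_chain())  # False
```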

Implementing Rigorous Testing and Validation

Rigorous testing and validation ensure AI systems perform reliably under diverse conditions. Best practices include scenario-based testing, edge-case analysis, and stress testing. Scenario-based testing evaluates AI agents in realistic operational environments, while edge-case analysis examines their robustness in extreme situations. Stress testing assesses performance under high operational loads, ensuring stability and reliability.

| Testing Methodology | Description | Key Metrics |
|---|---|---|
| Scenario-based Testing | Evaluates AI agents against realistic operational scenarios. | Scenario success rates, edge-case handling, robustness scores, completion times. |
| Edge-Case Analysis | Tests agent robustness against extreme or atypical situations. | Agent failure rates, recovery success percentages, response consistency. |
| Stress Testing | Assesses performance under extreme operational loads. | Resource utilization, performance degradation rates, time-to-failure under stress. |
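The scenario-based and edge-case rows of the table can be sketched as a small test harness that runs a system against labeled cases and reports a pass rate per category. Everything here is hypothetical and simplified: `Scenario`, `run_suite`, and the toy `average_reading` function stand in for a real AI system and its test suite, and stress testing is not shown because it requires load generation.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Scenario:
    name: str
    category: str                         # e.g. "scenario" or "edge_case"
    inputs: Any
    is_acceptable: Callable[[Any], bool]  # judges the system's output for this case


def run_suite(system: Callable[[Any], Any], scenarios: list[Scenario]) -> dict[str, float]:
    """Run every case and return the pass rate per category.
    An exception raised by the system is recorded as a failure, not a crash."""
    results: dict[str, list[bool]] = {}
    for case in scenarios:
        try:
            passed = bool(case.is_acceptable(system(case.inputs)))
        except Exception:
            passed = False
        results.setdefault(case.category, []).append(passed)
    return {category: sum(outcomes) / len(outcomes) for category, outcomes in results.items()}


def average_reading(readings):
    """Hypothetical system under test: averages sensor readings."""
    return sum(readings) / len(readings)


suite = [
    Scenario("typical readings", "scenario", [10, 12, 11], lambda out: 10 <= out <= 12),
    Scenario("single reading", "scenario", [5], lambda out: out == 5),
    Scenario("empty input", "edge_case", [], lambda out: out is None),
    Scenario("near float max", "edge_case", [1e308, 1e308], lambda out: out == 1e308),
]
print(run_suite(average_reading, suite))  # {'scenario': 1.0, 'edge_case': 0.0}
```

Both edge cases fail: the empty list raises `ZeroDivisionError`, and summing two values near the float maximum overflows to infinity. Surfacing failures like these before deployment is the purpose of edge-case analysis.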

For example, IBM Watson for Oncology, which was reportedly trained largely on synthetic patient cases, demonstrated the importance of validating synthetic data, while Amazon's experimental hiring tool, reportedly trained on résumés submitted mostly by men, highlighted the need for diverse training datasets to prevent bias. These case studies underscore the value of comprehensive testing strategies in ensuring AI safety.

Establishing Ethical and Regulatory Frameworks

Ethical and regulatory frameworks guide AI development and deployment. You can adopt principles outlined by organizations like the WHO, which emphasize autonomy, welfare, and accountability. These frameworks ensure AI systems respect human rights and promote public interest.

- Protect autonomy.
- Promote welfare, safety, and public interest.
- Ensure transparency, explainability, and intelligibility.
- Promote accountability and responsibility.

Regulatory research highlights gaps in existing frameworks and suggests strategies to mitigate risks. For instance, the WHO’s guidelines for AI in healthcare provide a detailed exploration of ethical principles, ensuring AI technologies align with societal values. By establishing robust ethical and regulatory measures, you can address risks and foster trust in AI systems.

Promoting Collaboration Among Stakeholders

Collaboration among stakeholders plays a vital role in ensuring the safety and reliability of AI systems. By involving diverse groups such as developers, ethicists, policymakers, and end-users, you can address potential risks and improve the fairness of AI technologies. Each stakeholder brings unique insights that contribute to building robust and ethical systems.

Engaging stakeholders throughout the AI lifecycle helps identify and mitigate risks related to bias and fairness. For example:

- Developers and theorists can design systems that align with ethical guidelines and regulatory standards.
- Ethicists ensure that AI strategies prioritize transparency and accountability.
- Users provide feedback that highlights real-world challenges and areas for improvement.

This collective effort fosters trust and ensures that AI systems align with societal values. When stakeholders collaborate, they create a shared responsibility for the outcomes of AI technologies. This approach not only strengthens safety measures but also promotes innovation by addressing diverse perspectives.

You can also leverage collaboration to establish industry-wide standards. For instance, partnerships between tech companies and regulatory bodies can lead to the development of frameworks that guide ethical AI deployment. These frameworks ensure that AI systems remain fair, transparent, and aligned with public interest.

By promoting collaboration, you contribute to a culture of accountability and shared learning. This culture is essential for navigating the complexities of AI safety and building systems that benefit society as a whole.

Stakeholders Responsible for AI Safety

Governments and Policymakers

Governments and policymakers play a pivotal role in ensuring the safety of artificial intelligence systems. By establishing regulations and ethical guidelines, they create a framework that governs the development and deployment of AI technologies. Policies like the EU AI Act and the Blueprint for an AI Bill of Rights set standards for transparency, accountability, and fairness. These measures ensure that AI systems align with societal values and operate within legal boundaries.

You can also see governments fostering collaboration between public and private sectors. Initiatives like the Hiroshima AI Process bring together global stakeholders to address safety challenges. Policymakers must remain proactive, adapting regulations to keep pace with technological advancements. Their efforts not only protect citizens but also promote innovation by creating a stable environment for AI development.

Tech Companies and Developers

Tech companies and developers bear significant responsibility for implementing AI safety measures. As creators of these systems, they must prioritize safety from the design phase through deployment.

- Developers and engineers design and test AI systems to ensure reliability and ethical alignment.
- Leading companies invest in dedicated AI safety teams and establish ethical guidelines.
- Frameworks address risks through tools like bias detection, human oversight systems, and explainable AI.
- Industry-wide collaboration fosters the sharing of best practices and the development of robust safety standards.
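Human oversight systems often take the form of a review gate: the system acts on its own only when it is confident and the stakes are low, and routes everything else to a person. This sketch assumes the model produces a calibrated confidence score; the `Decision` type, the 0.9 threshold, and the rule that every "deny" decision goes to review are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float  # model's estimated probability for its label, 0.0 to 1.0


def route(decision: Decision, threshold: float = 0.9,
          always_review: frozenset = frozenset({"deny"})) -> str:
    """Return "auto" for confident, low-stakes decisions and "human_review" otherwise."""
    if not 0.0 <= decision.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if decision.label in always_review:
        return "human_review"  # high-stakes outcomes always get a human check
    return "auto" if decision.confidence >= threshold else "human_review"


queue = [Decision("approve", 0.97), Decision("approve", 0.62), Decision("deny", 0.99)]
print([route(d) for d in queue])  # ['auto', 'human_review', 'human_review']
```

Sending certain outcomes to review regardless of confidence reflects a design choice: a highly confident model can still be wrong, and some errors are costly enough to justify a human check every time.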

By adopting these measures, you can ensure that AI technologies remain trustworthy and beneficial. Companies that prioritize safety not only mitigate risks but also build public trust, which is essential for long-term success.

Researchers and Academic Institutions

Researchers and academic institutions contribute to AI safety by advancing knowledge and developing innovative solutions. Their work often focuses on addressing complex challenges, such as algorithmic fairness and robustness. Funding plays a crucial role in enabling these efforts.

| Funding Type | Description |
|---|---|
| Research expenses | Covers frontier model API access, cloud compute, and other research needs. |
| Discrete projects | Covers salaries and research costs for specific projects lasting 0.5 to 2 years. |
| Academic start-up packages | Provides start-up funding for new academic labs or faculty members. |
| Existing research institute support | Offers general support for established non-academic research organizations. |
| New research institute funding | Funds the creation of new research organizations or teams within existing ones. |

By leveraging these resources, researchers can explore innovative approaches to AI safety. Their findings often inform industry practices and policy decisions, ensuring that AI systems evolve responsibly. You can rely on their expertise to address emerging risks and develop frameworks that align with societal needs.

The Role of the Public in AI Safety

The public plays a crucial role in shaping the safety and ethical use of artificial intelligence. As an end-user, your feedback and concerns directly influence how AI systems are designed, tested, and regulated. Public opinion surveys consistently show that people are both concerned about AI's risks and optimistic about its potential benefits. For example, a January 2023 survey revealed that 46% of U.S. respondents believed AI would bring equal amounts of harm and good. This balanced perspective highlights the importance of involving the public in AI governance.

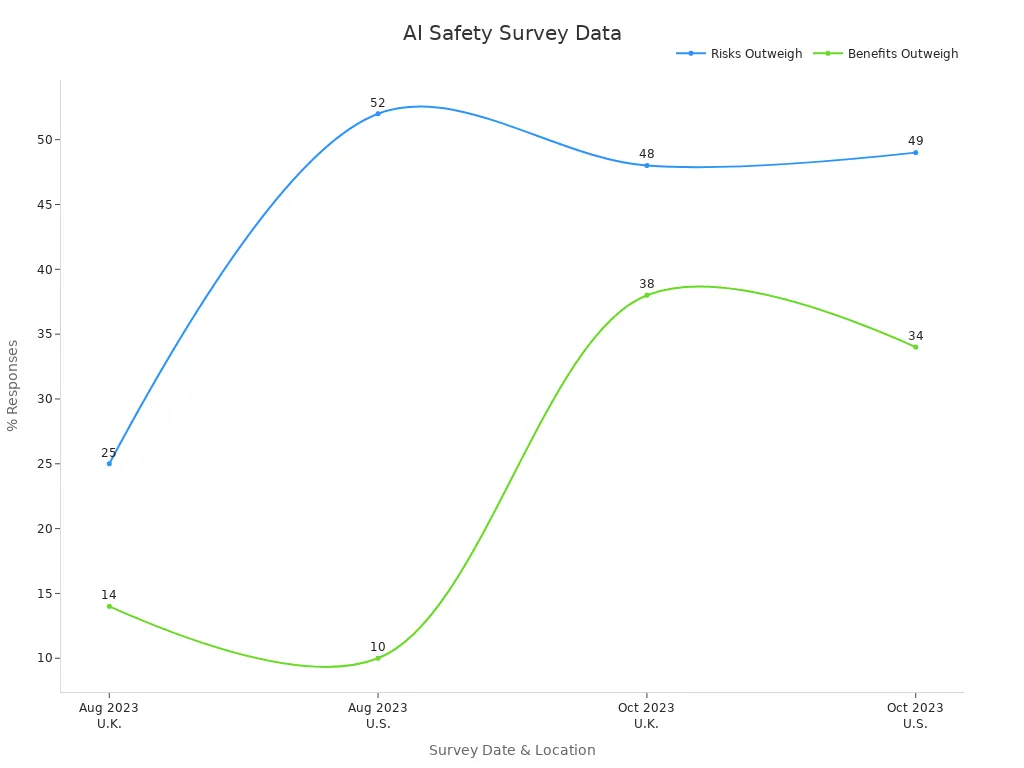

Community engagement initiatives provide valuable insights into public sentiment. Recent surveys conducted in the U.S. and U.K. illustrate shifting attitudes toward AI safety. In October 2023, 52% of U.S. respondents expressed concern about AI, while only 10% felt excited about its advancements. Similarly, 48% of U.K. respondents believed the risks of AI outweighed its benefits. These findings emphasize the need for transparent communication between developers, policymakers, and the public.

| Survey Date | Location | Concerned (%) | Excited (%) | Risks Outweigh Benefits (%) | Benefits Outweigh Risks (%) |
|---|---|---|---|---|---|
| October 2023 | U.S. | 52 | 10 | 49 | 34 |
| August 2023 | U.S. | 38 | 17 | 52 | 10 |
| October 2023 | U.K. | N/A | N/A | 48 | 38 |
| August 2023 | U.K. | N/A | N/A | 25 | 14 |

You can also contribute to AI safety by participating in public consultations, attending community forums, or advocating for ethical AI practices. These actions ensure that your voice is heard and that AI systems align with societal values. By staying informed and engaged, you help create a future where AI serves the public good while minimizing risks.

AI safety serves as the foundation for responsible development in 2025. You can evaluate success through metrics like risk assessment, impact analysis, and system benchmarking. Collaborative efforts, including diverse participant selection and cross-validation, enhance reliability and fairness. By fostering partnerships among stakeholders, you address risks and build systems that align with societal values. Proactive measures ensure AI systems remain ethical, transparent, and capable of benefiting communities. Together, these actions create a trustworthy future where AI drives innovation responsibly.

FAQ

1. What is the difference between AI safety and AI ethics?

AI safety ensures systems operate reliably and avoid harm. AI ethics focuses on moral principles guiding AI use, such as fairness and accountability. You need both to create trustworthy systems that align with societal values.

2. How can you identify bias in AI systems?

You can detect bias by analyzing training data for underrepresented groups and testing outputs for fairness. Tools like algorithmic auditing and explainable AI help you uncover hidden biases and improve system reliability.
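As an illustration of testing outputs for fairness, the sketch below computes each group's selection rate (the share of positive predictions) and the gap between the highest and lowest rates, a metric known as the demographic parity difference. It is only one of several fairness criteria, and the data and group labels here are hypothetical; maintained libraries such as Fairlearn provide production implementations of metrics like this.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions, groups):
    """Demographic parity difference: highest minus lowest selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(preds, groups))       # 0.5
```

A gap of 0 means every group is selected at the same rate; how large a gap is acceptable depends on the context and should not be read from this metric alone.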

3. Why is transparency important in AI safety?

Transparency lets you understand how AI systems make decisions. It builds trust and ensures accountability. Frameworks like the EU AI Act require clear documentation, helping you verify compliance and ethical use.

4. What role does public opinion play in AI safety?

Public opinion shapes regulations and ethical standards. Surveys reveal concerns about risks and benefits, guiding developers and policymakers. Your feedback ensures AI systems align with societal needs and values.

5. How do governments regulate AI safety?

Governments enforce laws like the EU AI Act and GDPR to ensure transparency, accountability, and fairness. They also foster collaboration between stakeholders to address emerging risks and promote innovation responsibly.